Explains how precision, recall, F1, and AUC help balance catching threats in DMs against avoiding false positives in public content, and covers dataset and multilingual challenges.

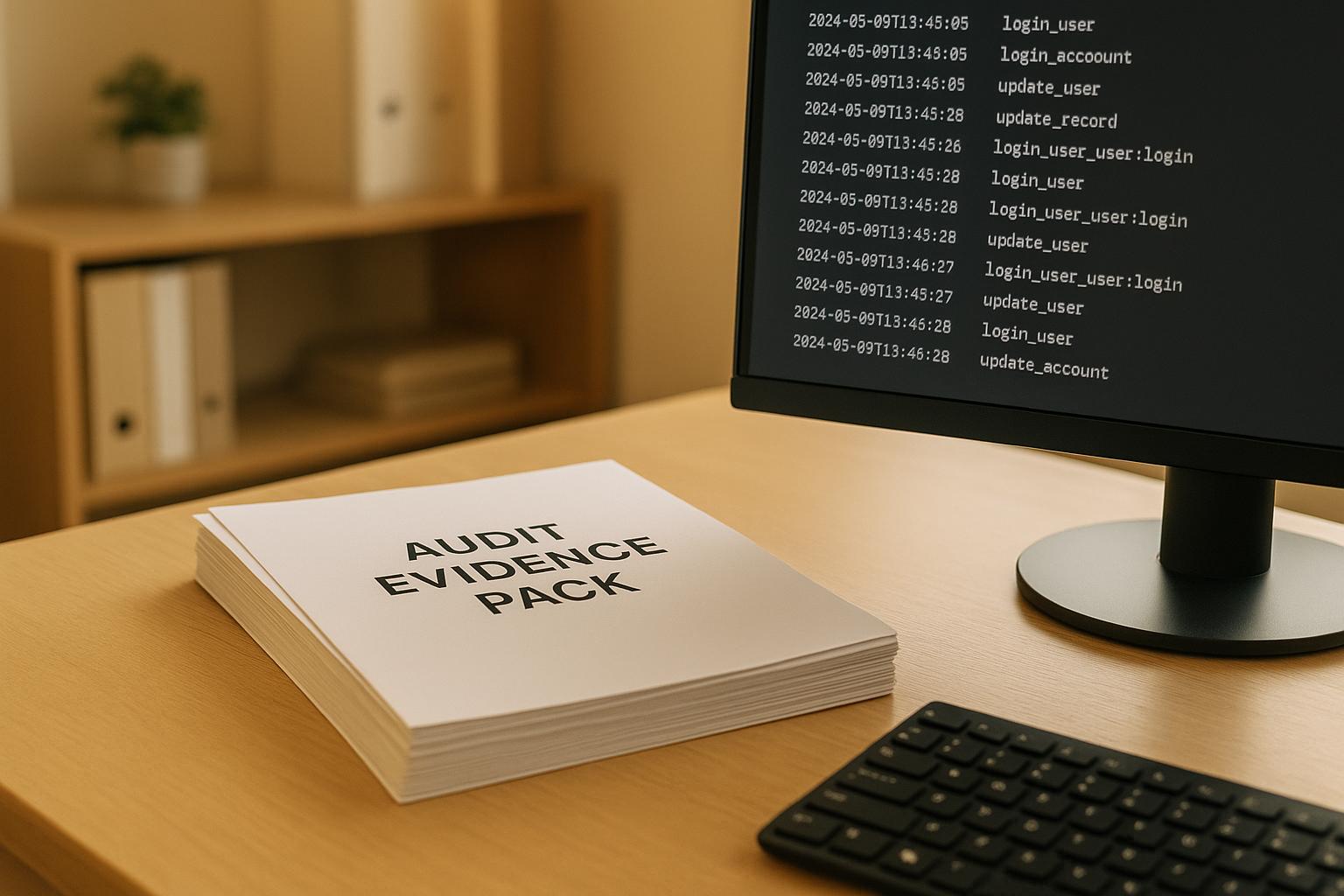

Meticulous audit evidence packs with raw logs, ISO 8601 timestamps, and a clear chain of custody are essential for validating moderation decisions and speeding up audits.

AI flags threats, harassment, and coordinated attacks in social messages using outlier detection and classifiers across 40+ languages.

How biased data, cultural gaps, and feedback loops skew AI moderation—and practical fixes like diverse datasets, adversarial debiasing, XAI, and human review.

How AI moderation automates detection, audit logging, and multilingual DM monitoring to help platforms meet DSA, GDPR, and evolving U.S. laws.

How personalized federated learning tailors on-device AI moderation to reduce false positives, protect user privacy, and detect multilingual threats.

AI turns terabytes of digital evidence into searchable, court-ready case files while preserving privacy and the chain of custody.

Explains how emojis are repurposed to hide bullying, grooming, and extremist signals—and why context-aware AI moderation is essential to spot harmful patterns.

Guide to building real-time moderation: clear rules, AI + human layers, escalation tiers, event-specific settings, multilingual support, and crisis protocols.

Automated real-time capture preserves evidence of disappearing online abuse with timestamps, metadata, and tamper-proof logs to support safety teams, legal cases, and brand protection.