Audit Evidence Packs: What to Include

When handling moderation escalations, audit evidence packs are essential for documenting actions and ensuring compliance with legal and regulatory standards. These packs consolidate system logs, timestamps, moderation decisions, and supporting documents into a structured format. They serve as critical tools for legal teams, regulators, and safety managers to verify accountability and track incidents effectively.

Key Takeaways:

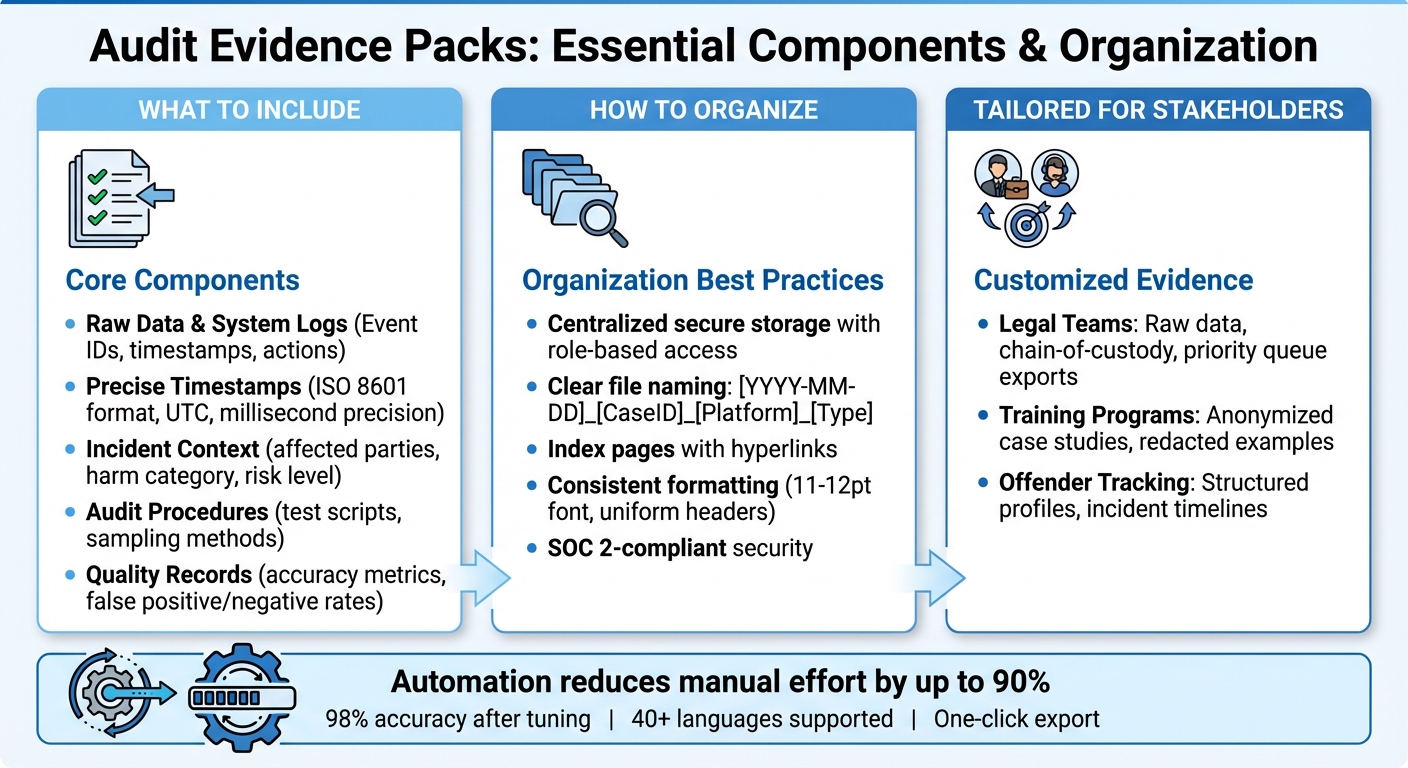

- Core Components: Include detailed system logs, precise timestamps (ISO 8601 format), incident context, and documented audit procedures.

- Organization: Use centralized, secure storage with role-based access, clear file naming conventions, and index pages for easy navigation.

- Tailored Evidence: Customize for stakeholders like legal teams (raw data, chain-of-custody), training programs (anonymized case studies), and offender tracking (structured profiles).

- Automation Benefits: Tools like Guardii reduce manual effort by up to 90%, ensuring high accuracy and readiness for audits.

By maintaining well-organized and thorough evidence packs, teams can streamline audits, support legal claims, and improve moderation processes while protecting athletes, creators, and brands.

Essential Components of Audit Evidence Packs for Moderation Compliance


What to Include in an Audit Evidence Pack

When preparing an audit evidence pack, it’s crucial to include detailed system data that demonstrates compliance and supports legal or regulatory reviews. Each component plays a specific role in showing proper procedures and decision-making, ensuring external auditors, legal teams, or regulators can fully understand the records without needing additional clarification.

Raw Data and System Logs

At the heart of any audit evidence pack are raw, unedited system logs. These logs should document every action - both automated and manual - performed by your moderation systems. This includes activities like auto-hiding comments, detecting threats in direct messages, escalating issues, and any manual overrides by human reviewers.

Each log entry should include structured fields for clarity and accuracy, allowing anyone to reconstruct events effortlessly. Key fields might include:

- Unique event ID

- Platform and content type

- Raw content (with necessary privacy redactions)

- Actor and target IDs

- Action taken and its source (AI, rules engine, human, or third-party)

- Reason codes and confidence scores

- Pre- and post-action statuses

Here’s an example of a detailed log entry:

"EventID=9834721; Platform=Instagram; ContentType=Comment; Content='[redacted slur]'; ActorID=HASH_abc123; TargetProfileID=Team_USA_Player_12; DetectedBy=Guardii_AI_v3.2; RiskCategory=Racist Abuse; ConfidenceScore=0.97; Action=Auto-Hide; ActionSource=AI; PreviousStatus=Visible; NewStatus=Hidden; CreatedAt=2025-06-10T19:04:23.512Z; ActionAt=2025-06-10T19:04:24.005Z; EscalatedTo=Safety_Tier2; EscalationAt=2025-06-10T19:05:10.220Z."

This level of detail provides a clear timeline of events, unlike a vague entry such as, "Comment removed – violation detected", which lacks critical specifics like timestamps, actors, or decision-making context.
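A structured entry like the one above can also be checked by a script before it goes into a pack. The following is a minimal sketch, assuming the `Key=Value; Key=Value` layout of the example entry. The `REQUIRED_FIELDS` set and both function names are illustrative choices, not part of any particular logging product.

```python
from typing import Dict, List

# Assumed minimum set of fields for an audit-ready entry (illustrative only).
REQUIRED_FIELDS = {
    "EventID", "Platform", "ContentType", "ActorID",
    "Action", "ActionSource", "CreatedAt", "ActionAt",
}


def parse_log_entry(line: str) -> Dict[str, str]:
    """Split a 'Key=Value; Key=Value' log line into a dictionary.

    Limitation: a value that itself contains ';' would be split incorrectly.
    """
    record: Dict[str, str] = {}
    # Drop surrounding whitespace and a trailing sentence period, if any.
    for part in line.strip().rstrip(".").split(";"):
        part = part.strip()
        if not part:
            continue
        key, sep, value = part.partition("=")
        if not sep:
            raise ValueError(f"Malformed field: {part!r}")
        record[key.strip()] = value.strip().strip("'")
    return record


def missing_fields(record: Dict[str, str]) -> List[str]:
    """Return the required fields that are absent from a parsed entry."""
    return sorted(REQUIRED_FIELDS - set(record))


entry = (
    "EventID=9834721; Platform=Instagram; ContentType=Comment; "
    "ActorID=HASH_abc123; Action=Auto-Hide; ActionSource=AI; "
    "CreatedAt=2025-06-10T19:04:23.512Z; ActionAt=2025-06-10T19:04:24.005Z"
)
record = parse_log_entry(entry)
print(record["Action"])        # Auto-Hide
print(missing_fields(record))  # []
```

A vague entry such as "Comment removed" has no `Key=Value` pairs at all, so `parse_log_entry` rejects it with a `ValueError` instead of letting it pass silently into the pack.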

Timestamps and Incident Context

Every piece of evidence must include highly precise timestamps. These timestamps should be recorded in Coordinated Universal Time (UTC) with at least second-level precision - ideally down to milliseconds - and formatted in ISO 8601 to avoid any confusion during international reviews. It’s essential to capture different timestamps for key moments, such as when content was posted, when an action was taken, and when human review occurred.
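As a rough illustration of this timestamp guidance, the sketch below uses only Python's standard library to produce UTC timestamps with millisecond precision and to measure the gap between two recorded moments. The function names are hypothetical, and the sample values are taken from the example log entry earlier in this article.

```python
from datetime import datetime, timedelta, timezone


def utc_now_iso() -> str:
    """Current time as ISO 8601 in UTC with millisecond precision."""
    now = datetime.now(timezone.utc)
    return now.isoformat(timespec="milliseconds").replace("+00:00", "Z")


def parse_iso_utc(value: str) -> datetime:
    """Parse an ISO 8601 timestamp that ends in 'Z'.

    The 'Z' suffix is rewritten to '+00:00' so the call also works on
    Python versions older than 3.11.
    """
    return datetime.fromisoformat(value.replace("Z", "+00:00"))


def latency_ms(start: str, end: str) -> int:
    """Whole milliseconds elapsed between two ISO 8601 timestamps."""
    delta = parse_iso_utc(end) - parse_iso_utc(start)
    return delta // timedelta(milliseconds=1)


created_at = "2025-06-10T19:04:23.512Z"
action_at = "2025-06-10T19:04:24.005Z"
print(latency_ms(created_at, action_at))  # 493
```

Storing each key moment as its own timestamp makes response-time figures like this one reproducible by an auditor, so the pack does not have to assert them on trust.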

Incident context is equally important. It transforms raw data into a cohesive narrative by explaining the event, identifying affected parties (using compliant identifiers), categorizing the harm, assessing the risk level, outlining the escalation process, and detailing the response timeline and final outcomes.

Audit Procedures and Quality Records

To demonstrate that moderation controls are effective and consistently applied, include documented audit procedures and quality assurance records. This section should outline:

- Audit plans specifying the scope (e.g., "Instagram DM sexual harassment detection, Q2 sample")

- Sampling methods (random, risk-based, or stratified) and sample sizes

- Test scripts or checklists used to evaluate AI models, rules, and workflows
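To make the sampling method concrete, here is a minimal sketch of stratified sampling, assuming each reviewed case is a dictionary with a `lang` field (a hypothetical schema). A fixed seed is used so that the same sample can be regenerated and shown to an auditor later.

```python
import random
from collections import defaultdict
from typing import Callable, Dict, Hashable, List


def stratified_sample(
    items: List[dict],
    key: Callable[[dict], Hashable],
    per_stratum: int,
    seed: int = 0,
) -> List[dict]:
    """Draw up to `per_stratum` items at random from each stratum."""
    rng = random.Random(seed)  # fixed seed keeps the sample reproducible
    groups: Dict[Hashable, List[dict]] = defaultdict(list)
    for item in items:
        groups[key(item)].append(item)

    sample: List[dict] = []
    for name in sorted(groups):  # stable stratum order (keys must be sortable)
        members = groups[name]
        sample.extend(rng.sample(members, min(per_stratum, len(members))))
    return sample


cases = [{"id": i, "lang": "es"} for i in range(10)]
cases += [{"id": i, "lang": "ar"} for i in range(10, 12)]
picked = stratified_sample(cases, key=lambda c: c["lang"], per_stratum=3, seed=42)
print(len(picked))  # 5 (three Spanish cases, both Arabic cases)
```

Recording the seed, the stratum key, and the per-stratum size in the audit plan lets a reviewer confirm that the sample was not hand-picked.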

Additionally, include detailed results, such as false positive and false negative rates, accuracy metrics broken down by category or language, and evidence of periodic reviews. For example, platforms like Guardii, which handle moderation in over 40 languages, should include language-specific testing details and address any limitations or mitigation strategies for low-resource languages.
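The per-language error rates mentioned above could be derived from a human-reviewed sample along the following lines. This is a sketch under stated assumptions: each review is a tuple of language, whether the system flagged the item, and whether the reviewer judged it harmful. That input shape is hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, Optional, Tuple

Review = Tuple[str, bool, bool]  # (language, flagged_by_system, harmful_per_reviewer)


def rates_by_language(reviews: Iterable[Review]) -> Dict[str, Dict[str, Optional[float]]]:
    """Compute false positive and false negative rates for each language."""
    counts = defaultdict(lambda: {"tp": 0, "fp": 0, "tn": 0, "fn": 0})
    for lang, flagged, harmful in reviews:
        if flagged:
            cell = "tp" if harmful else "fp"
        else:
            cell = "fn" if harmful else "tn"
        counts[lang][cell] += 1

    report: Dict[str, Dict[str, Optional[float]]] = {}
    for lang, c in counts.items():
        negatives = c["fp"] + c["tn"]  # items the reviewer judged harmless
        positives = c["tp"] + c["fn"]  # items the reviewer judged harmful
        report[lang] = {
            # None marks a rate that cannot be computed from this sample.
            "false_positive_rate": c["fp"] / negatives if negatives else None,
            "false_negative_rate": c["fn"] / positives if positives else None,
            "sample_size": sum(c.values()),
        }
    return report


sample = [
    ("en", True, True), ("en", True, False),
    ("en", False, False), ("en", False, True),
]
print(rates_by_language(sample)["en"]["false_positive_rate"])  # 0.5
```

Reporting the sample size next to each rate matters most for low-resource languages, where a handful of reviewed items can make a rate look better or worse than it really is.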

Records of model reviews and improvements are also essential. Include retraining logs, change approvals, and cross-check forms that verify consistency and identify potential biases. This level of documentation ensures transparency and builds trust in your moderation processes.

The next sections will explain how to structure this evidence to meet the specific needs of different stakeholders.

How to Organize and Document Evidence Packs

Once you've gathered system logs, timestamps, and quality records, the next step is ensuring that evidence is easy to access and review. Poor organization can slow audits and create unnecessary confusion. A clear structure and consistent formatting turn raw data into a polished, audit-ready resource. Here’s how to efficiently structure and present your evidence.

Centralized Storage with Controlled Access

All evidence files - such as logs, screenshots, reports, and exports - should be stored in a secure, centralized repository with role-based access. This could mean using a governance, risk, and compliance (GRC) platform or enterprise-grade cloud storage that includes SOC 2–compliant security, single sign-on (SSO), and multi-factor authentication. Organize evidence by audit cycle, control ID, or case ID into folders like: "2025 Q1 – Social Media Safety Audit – Case 001."

If you're using tools like Guardii to monitor online abuse, automate the export of audit logs and evidence directly into these repositories. This setup ensures that relevant teams - such as legal, safety, or brand management - can access the specific materials they need, like DM harassment cases or sponsor-related incidents, without exposing sensitive files to unauthorized users. By integrating automated monitoring with secure storage, evidence is consistently captured and categorized for efficient review.

Index Pages and Navigation Links

Build a front-page index that lists all evidence items with hyperlinks to their locations. This index, often created as a PDF or spreadsheet, should include key details such as:

- Item number

- Incident or case ID

- Date and time

- Platform or system

- Evidence type (e.g., log export, screenshot, DM transcript)

- Brief description

- File path or hyperlink

For larger evidence packs, consider adding filters for incident severity, business unit, or harm type. In digital formats, hyperlink every reference to an incident or case ID so reviewers can easily jump from summary findings to the corresponding evidence. For instance, an executive summary might include "Incident 2025-013 – high-severity racial abuse toward athlete", with a link to the folder containing comment exports, DM transcripts, and legal assessments. This approach is especially helpful for remote audits, where reviewers working across time zones need to quickly navigate large volumes of material.
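An index with the columns listed above can be generated from metadata rather than typed by hand. The sketch below writes a CSV index with Python's standard `csv` module. The column names and the sample rows are illustrative assumptions.

```python
import csv
import io
from typing import Dict, List

INDEX_COLUMNS = [
    "item_number", "case_id", "timestamp_utc", "platform",
    "evidence_type", "description", "file_path",
]


def build_index_csv(items: List[Dict[str, str]]) -> str:
    """Return a CSV index, sorted by case ID and then by timestamp.

    Each item must supply every column except item_number, which is
    assigned here so that numbering always follows the sorted order.
    """
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=INDEX_COLUMNS)
    writer.writeheader()
    ordered = sorted(items, key=lambda i: (i["case_id"], i["timestamp_utc"]))
    for number, item in enumerate(ordered, start=1):
        writer.writerow({"item_number": number, **item})
    return buffer.getvalue()


index = build_index_csv([
    {
        "case_id": "2025-013",
        "timestamp_utc": "2025-03-15T18:22:05Z",
        "platform": "Instagram",
        "evidence_type": "log export",
        "description": "Comment moderation log for the incident window",
        "file_path": "2025-Q1/CASE-013/2025-03-15_CASE-013_Instagram_CommentLog_v2.pdf",
    },
])
print(index.splitlines()[0])
```

Because the file path is stored in its own column, the same data can later be rendered as hyperlinks in a spreadsheet or PDF front page.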

Consistent Formatting and Labels

Standardize your file naming with a clear format like:

[YYYY-MM-DD]_[CaseID]_[Platform]_[EvidenceType]_[Version].[ext]

For example: 2025-03-15_CASE-013_Instagram_CommentLog_v2.pdf. Using ISO-style dates ensures chronological sorting and avoids confusion.
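A naming convention holds up better when a script enforces it. The following sketch builds and validates file names in the underscore-separated layout of the example above. The exact pattern, including the `CASE-` prefix and the `v` version marker, is inferred from that one example and would need adjusting to your own scheme.

```python
import re
from datetime import date
from typing import Dict

FILENAME_RE = re.compile(
    r"^(?P<date>\d{4}-\d{2}-\d{2})"
    r"_(?P<case_id>CASE-\d+)"
    r"_(?P<platform>[A-Za-z0-9]+)"
    r"_(?P<evidence_type>[A-Za-z0-9]+)"
    r"_v(?P<version>\d+)"
    r"\.(?P<ext>[a-z0-9]+)$"
)


def parse_filename(name: str) -> Dict[str, str]:
    """Split a conforming file name into its parts, or raise ValueError."""
    match = FILENAME_RE.match(name)
    if match is None:
        raise ValueError(f"File name does not follow the convention: {name!r}")
    fields = match.groupdict()
    date.fromisoformat(fields["date"])  # rejects impossible dates such as 2025-13-40
    return fields


def build_filename(day: date, case_id: str, platform: str,
                   evidence_type: str, version: int, ext: str) -> str:
    """Assemble a file name and confirm it passes the same validation."""
    name = f"{day.isoformat()}_{case_id}_{platform}_{evidence_type}_v{version}.{ext}"
    parse_filename(name)
    return name


print(build_filename(date(2025, 3, 15), "CASE-013", "Instagram", "CommentLog", 2, "pdf"))
# 2025-03-15_CASE-013_Instagram_CommentLog_v2.pdf
```

Running `parse_filename` over every file in a pack before export is a cheap way to catch stray names such as `final_v2_REAL.pdf`.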

Maintain uniform formatting across all documents. Use a legible sans-serif font (11–12 pt), consistent header styles (H1 for summaries, H2 for evidence subsections), and uniform page layouts. Each incident summary should include a header block with key details like case ID, date/time, platform, reporter, classification (e.g., bullying, hate speech), and status. Screenshots and log excerpts should have captions that specify their source, extraction date/time, and relevance. This level of consistency makes it easier for safety teams, legal counsel, and auditors to quickly locate and understand key information without deciphering varying formats for each case.


Tailoring Evidence Packs for Different Teams

Different stakeholders require different types of evidence, tailored to their specific needs. For instance, a legal team preparing for litigation will need detailed audit trails and raw data, while a training coordinator might only require anonymized case studies to protect privacy. The key is to customize evidence packs to provide exactly what's necessary, safeguarding sensitive information in the process. Below, we break down how to approach evidence customization for legal teams, training programs, and offender tracking.

Legal Teams and Incident Response

When it comes to legal counsel or incident response, evidence must meet the high standards required for court or regulatory proceedings. This means including:

- Priority queue exports that show how high-risk content was flagged and escalated.

- Multilingual harassment evidence with certified translations, especially for cases crossing international borders.

- Moderation records that document key actions, such as auto-hiding comments or quarantining threatening messages.

To support admissibility and verification, include a clear chain-of-custody record. This should detail how evidence was collected, exported, securely stored, and accessed, with timestamps for every step. These measures follow the logic of audit standards such as PCAOB AS 1105, which requires sufficient appropriate evidence to support assertions such as occurrence and rights and obligations.
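One common way to make a custody record tamper-evident is to hash each evidence file and link every log entry to the one before it. The sketch below shows that idea using SHA-256 from Python's standard library. The field names are assumptions, and a hash chain only makes later changes detectable. It does not by itself establish legal admissibility.

```python
import hashlib
import json
from typing import Dict, List

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def sha256_hex(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


def append_custody_event(log: List[Dict[str, str]], *, evidence_bytes: bytes,
                         action: str, actor: str, timestamp_utc: str) -> Dict[str, str]:
    """Append one custody step (collected, exported, accessed, ...) to the log."""
    entry = {
        "action": action,
        "actor": actor,
        "timestamp_utc": timestamp_utc,
        "evidence_sha256": sha256_hex(evidence_bytes),
        "previous_entry_hash": log[-1]["entry_hash"] if log else GENESIS_HASH,
    }
    # Hash the entry body (computed before entry_hash itself is added).
    entry["entry_hash"] = sha256_hex(json.dumps(entry, sort_keys=True).encode("utf-8"))
    log.append(entry)
    return entry


def verify_custody_log(log: List[Dict[str, str]]) -> bool:
    """Recompute every hash and check that the chain is unbroken."""
    previous_hash = GENESIS_HASH
    for entry in log:
        body = {k: v for k, v in entry.items() if k != "entry_hash"}
        expected = sha256_hex(json.dumps(body, sort_keys=True).encode("utf-8"))
        if entry["previous_entry_hash"] != previous_hash or entry["entry_hash"] != expected:
            return False
        previous_hash = entry["entry_hash"]
    return True


custody_log: List[Dict[str, str]] = []
export = b"EventID=9834721; Action=Auto-Hide"
append_custody_event(custody_log, evidence_bytes=export, action="exported",
                     actor="safety_tier2", timestamp_utc="2025-06-10T19:10:00.000Z")
append_custody_event(custody_log, evidence_bytes=export, action="accessed",
                     actor="legal_counsel", timestamp_utc="2025-06-11T09:00:00.000Z")
print(verify_custody_log(custody_log))  # True
```

If anyone later edits an entry or swaps the evidence file, the recomputed hashes stop matching and `verify_custody_log` returns `False`.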

Automation plays a crucial role here. For example, automated exports of priority queue records and logs of flagged direct messages help preserve suspicious content for potential law enforcement investigations. Applying the same export process to content in all 40+ supported languages keeps the evidence trail consistent across jurisdictions.

Next, let’s explore how evidence is adapted for training and brand safety purposes.

Training Programs and Brand Safety Reviews

For training and brand safety, evidence packs should focus on anonymized, instructive case studies. This involves redacting sensitive information like usernames and IP addresses, and replacing real images with blurred placeholders or aggregated summaries (e.g., "10 similar toxic comments hidden"). Each case study should present:

- A description of the incident.

- Redacted excerpts or screenshots.

- The detected risk category (e.g., hate speech, sexual harassment, self-harm).

- Actions taken in response.
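The redaction step for these case studies can be partly scripted. The sketch below replaces @-handles with keyed pseudonyms and masks IPv4 addresses. The regular expressions and the `user_` prefix are illustrative assumptions, and pattern-based redaction will miss names written in free text, so a human check is still needed.

```python
import hashlib
import hmac
import re

IPV4_RE = re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b")
# A handle: letters, digits, underscores, and inner dots (no trailing dot).
HANDLE_RE = re.compile(r"@([A-Za-z0-9_](?:[A-Za-z0-9_.]*[A-Za-z0-9_])?)")


def pseudonymize(username: str, secret_key: bytes) -> str:
    """Map a username to a stable pseudonym using a keyed hash (HMAC-SHA256).

    The same user always gets the same pseudonym, so patterns stay visible,
    but the mapping cannot be recomputed without the secret key.
    """
    digest = hmac.new(secret_key, username.lower().encode("utf-8"), hashlib.sha256)
    return f"user_{digest.hexdigest()[:10]}"


def redact_text(text: str, secret_key: bytes) -> str:
    """Mask IPv4 addresses and replace @-handles with pseudonyms."""
    text = IPV4_RE.sub("[REDACTED_IP]", text)
    return HANDLE_RE.sub(lambda m: "@" + pseudonymize(m.group(1), secret_key), text)


key = b"replace-with-a-secret-kept-outside-the-training-pack"
print(redact_text("@Bad.Actor sent 3 DMs from 203.0.113.7.", key))
```

Using a keyed hash rather than a plain one means trainees cannot confirm a guess about who a pseudonym belongs to by hashing the suspected username themselves.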

For example, a case study might highlight how an athlete's Instagram account received 50 toxic direct messages in Spanish, with 48 of them auto-quarantined and translated. Another could walk through a single incident from detection to resolution, showing a two-hour response time. These examples demonstrate how protective measures safeguard sponsorships and uphold organizational reputation without exposing individuals.

Including metrics over time - such as the percentage of severe incidents auto-moderated versus manually reviewed or the number of threats blocked - further illustrates the effectiveness of safety measures. As noted in Guardii's 2024 Child Safety Report:

"The research clearly shows that preventative measures are critical. By the time law enforcement gets involved, the damage has often already been done".

Such anonymized evidence packs are invaluable for training new moderators, community managers, and PR teams. They help staff recognize and handle borderline cases while reinforcing brand safety protocols.

Finally, tracking repeat offenders adds another layer of proactive risk management.

Repeat-Offender Tracking

To monitor repeat offenders effectively, create structured profiles that include unique identifiers, incident timelines, severity ratings, action histories, and current status. These profiles should document behavior patterns, such as timing, targeted individuals, and recurring indicators, to help refine keyword models and inform preventive strategies.

For example:

- User XXX: 3 violations (2024-12-01 to 2025-01-15), repeated harassment of female athletes. Actions taken: quarantine and permanent ban.

Where permitted, track linkage indicators like similar usernames or overlapping device fingerprints to detect ban evasion. Establish clear escalation criteria - for instance, "after three severe incidents, escalate to legal or law enforcement" - to ensure fairness and consistency while minimizing bias claims.

For legal purposes, maintain a chronological dossier for repeat offenders. This dossier can be exported as a single evidence pack if legal action becomes necessary. Use tables with columns for User ID, Incident Date (YYYY-MM-DD, consistent with the ISO 8601 timestamps and file names used elsewhere in the pack), Violation Type, Action Taken, and Pattern Notes. Visual aids like timelines or frequency graphs can make these records easier to analyze.
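A structured profile with an explicit escalation rule might look like the sketch below. The class and field names are hypothetical, the three-incident threshold mirrors the example criterion above, and dates are stored as ISO 8601 strings in this sketch so that sorting them as text also sorts them chronologically.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Incident:
    date: str            # ISO 8601 date, e.g. "2025-01-15"
    violation_type: str
    severity: str        # "low", "medium", or "severe"
    action_taken: str


@dataclass
class OffenderProfile:
    user_id: str         # pseudonymous identifier, not a real name
    incidents: List[Incident] = field(default_factory=list)

    def severe_count(self) -> int:
        return sum(1 for i in self.incidents if i.severity == "severe")

    def should_escalate(self, threshold: int = 3) -> bool:
        """Apply the written rule: escalate at `threshold` severe incidents."""
        return self.severe_count() >= threshold

    def timeline(self) -> List[Incident]:
        """Incidents in chronological order, ready for a dossier table."""
        return sorted(self.incidents, key=lambda i: i.date)


profile = OffenderProfile(user_id="HASH_abc123")
profile.incidents.append(Incident("2025-01-15", "harassment", "severe", "permanent ban"))
profile.incidents.append(Incident("2024-12-01", "harassment", "severe", "quarantine"))
profile.incidents.append(Incident("2024-12-20", "harassment", "severe", "quarantine"))
print(profile.should_escalate())  # True
print(profile.timeline()[0].date)  # 2024-12-01
```

Encoding the threshold in one place means every account is judged by the same rule, which is easier to defend than case-by-case discretion.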

How Guardii Automates Evidence Pack Creation

Guardii simplifies the process of creating structured, audit-ready evidence, addressing the challenges of manual record-keeping. For sports organizations dealing with thousands of comments and DMs daily, manually documenting incidents is not just time-consuming - it’s nearly impossible to sustain. Guardii steps in by automating the entire process, cutting preparation time by up to 90%. Beyond collecting evidence, it also organizes and exports audit logs with ease, making the process both efficient and accurate.

Automated Logs and Export Options

Every moderation action is meticulously recorded by Guardii, capturing details like timestamps, user IDs, content snippets, detected languages, risk categories (e.g., hate speech, sexual harassment, threats), and the actions taken. This creates a clear and detailed record of who did what, when, and why.

With just one click, users can export comprehensive audit trails and evidence packs. These include indexed logs, screenshots, and metadata in formats like PDF, CSV, or ZIP, ready for immediate use in legal or compliance reviews. For example, a club managing 1,000 daily comments can compile a week’s worth of evidence in seconds. Early adopters have reported saving up to 95% of the time typically spent on moderation documentation. In one case, an NBA influencer used a 30-day evidence pack - spanning over 500 entries in 15 languages - to successfully support a restraining order filing. This process integrates seamlessly with multilingual compliance needs.

Platform-Compliant Moderation in 40+ Languages

Guardii takes compliance logging a step further by ensuring every moderation decision is documented and aligned with legal and audit requirements. It strictly follows Meta’s community standards, using approved Instagram APIs to auto-hide toxic comments instead of deleting them. Each action is logged with compliance flags and references to relevant rules. Evidence packs include side-by-side comparisons of the original content, detection scores, and Meta guideline citations, ensuring transparency.

Moderation spans over 40 languages, supporting consistent global standards. For instance, a U.S.-based soccer club can manage Spanish-language threats from Latin America or Arabic DMs while exporting multilingual logs complete with translations for legal reviews.

Accuracy Tuning and Stakeholder Protection

Guardii offers organizations the ability to fine-tune detection thresholds through a user-friendly dashboard. Teams can adjust sensitivity for specific categories, reducing false positives - such as misclassifying playful banter - by 20–30%, while still maintaining high accuracy for critical risks like explicit threats or harassment. Confidence scores (e.g., 92% threat probability) are included in exports, providing clear evidence of the system’s calibrated performance.
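The trade-off behind threshold tuning can be illustrated with a few lines of code. This sketch is not Guardii's dashboard or API. It is a generic, hypothetical example that counts false positives and false negatives on a small human-labeled sample at different confidence thresholds.

```python
from typing import Dict, Sequence, Tuple

Scored = Tuple[float, bool]  # (confidence score, harmful according to a human reviewer)


def errors_at_threshold(scored: Sequence[Scored], threshold: float) -> Dict[str, float]:
    """Count errors if every item scoring at or above `threshold` is actioned."""
    false_positives = sum(1 for score, harmful in scored if score >= threshold and not harmful)
    false_negatives = sum(1 for score, harmful in scored if score < threshold and harmful)
    return {
        "threshold": threshold,
        "false_positives": false_positives,
        "false_negatives": false_negatives,
    }


labeled_sample = [
    (0.97, True), (0.92, True), (0.81, False),
    (0.64, False), (0.58, True), (0.30, False),
]
for t in (0.60, 0.85, 0.95):
    print(errors_at_threshold(labeled_sample, t))
# {'threshold': 0.6, 'false_positives': 2, 'false_negatives': 1}
# {'threshold': 0.85, 'false_positives': 0, 'false_negatives': 1}
# {'threshold': 0.95, 'false_positives': 0, 'false_negatives': 2}
```

Raising the threshold removes the two false positives in this sample, but pushing it too far starts to miss harmful content. Including a table like this in the evidence pack documents why a given threshold was chosen.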

High-risk cases are flagged for human review, ensuring that automated decisions don’t override critical judgment. These reviews are also logged, adding another layer of accountability to the evidence pack. This balanced approach protects athletes, creators, and sponsors while maintaining a robust record for potential legal needs. Compliance audits have confirmed Guardii’s adherence to Meta’s standards, with accuracy rates exceeding 98% after tuning.

Summary

Organized evidence packs play a key role in ensuring compliance and supporting legal defense. By including raw data, accurate timestamps, and consistent formatting, teams can quickly verify incidents and establish timelines. PCAOB AS 1105 sets a comparable expectation for financial audits: evidence must be sufficient in quantity and appropriate in quality, meaning relevant and reliable.

However, manual evidence collection can be overwhelming. For organizations managing compliance frameworks like SOC 2 or ISO 27001, audits often require hundreds of individual evidence items. This makes manual collection a time-consuming and inefficient process. Automated systems offer a solution, cutting evidence collection time by 50–80% compared to manual methods. These systems ensure thoroughness by continuously capturing logs, screenshots, and metadata on a scheduled basis. This not only saves time but also enhances accuracy and reliability.

For teams focused on online safety and brand protection, tools like Guardii eliminate the need for manually documenting thousands of daily interactions, such as comments and direct messages. These systems automatically log key details - timestamps, user IDs, languages, risk categories, and compliance flags - creating audit-ready evidence packs. With a single click, teams can export indexed logs and metadata in a format that compliance and legal teams can immediately use.

The move toward continuous evidence collection further improves readiness for unplanned audits and regulatory inquiries. Automated systems maintain ongoing, comprehensive records that can be exported whenever needed. This ensures that essential data, from system logs to moderation actions, is accurately captured and readily accessible.

FAQs

How do automation tools like Guardii simplify creating audit evidence packs?

Automation tools, such as Guardii, simplify the process of creating audit-ready evidence packs. They automatically detect, isolate, and securely store harmful or suspicious content, cutting down on manual effort while making sure all essential information is properly recorded and easily accessible.

Equipped with features like real-time threat detection and detailed logging, these tools enhance compliance, strengthen legal preparedness, and boost overall safety. They also enable teams to respond more quickly and efficiently, minimizing risks and helping maintain a secure environment.

What steps can organizations take to keep their audit evidence packs secure and easy to access?

To keep audit evidence packs both secure and easily accessible, organizations should rely on secure digital storage systems. Features like encryption, role-based access controls, and audit trails are essential. These tools not only protect sensitive information but also ensure that authorized personnel can access the data when required.

Using cloud-based platforms with multi-factor authentication and routine backups adds another layer of protection against unauthorized access and potential data loss. Additionally, implementing version control is critical to preserve the integrity of the evidence, making it easier to comply with regulations and handle legal or regulatory reviews efficiently.

How should evidence packs be tailored for legal teams versus training purposes?

When creating evidence packs for legal teams, the emphasis should be on delivering thorough and verified documentation. This means including records of threats, flagged content, actions taken, and detailed audit logs. Adding contextual analysis can further support compliance and enforcement efforts, ensuring the legal team has a clear and complete picture.

For training purposes, evidence packs take on a different role. These are tailored to educate staff by incorporating real-world examples, practical best practices, and scenario-based materials. The aim is to help teams identify patterns, respond appropriately, and minimize false positives. By focusing on actionable insights, these packs can strengthen understanding and improve prevention strategies.