Real-Time Moderation: Best Practices

Real-time moderation keeps online spaces safe, especially during live events and other high-risk moments. By combining automated tools with human oversight, teams can address harmful content such as threats, harassment, and hate speech as soon as it appears. Here’s how to build an effective system:

- Clear Rules: Define specific prohibited behaviors (e.g., hate speech, threats, spam) and document them in a searchable playbook.

- Escalation Tiers: Categorize risks (low, medium, high) with response times and assigned teams.

- Layered Moderation: Use AI to filter content at scale, human moderators for complex cases, and user reporting as a safety net.

- Event-Specific Adjustments: Tailor rules for live events, sensitive audiences, or multilingual content.

- Performance Tracking: Monitor response times, accuracy, and community health metrics.

- Crisis Management: Prepare for emergencies like coordinated attacks or doxxing with clear escalation protocols.

- Moderator Well-being: Protect mental health with shift rotations, breaks, and support tools.

For example, tools like Guardii auto-hide toxic comments, detect DM threats, and provide evidence logs, ensuring safety while maintaining user engagement. Regular audits and updates keep systems effective against evolving threats. Moderation is not just about removing harmful content - it’s about creating trust and protecting communities.


Setting Up Safety Rules and Escalation Procedures

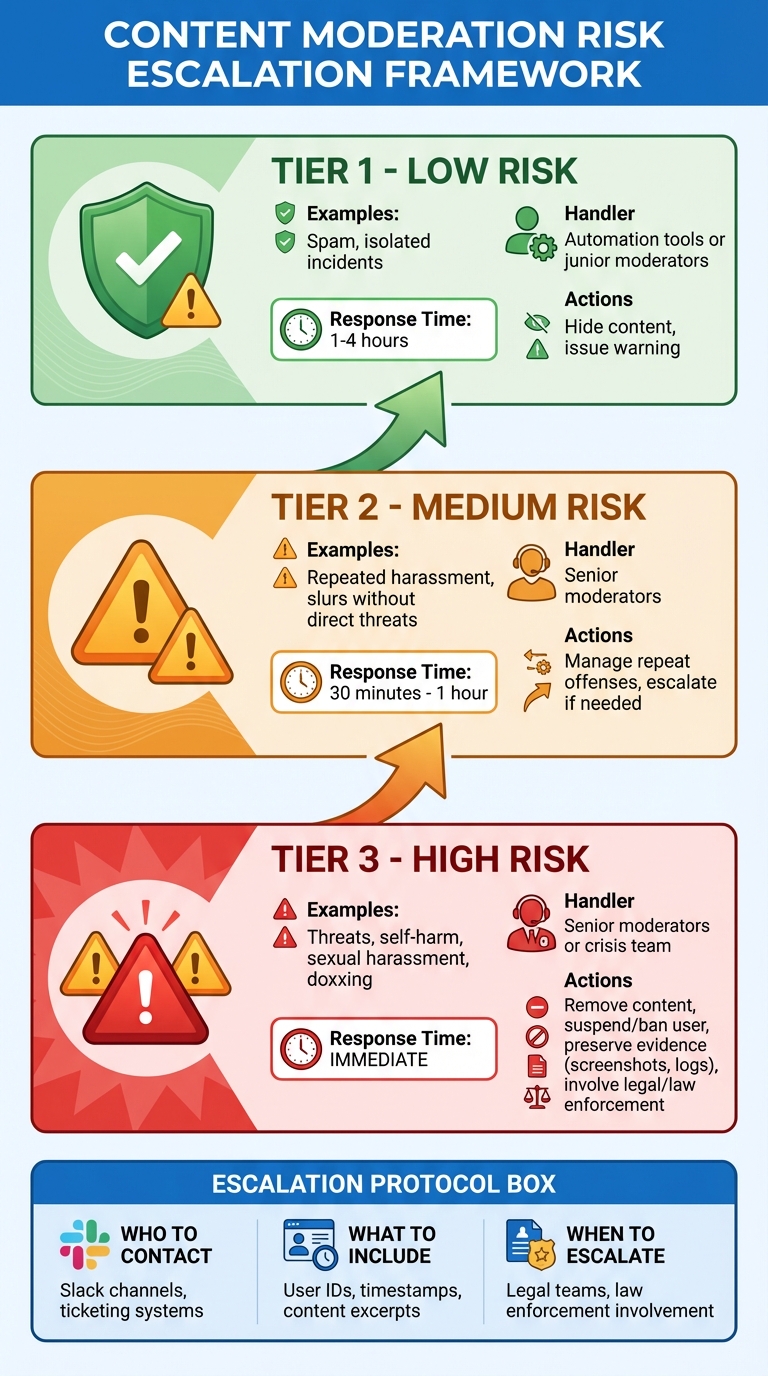

3-Tier Risk Escalation Framework for Real-Time Content Moderation

Defining Prohibited Content and Behaviors

Start by establishing clear, detailed rules that outline what content and behaviors are not allowed. Avoid vague statements like "be respectful" and instead provide specific examples that moderators can easily identify. Key areas to address include:

- Hate speech and discrimination: Any attacks targeting race, ethnicity, religion, gender, or sexual orientation.

- Threats and incitement to violence: Direct or indirect threats of harm.

- Sexual harassment: Unwanted advances, explicit messages, or suggestive language.

- Harassment and bullying: Repeated, targeted attacks or coordinated campaigns.

- Spam and scams: Phishing links, fake giveaways, or impersonation attempts.

Rules should be written in plain, straightforward language with concrete examples. For instance, a threat might look like, "I know where you live and I'm coming for you," while sexual harassment could involve repeated messages such as, "You're so hot, send me naked pics," or subtler behavior like repeatedly sending suggestive emojis. Moderators also need to recognize "algospeak": coded spellings or words that users adopt to slip past filters, such as writing "s3x" instead of "sex."

All guidelines should be compiled into a searchable moderation playbook. This playbook should include decision trees for common scenarios, helping moderators respond quickly and effectively during live interactions.
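To make a decision tree usable by both tooling and people, it can help to encode it as code or data. The Python sketch below is hypothetical: the `Incident` fields, category names, and action strings are illustrative assumptions rather than a standard schema, and the branches simply mirror the examples in this section.

```python
from dataclasses import dataclass


@dataclass
class Incident:
    """Facts a moderator records about a flagged item (hypothetical fields)."""
    category: str                 # e.g. "spam", "harassment", "threat"
    targets_individual: bool      # aimed at a specific person?
    repeat_offender: bool         # prior violations on record?
    includes_personal_info: bool  # address, phone number, etc.


def playbook_action(incident: Incident) -> str:
    """Walk a small decision tree and return the recommended action."""
    # Threats and exposed personal information always take the crisis path.
    if incident.category == "threat" or incident.includes_personal_info:
        return "remove, suspend, preserve evidence, escalate to crisis team"
    if incident.category == "harassment":
        # Repeated or targeted harassment needs a senior moderator's judgment.
        if incident.repeat_offender or incident.targets_individual:
            return "remove and escalate to senior moderator"
        return "hide and warn"
    if incident.category == "spam":
        return "hide"
    # Anything the tree does not cover is logged for a human to classify.
    return "log for manual review"
```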

Once rules are defined, the next step is to create escalation procedures tailored to different levels of risk.

Creating Escalation Procedures for Different Risk Levels

Not all violations require the same level of urgency. To handle incidents effectively, categorize them into three risk levels, each with specific criteria, response times, and assigned responsibilities:

- Low-risk cases: These include isolated incidents like spam. They can be addressed within 1–4 hours by automation tools or junior moderators. Typical actions might involve hiding the content or issuing a warning.

- Medium-risk cases: Examples include repeated harassment or the use of slurs without direct threats. These require attention within 30 minutes to 1 hour, typically handled by senior moderators who can manage repeat offenses.

- High-risk cases: These involve serious issues like threats, self-harm, sexual harassment, or doxxing. Such cases demand immediate action. Senior moderators or a dedicated crisis team should remove the content, suspend or ban the user, and preserve evidence such as screenshots and logs.
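The tiers above can be captured in a small configuration so every flag gets an owner and a deadline. This is a minimal Python sketch under stated assumptions: the category labels are hypothetical, each deadline uses the upper bound of the tier's window, and "immediate" for high risk is modeled as five minutes, matching the two-to-five-minute target discussed under performance metrics.

```python
from datetime import datetime, timedelta, timezone
from enum import Enum


class Tier(Enum):
    LOW = "low"
    MEDIUM = "medium"
    HIGH = "high"


# Upper bound of each tier's response window and the team that owns it.
# "Immediate" for high risk is modeled as 5 minutes (an assumption).
TIER_POLICY = {
    Tier.LOW: (timedelta(hours=4), "automation / junior moderators"),
    Tier.MEDIUM: (timedelta(hours=1), "senior moderators"),
    Tier.HIGH: (timedelta(minutes=5), "senior moderators / crisis team"),
}

# Hypothetical category labels mapped to the tiers described above.
CATEGORY_TIER = {
    "spam": Tier.LOW,
    "repeated_harassment": Tier.MEDIUM,
    "slur_without_threat": Tier.MEDIUM,
    "threat": Tier.HIGH,
    "self_harm": Tier.HIGH,
    "sexual_harassment": Tier.HIGH,
    "doxxing": Tier.HIGH,
}


def assign(category: str, flagged_at: datetime) -> dict:
    """Return the tier, owning team, and response deadline for a flag."""
    # Unknown categories default to MEDIUM so a person looks at them.
    tier = CATEGORY_TIER.get(category, Tier.MEDIUM)
    window, owner = TIER_POLICY[tier]
    return {"tier": tier.value, "owner": owner, "respond_by": flagged_at + window}
```

For example, `assign("doxxing", datetime.now(timezone.utc))` returns the high tier with a deadline five minutes out. Defaulting unknown categories to medium is a deliberate choice in this sketch: it routes unfamiliar content to a person instead of silently treating it as low risk.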

An escalation matrix should clearly outline:

- Who to contact: Use designated Slack channels, ticketing systems, or other communication tools.

- What information to include: Provide user IDs, timestamps, and content excerpts.

- When to involve legal teams or law enforcement: Escalate high-risk situations as needed.
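An escalation matrix is easier to follow when the required information is enforced at the moment of escalation. The sketch below is a hypothetical ticket schema in Python; the field names and the `#mod-escalations` channel are assumptions, and defaulting high-risk tickets to notify legal is one possible policy, not a requirement.

```python
from dataclasses import dataclass
from datetime import datetime

VALID_TIERS = {"low", "medium", "high"}


@dataclass
class EscalationTicket:
    """Minimum payload for an escalation message (hypothetical schema)."""
    user_id: str
    timestamp: datetime
    excerpt: str
    tier: str
    channel: str = "#mod-escalations"  # hypothetical Slack channel name
    notify_legal: bool = False

    def __post_init__(self) -> None:
        # Refuse tickets that omit the information responders need.
        if not self.user_id or not self.excerpt:
            raise ValueError("user_id and excerpt are required")
        if self.tier not in VALID_TIERS:
            raise ValueError(f"unknown tier: {self.tier}")
        # High-risk tickets loop in legal by default; whether to contact
        # law enforcement remains a human decision.
        if self.tier == "high":
            self.notify_legal = True

    def summary(self) -> str:
        """One-line message suitable for a chat channel or ticket title."""
        return (f"[{self.tier.upper()}] user={self.user_id} "
                f"at {self.timestamp.isoformat()}: {self.excerpt[:80]}")
```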

To ensure readiness, conduct regular drills to test these procedures. High-stakes events, such as live sports or breaking news, leave no room for uncertainty about roles and responsibilities. Having a well-practiced system in place ensures that your team can act decisively when it matters most.

Building a Multi-Layer Moderation System

Building on these safety rules and escalation procedures, a multi-layer moderation system manages content in real time. The most effective setups combine three layers: automated AI filtering, human review, and user reporting. Each plays a specific role. AI rapidly processes large volumes of content to catch clear violations like slurs or spam. Human moderators handle nuanced cases that require judgment and context. User reporting acts as a safety net, flagging issues the first two layers miss and surfacing problems specific to your community. Together, these layers feed into the escalation procedures to provide thorough oversight during live interactions.

This approach strikes a balance between speed and accuracy. For instance, major platforms rely on both AI tools and tens of thousands of human moderators because neither can handle every aspect of moderation alone. AI manages the overwhelming volume of content, while human moderators address complex cases, reducing false positives and accounting for cultural subtleties. This ensures moderation is both efficient and thoughtful.

Using AI for Automated Content Filtering

AI serves as the first line of defense, scanning comments, messages, and posts in real time. These systems analyze text for harmful patterns, such as slurs, threats, or spam links. They can also flag suspicious behaviors, like coordinated mass commenting, that may signal larger issues. Beyond text, AI reviews images and videos for explicit content, violence, or other graphic material - all while operating 24/7 and processing thousands of items per second.

To maximize efficiency, configure AI filters to hide or quarantine high-confidence violations automatically. For instance, during a high-profile sports event, AI can filter out thousands of toxic comments in real time, allowing your team to focus on more complex cases. A great example of this is Guardii’s solution, which auto-hides toxic comments and detects direct message threats in over 40 languages on Instagram, all while aligning with Meta’s compliance standards.
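Confidence-based routing is one common way to implement "hide high-confidence violations automatically." This sketch assumes a classifier that returns a violation score between 0 and 1; the 0.95 and 0.60 thresholds are placeholders to tune against your own data, not recommended values.

```python
def route(score: float, hide_at: float = 0.95, review_at: float = 0.60) -> str:
    """Route one item by a classifier's violation score in [0, 1]."""
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be between 0 and 1")
    if score >= hide_at:
        # High confidence: hide now, but keep the item for review or appeal.
        return "auto_hide"
    if score >= review_at:
        # Gray zone (sarcasm, coded language, missing context): send to a person.
        return "human_review"
    return "publish"
```

Raising `review_at` sends less to humans at the cost of more missed violations; the gray band between the two thresholds is where the limitations discussed next matter most.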

However, AI has its limitations. It often struggles with sarcasm, cultural nuances, and evolving coded language. For example, a seemingly polite message could carry an underlying tone of harassment. In such cases, human moderators are essential to provide the context and judgment AI lacks.

Adding Human Review for Context-Dependent Decisions

Human moderators play a critical role in interpreting context and handling the gray areas that automated systems can’t address. They review flagged content, using tools like conversation threads, user histories, and records of past violations to ensure fair and balanced decisions. For example, a comment that appears aggressive in isolation might be part of a consensual debate, while a seemingly benign remark could be part of a pattern of targeted harassment.

The right tools make this process more effective. Moderation dashboards should display key information such as user reputation scores, conversation histories, timestamps, and clear escalation workflows. Decision trees and response templates help maintain consistency across the team. Additionally, supporting moderators with regular breaks and mental health resources is crucial to sustain their well-being, enabling them to focus on the most challenging cases.

Setting Up User Reporting and Feedback Systems

User reporting provides a crucial third layer of moderation, helping to catch issues that may evade both AI and human reviewers. Every comment or message should include an accessible "Report" option with clear categories like harassment, spam, threats, or explicit content. To minimize frivolous reports, require users to select a reason when submitting their concerns.

Once reports are submitted, triage them with AI. Obvious cases, such as spam, can be resolved automatically, while serious concerns like threats or harassment are escalated to human moderators. This keeps the moderation team from being overwhelmed by minor issues while critical matters receive immediate attention. Telling users what happened to their reports builds trust and shows that their concerns are taken seriously.
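The triage step might look like the following Python sketch. It assumes each report arrives as a `(content_id, reason)` pair using the reason categories above; the queue names and the choice to sort urgent items by report count are assumptions for illustration.

```python
from collections import defaultdict

URGENT_REASONS = {"threats", "harassment"}
AUTOMATED_REASONS = {"spam"}


def triage(reports: list[tuple[str, str]]) -> dict[str, list[str]]:
    """Group (content_id, reason) reports into review queues."""
    reasons_by_item: dict[str, list[str]] = defaultdict(list)
    for content_id, reason in reports:
        reasons_by_item[content_id].append(reason)

    queues: dict[str, list[str]] = {"urgent": [], "automated": [], "standard": []}
    for content_id, reasons in reasons_by_item.items():
        kinds = set(reasons)
        if kinds & URGENT_REASONS:
            queues["urgent"].append(content_id)      # goes to human moderators
        elif kinds <= AUTOMATED_REASONS:
            queues["automated"].append(content_id)   # e.g. pure spam
        else:
            queues["standard"].append(content_id)
    # Most-reported items first inside the urgent queue.
    queues["urgent"].sort(key=lambda cid: len(reasons_by_item[cid]), reverse=True)
    return queues
```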

Guardii supports this process with features like priority and quarantine queues, evidence packs, and audit logs. These tools allow safety and legal teams to document decisions in a consistent and defensible manner. Integrating all three layers gives teams a solid foundation for handling real-time crises.

Context-Aware Moderation Techniques

Context-aware moderation tailors its approach based on specific situations rather than applying uniform rules across the board. By adjusting thresholds and policies to fit the event type, audience, language, and risk level, this method reduces false positives while effectively identifying harmful content. For example, playful banter between adult sports fans during a live game might be acceptable, but the same language in a youth-focused community or during a sensitive event would be inappropriate. Creating distinct rule sets for different contexts allows platforms to maintain safety without unnecessarily stifling genuine interactions. Let’s dive into how moderation rules can be fine-tuned for specific events and audiences.

Many platforms have moved from static to dynamic moderation rules. They often prepare "event modes" for high-risk scenarios like live sports, product launches, or breaking news. These modes temporarily heighten sensitivity to slurs, threats, and harassment. Once the event ends, settings return to normal, which avoids over-moderation and keeps the system responsive to real-world conditions.

Adjusting Rules for Specific Events and Audiences

Different events and audiences come with unique challenges, so moderation policies should reflect those differences. Start by creating risk profiles for each type of event. A live championship game, for instance, involves high emotional stakes, heavy activity, and significant exposure for brands, requiring stricter filters for hate speech, threats, and abuse during the event. On the other hand, a routine Q&A session might benefit from more relaxed settings to encourage open participation.

Platforms can implement event-specific rule sets activated within designated timeframes. For example, sports brands often increase moderation around referees and athletes on game day by temporarily blocking specific keywords and expediting review processes. Similarly, retail brands might enhance spam and scam filters ahead of major sales events, setting up dedicated dashboards to help moderators focus on critical streams.

Audience segmentation is another vital aspect. Communities involving minors demand stricter rules for nudity, sexual content, grooming, and unsolicited contact. Public figures like athletes, influencers, and journalists may need extra protection against large-scale pile-ons, hate speech, and doxxing. In such cases, concerning content can be flagged for priority review and faster escalation. Vulnerable groups, such as users discussing mental health, may require sensitive handling, where flagged content is routed for supportive intervention instead of immediate removal. Many platforms categorize users into tiers - such as "general", "protected", or "VIP" - and apply tailored rules accordingly. For instance, Guardii offers customized protections for athletes, creators, and families on Instagram by automatically hiding toxic comments and prioritizing threats for expert review across multiple languages.
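One way to combine audience tiers with event modes is to derive the auto-hide threshold from both. In this hypothetical Python sketch, a lower threshold means stricter moderation; every number, including the floor, is an assumption to be calibrated, and the tier keys follow the "general", "protected", and "VIP" labels mentioned above.

```python
# Hypothetical values: a lower threshold means content is hidden at a lower
# classifier score, i.e. moderation is stricter.
BASE_HIDE_THRESHOLD = 0.90
AUDIENCE_ADJUSTMENT = {"general": 0.00, "protected": -0.15, "vip": -0.10}
EVENT_MODE_ADJUSTMENT = -0.05
MIN_THRESHOLD = 0.50  # never hide on weak signals alone


def hide_threshold(audience_tier: str, event_mode: bool) -> float:
    """Auto-hide cutoff for an audience tier, optionally in event mode."""
    threshold = BASE_HIDE_THRESHOLD + AUDIENCE_ADJUSTMENT[audience_tier]
    if event_mode:
        threshold += EVENT_MODE_ADJUSTMENT
    # Round to avoid float noise, then clamp at the floor.
    return max(MIN_THRESHOLD, round(threshold, 2))
```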

Handling Multilingual Content

Moderation becomes even more complex when dealing with multilingual content, as linguistic nuances vary widely across regions. A joke in one language might be deeply offensive in another. This is where region-specific AI models come into play, trained to understand local expressions, creative spellings, and coded threats that generic keyword lists might overlook.

The process starts with language detection, ensuring that content is assessed by the appropriate model - for instance, Spanish posts are evaluated using a Spanish toxicity model rather than an English one. For sensitive topics like self-harm or terrorism, stricter automated rules might be applied across all languages, even if it means some over-blocking for safety. Additionally, platforms often establish language-specific queues staffed by moderators fluent in the relevant language to handle nuanced cases effectively.
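A routing step after language detection could look like this Python sketch. It assumes detection has already produced an ISO 639-1 code; the model and queue names are hypothetical, and unsupported languages fall back to a shared multilingual model and queue.

```python
from typing import Optional

# Hypothetical model and queue names. Language detection is assumed to have
# already produced an ISO 639-1 code such as "es".
LANGUAGE_MODELS = {"en": "toxicity-en", "es": "toxicity-es", "pt": "toxicity-pt"}
FALLBACK_MODEL = "toxicity-multilingual"
STRICT_TOPICS = {"self_harm", "terrorism"}


def route_by_language(lang: str, topic: Optional[str] = None) -> dict:
    """Pick the scoring model and review queue for a detected language."""
    supported = lang in LANGUAGE_MODELS
    return {
        "model": LANGUAGE_MODELS[lang] if supported else FALLBACK_MODEL,
        # Fluent reviewers per language; unsupported languages share a queue.
        "queue": f"review-{lang}" if supported else "review-multilingual",
        # Sensitive topics get strict rules in every language,
        # accepting some over-blocking.
        "strict_rules": topic in STRICT_TOPICS,
    }
```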

Updating Rules Based on New Data

Moderation rules should evolve continuously. An effective improvement cycle draws on incident logs, user reports, moderator feedback, audits, and external factors like legal updates. Metrics such as flag-to-action ratios, reversal rates, and resolution times can show whether rules are too lenient or too strict; a high reversal rate, for example, may point to over-enforcement. Meanwhile, qualitative reviews by senior moderators can identify emerging abuse patterns and tactics. Feeding these findings back into rules, keyword lists, and training keeps moderation policies relevant and effective.

In high-risk environments, critical rules and keyword lists might require weekly or monthly reviews, while broader policies could be revisited quarterly or biannually. During rapidly changing situations, daily reviews might even be necessary. After major events, structured post-mortems provide valuable insights into what harmful content appeared, what was missed, and where over-moderation occurred. By categorizing incidents - such as player abuse, self-harm content, or misinformation - teams can pinpoint language patterns and refine policies. This might involve adding new slurs or coded phrases, adjusting severity scores, fine-tuning rules for specific groups, or redefining contextual exceptions. Maintaining a change log ensures moderators understand what’s been updated, why, and how it affects their work.


Real-Time Monitoring and Crisis Management

Real-time monitoring shifts moderation from merely reacting to problems to actively preventing them. When content floods in during live events, product launches, or breaking news, it’s crucial to have systems in place that immediately flag the most harmful material. This approach ensures moderators focus on what matters most. The difference between containing an issue and facing a full-blown crisis often hinges on how quickly high-risk content is identified, escalated, and addressed. Let’s dive into how to design monitoring systems that detect threats early and respond effectively when challenges arise.

Setting Up Monitoring Dashboards

A well-designed dashboard organizes content by severity levels, user reputation signals, and volume trends. Severity levels are determined by AI tools that flag harmful content, such as hate speech, explicit images, or self-harm language. These are often categorized as "Critical", "High", "Medium", or "Low." User reputation adds another layer of context - new accounts posting rapidly are more suspicious than established, verified users. Public figures like athletes, influencers, and journalists often face targeted risks, requiring closer monitoring.
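A dashboard sort order that combines severity with reputation signals might be computed as follows. This Python sketch is illustrative: the weights, the seven-day and 20-posts-per-hour cutoffs, and the field names are assumptions.

```python
from dataclasses import dataclass

SEVERITY_WEIGHT = {"critical": 100, "high": 60, "medium": 30, "low": 10}


@dataclass
class FlaggedItem:
    item_id: str
    severity: str            # label assigned by the AI classifier
    account_age_days: int
    posts_last_hour: int
    targets_public_figure: bool


def priority(item: FlaggedItem) -> int:
    """Higher score = reviewed sooner. All weights are hypothetical."""
    score = SEVERITY_WEIGHT[item.severity]
    # New accounts posting rapidly are treated as more suspicious.
    if item.account_age_days < 7 and item.posts_last_hour > 20:
        score += 15
    # Athletes, influencers, and journalists face targeted risk.
    if item.targets_public_figure:
        score += 10
    return score


def dashboard_order(items: list[FlaggedItem]) -> list[str]:
    """Item ids in the order a moderator should see them."""
    return [i.item_id for i in sorted(items, key=priority, reverse=True)]
```

With these weights the bonuses (at most 25 points) never lift a medium item above a high one or a high item above a critical one, though a low-severity item with both signals can outrank a plain medium one.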

Dashboards should also highlight sudden spikes in abusive comments or direct messages aimed at a specific individual or campaign. These patterns often signal coordinated harassment or brigading. Breaking down content by channel (such as Instagram comments versus direct messages, or live event feeds versus routine posts) helps teams focus on the most vulnerable areas. For instance, during U.S. prime-time broadcasts or playoff games, event-specific filters can help moderators zero in on emerging threats.
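Spike detection can start as simply as comparing the current window with the target's recent baseline. The Python sketch below uses a mean-plus-k-standard-deviations rule, a swapped-in statistical technique rather than one this article prescribes; the window length, `k`, and the minimum count are assumptions.

```python
from statistics import mean, pstdev


def is_spike(history: list[int], current: int, k: float = 3.0, min_count: int = 20) -> bool:
    """Flag a window whose abusive-message count far exceeds the baseline.

    history: counts for one target (a person or campaign) in previous windows.
    """
    if current < min_count:
        return False  # ignore small absolute numbers
    if len(history) < 2:
        return True   # no usable baseline yet; let a human decide
    baseline = mean(history)
    # Floor the spread at 1 so a perfectly flat history is not hypersensitive.
    spread = max(pstdev(history), 1.0)
    return current > baseline + k * spread
```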

Real-time alerts are essential for high-severity issues, like explicit self-harm language, credible threats, or doxxing attempts. Tools like Guardii streamline this process by using AI to assign toxicity scores, detect threatening DMs, and prioritize urgent cases in a central dashboard. For example, Guardii’s 2025 Parent Dashboard Preview showcased metrics such as "7 Threats Blocked" and a "100% Safety Score", offering a clear snapshot of high-risk content alongside actionable alerts. These tools allow safety teams to act swiftly, especially when concerning content like grooming language or organized harassment is detected.

By combining real-time data with automated filters and human oversight, organizations can create a dynamic moderation system that adapts to evolving threats.

Procedures for Handling Crisis Situations

Effective crisis management starts with defining what constitutes a crisis for your organization. This could include coordinated harassment campaigns, credible threats of violence, viral misinformation, or large-scale doxxing incidents. An escalation matrix is key - it maps out severity levels (e.g., Tier 1 through 3) and assigns specific actions to teams like moderation, legal, PR, and security.

- Coordinated attacks or brigading: Dashboards can detect a sudden surge in hostile comments or DMs, often marked by volume spikes, repeated phrasing, or identical hashtags. Immediate steps include tightening content filters, enforcing rate limits, and auto-hiding abusive material. For cases involving threats or doxxing, escalate to legal and communications teams. Pre-prepared brand responses help maintain consistent messaging.

- Self-harm and suicide threats: These require immediate escalation to a designated safety lead. Respond to self-harm posts or messages with supportive, resource-linked replies that direct individuals to crisis hotlines like the 988 Suicide & Crisis Lifeline in the U.S. Avoid punitive actions and follow platform-specific protocols to alert emergency services when necessary. Research, such as Guardii’s 2024 Child Safety Report, underscores the importance of early intervention to prevent escalation.

- Doxxing incidents: When personal information like home addresses, phone numbers, or financial details is exposed, act quickly to remove or hide the content. Document incidents with screenshots and logs, then escalate to legal and security teams. For public figures under your organization’s care, coordinate with internal security and HR to provide protection and support. Keeping detailed records, including timestamps and actions taken, is essential for audits and policy updates.
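The "repeated phrasing" signal for brigading can be approximated by counting how many distinct accounts post the same normalized message within a window. This Python sketch is a simplification under stated assumptions: it only catches exact matches after lowercasing and stripping punctuation (hashtags are kept), and the five-account cutoff is a placeholder.

```python
import re
from collections import defaultdict


def canonical_text(text: str) -> str:
    """Lowercase, drop punctuation except '#', and collapse whitespace."""
    text = re.sub(r"[^\w#\s]", "", text.lower())
    return re.sub(r"\s+", " ", text).strip()


def repeated_phrases(messages, min_accounts: int = 5) -> dict[str, int]:
    """messages: iterable of (account_id, text) from one time window.

    Returns each normalized message posted by at least `min_accounts`
    distinct accounts, mapped to the number of accounts.
    """
    accounts_by_phrase: dict[str, set[str]] = defaultdict(set)
    for account_id, text in messages:
        accounts_by_phrase[canonical_text(text)].add(account_id)
    return {phrase: len(ids) for phrase, ids in accounts_by_phrase.items()
            if len(ids) >= min_accounts}
```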

Protecting Moderator Mental Health

Crisis management isn’t just about addressing external threats - it’s also about safeguarding the mental health of moderators. Those exposed to graphic violence, abuse, or coordinated hate campaigns can suffer serious psychological harm without proper protections in place.

Shift rotations are vital to prevent burnout, allowing moderators to alternate between high-stress and lower-stress tasks. Mandatory breaks, both short and long, should be built into schedules to ensure moderators have time to decompress, especially after reviewing distressing content.

Technology can also help reduce exposure. Features like automatic blurring of graphic images, click-to-reveal options for sensitive content, and audio muting minimize direct interaction with harmful material. Access to mental health resources, such as counseling services, employee assistance programs, and regular debrief sessions, should be standard practice. Training on coping strategies and resilience helps normalize seeking support and requesting task reassignment when needed.

Encouraging a system where moderators can escalate particularly disturbing content for peer review fosters a supportive environment. This not only protects individual well-being but also ensures better decision-making in moderation efforts.

Tracking Performance and Making Improvements

After establishing real-time monitoring and crisis management strategies, the next step is to focus on tracking performance. This is crucial for fine-tuning both automated and human moderation efforts. Without clear benchmarks, it's impossible to determine whether your filters are catching actual threats or simply overwhelming moderators with unnecessary alerts. By monitoring the right metrics, organizations can balance speed, accuracy, and user experience while staying prepared for emerging challenges.

Key Performance Metrics

Performance tracking can be broken down into three main categories: operational speed, decision quality, and community health.

- Operational speed: Measure how quickly your team responds to flagged content, from the moment it's posted to when action is taken. During urgent situations, such as live sports or breaking news, aim to act on severe threats like doxxing or credible threats of violence within two to five minutes.

- Decision quality: Assess how effectively your system differentiates between legitimate violations and acceptable content. Key indicators include flag accuracy (correctly flagged violations vs. total flagged instances), false positive rates (legitimate content mistakenly flagged), and false negative rates (violations that go unnoticed). Additionally, track the proportion of content resolved by AI versus cases escalated to human moderators - this helps evaluate whether automation is reducing workload or creating bottlenecks.

- Community health: Examine metrics that tie moderation efforts to broader outcomes. These include user report rates (reports per 1,000 posts), repeat offender rates, and sentiment trends over time. For example, if negative sentiment spikes during high-profile events like playoff games, consider tightening filters or increasing staffing during those periods. For organizations safeguarding public figures, such as athletes or journalists, metrics like reductions in targeted harassment and brand safety outcomes are essential, as they directly impact reputation and partnerships.
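The metrics in this list reduce to simple ratios. The Python sketch below shows one way to compute them; the function names are hypothetical, the decision-quality counts are assumed to come from audited samples where the true label is known, and the 300-second target reflects the upper end of the two-to-five-minute goal.

```python
def _ratio(numerator: float, denominator: float) -> float:
    """Safe division that returns 0.0 when there is no data."""
    return numerator / denominator if denominator else 0.0


def decision_quality(tp: int, fp: int, fn: int, tn: int) -> dict[str, float]:
    """Counts come from audited samples where the true label is known."""
    return {
        "flag_accuracy": _ratio(tp, tp + fp),        # flagged items that were violations
        "false_positive_rate": _ratio(fp, fp + tn),  # legitimate content that got flagged
        "false_negative_rate": _ratio(fn, fn + tp),  # violations that were missed
    }


def share_within_target(seconds_to_action: list[float], target_seconds: float = 300) -> float:
    """Share of actions taken within the target (300 s = five minutes)."""
    hits = sum(1 for s in seconds_to_action if s <= target_seconds)
    return _ratio(hits, len(seconds_to_action))


def ai_resolution_share(resolved_by_ai: int, total_resolved: int) -> float:
    """Proportion of cases closed by automation rather than escalated."""
    return _ratio(resolved_by_ai, total_resolved)


def reports_per_1000_posts(reports: int, posts: int) -> float:
    return _ratio(1000 * reports, posts)
```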

Once these metrics are in place, regular audits can ensure moderation decisions align with policies and remain consistent.

Auditing Moderation Decisions

Speed is important, but consistency is just as critical. Regular audits help ensure that policies are applied uniformly and that AI systems align with human judgment in nuanced situations. A good starting point is to randomly sample 1–5% of daily moderation decisions across categories like hate speech, self-harm, spam, and direct messages. Supplement this with targeted reviews of content that often falls into gray areas - sarcasm, reclaimed slurs, political commentary, sports-related trash talk, or coded language designed to bypass filters. Senior moderators or quality assurance teams can re-evaluate these cases against written policies to identify inconsistencies.
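A stratified sample keeps low-volume categories such as DMs from disappearing in a daily audit. This Python sketch assumes each decision is a dict with a `"category"` key; the 2% rate sits inside the 1–5% range above, and the one-item-per-category minimum is an assumption.

```python
import random
from collections import defaultdict


def audit_sample(decisions: list[dict], rate: float = 0.02, seed=None,
                 min_per_category: int = 1) -> list[dict]:
    """Draw a stratified random sample of moderation decisions.

    Sampling per category keeps small categories (e.g. DMs) from being
    skipped entirely.
    """
    rng = random.Random(seed)  # a fixed seed makes the audit reproducible
    by_category: dict[str, list[dict]] = defaultdict(list)
    for decision in decisions:
        by_category[decision["category"]].append(decision)

    sample: list[dict] = []
    for items in by_category.values():
        k = min(len(items), max(min_per_category, round(len(items) * rate)))
        sample.extend(rng.sample(items, k))  # without replacement
    return sample
```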

Weekly calibration sessions are another effective tool. During these sessions, moderators independently review 20–50 borderline cases, share their decisions, and discuss differences as a group. Each decision should be explicitly tied to a specific section of the policy, with discussions documented in a shared log. These insights can guide updates to guidelines, keyword lists, and training programs - especially for new slang, event-specific phrases, or harassment tactics targeting public figures.
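Calibration results can be summarized before each session so discussion time goes to the most divided cases. This Python sketch assumes votes are collected as `case_id -> {moderator: decision}`; it reports simple majority agreement rather than a formal inter-rater statistic.

```python
from collections import Counter


def calibration_report(votes: dict[str, dict[str, str]]) -> dict[str, dict]:
    """votes maps case_id -> {moderator: decision} for each borderline case."""
    report = {}
    for case_id, by_moderator in votes.items():
        counts = Counter(by_moderator.values())
        majority, majority_count = counts.most_common(1)[0]
        report[case_id] = {
            "majority": majority,
            "agreement": majority_count / len(by_moderator),
            "split": len(counts) > 1,  # at least two different decisions
        }
    return report


def cases_to_discuss(report: dict[str, dict]) -> list[str]:
    """Split cases, least agreement first, as the session agenda."""
    split = [cid for cid, r in report.items() if r["split"]]
    return sorted(split, key=lambda cid: report[cid]["agreement"])
```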

Don’t overlook the appeals process. Track metrics like appeal rate, appeal overturn rate, and average resolution time. A high overturn rate may indicate over-enforcement or unclear rules. To improve fairness, route appeals to a separate review team and require reviewers to examine the full context, including media and user history. For platforms using AI-driven moderation tools like Guardii’s Instagram comment moderation, implement "second-look" workflows to quickly restore content mistakenly hidden, feeding these cases back into AI model updates.
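The three appeal metrics could be computed like this. The record shape (`opened`, `closed`, `overturned`) is a hypothetical assumption, and appeals that are still open are excluded from the overturn rate and resolution time.

```python
from datetime import datetime


def _hours_between(opened: datetime, closed: datetime) -> float:
    return (closed - opened).total_seconds() / 3600


def appeal_metrics(actions_taken: int, appeals: list[dict]) -> dict[str, float]:
    """appeals: dicts with "opened"/"closed" datetimes and "overturned" (bool)."""
    closed = [a for a in appeals if a.get("closed") is not None]
    hours = [_hours_between(a["opened"], a["closed"]) for a in closed]
    return {
        "appeal_rate": len(appeals) / actions_taken if actions_taken else 0.0,
        # A high overturn rate can signal over-enforcement or unclear rules.
        "overturn_rate": sum(a["overturned"] for a in closed) / len(closed) if closed else 0.0,
        "avg_resolution_hours": sum(hours) / len(hours) if hours else 0.0,
    }
```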

By combining robust audits with a focus on appeals, moderation teams can maintain consistency while staying adaptable to new challenges.

Adapting to New Threats and Requirements

Threats evolve constantly, with bad actors developing new tactics like coded slurs, emoji combinations, and formatting tricks (e.g., adding spaces or substituting numbers for letters) to bypass filters. To stay ahead, maintain a dynamic "new patterns" registry where moderators can log suspicious terms and examples. Use this registry to regularly update keyword filters and retrain AI models.
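Before registry terms are matched, a normalization pass can undo some of the formatting tricks described above. This Python sketch handles only a few assumed substitutions (common digit and symbol swaps, and words spelled out with spaces, dots, dashes, or asterisks). It matches whole tokens rather than substrings so innocent words that merely contain a term are not flagged.

```python
import re

# Common character substitutions; extend from the "new patterns" registry.
LEET_MAP = str.maketrans({"3": "e", "1": "i", "0": "o", "4": "a",
                          "5": "s", "$": "s", "@": "a"})
# Three or more single characters separated by spaces, dots, dashes, or '*'.
SPACED_OUT = re.compile(r"\b(?:\w[\s.\-*]+){2,}\w\b")


def normalize(text: str) -> str:
    """Undo simple evasion tricks; the result is for matching only."""
    text = text.lower().translate(LEET_MAP)
    # Join spelled-out runs like "s e x" or "s.e.x" into one token.
    return SPACED_OUT.sub(lambda m: re.sub(r"[\s.\-*]+", "", m.group(0)), text)


def registry_hits(text: str, registry: set[str]) -> set[str]:
    """Return registry terms that appear as whole tokens after normalization."""
    return set(re.findall(r"\w+", normalize(text))) & registry
```

Because the normalized text is used only for matching, mangling legitimate numbers (for example, "100" becoming "ioo") does no harm here.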

Pay close attention to false alarms and override rates. If moderators frequently reverse decisions, it may be time to adjust thresholds or refine keyword lists. For high-risk areas like sports, politics, or celebrity harassment, consider leveraging external intelligence from industry groups, NGOs, or U.S. law enforcement to anticipate emerging threats. Test your policies against realistic scenarios, such as coordinated harassment campaigns, doxxing attempts, or mass-targeted slurs.

Regulatory requirements also shift frequently. Assign a Legal or Trust & Safety lead to monitor updates to U.S. laws, such as state privacy regulations, COPPA, and platform-specific policies from companies like Meta, TikTok, and X. Translate these changes into actionable moderation rules, data-retention practices, and user-disclosure procedures. Ensure that all moderation-related data - such as logs, screenshots, and evidence - is securely stored, retained only as long as necessary, and accessible for lawful requests or internal reviews. Conduct quarterly audits with Legal and Compliance teams to confirm that your procedures for handling threats, child safety, and self-harm align with current standards.
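Retention rules can be enforced with a periodic purge that skips anything tied to a pending request. The retention periods and the `hold` flag in this Python sketch are assumptions; actual periods should come from your Legal and Compliance teams.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional

# Hypothetical retention periods per record type; set these with Legal.
RETENTION = {
    "log": timedelta(days=365),
    "screenshot": timedelta(days=180),
}


def records_to_purge(records: list[dict], now: Optional[datetime] = None) -> list[str]:
    """Return ids of records past retention that are not on hold.

    Each record has "id", "kind", "created" (timezone-aware datetime) and an
    optional "hold" flag set while a lawful request or review is pending.
    """
    now = now or datetime.now(timezone.utc)
    return [
        r["id"] for r in records
        if not r.get("hold") and now - r["created"] > RETENTION[r["kind"]]
    ]
```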

Tools like Guardii show how integrated systems can streamline these processes. Guardii provides audit logs and evidence packs and moderates content in over 40 languages, helping moderation teams connect their performance to risk management and compliance goals.

How Guardii Handles Real-Time Moderation

Guardii takes on the challenge of real-time moderation with a focus on tailored safety protocols for high-risk accounts like athletes, sports clubs, influencers, journalists, and public figures. These accounts often face a surge of abuse and legal risks, particularly during high-profile events. Unlike standard profanity filters, Guardii uses AI models specifically trained to identify harassment, hate speech, threats, and sexual abuse that are common in sports and celebrity spaces. This includes slurs tied to performance, betting-related abuse, racist attacks on players, and threats targeting families. Guardii offers features such as priority queues, quarantine systems, audit logs, and legal-ready documentation, empowering teams to show accountability to sponsors, leagues, and regulators.

These capabilities are delivered through specialized tools for filtering comments, analyzing direct messages (DMs), and managing evidence.

Instagram Comment Auto-Hide and DM Threat Detection

Guardii's auto-hide feature scans Instagram comments in real time, flagging toxic language, hate speech, threats, self-harm encouragement, and spam. When the AI determines a comment crosses a configured threshold, the comment is auto-hidden but remains accessible for review, evidence, or appeal. Teams can adjust rule sets to the situation: stricter filters for match days, lighter settings for community campaigns, or maximum control during high-stakes events like playoffs. For example, during critical U.S. events, stricter filters can be applied for a three-to-four-hour window around kickoff and then eased once activity returns to normal.

In DMs, Guardii identifies harassment, coercion, grooming, unsolicited explicit content, and cyberflashing. Messages are classified by risk level - low (insults), medium (persistent harassment), or high (credible threats or explicit material involving minors) - and directed to Priority or Quarantine queues. This setup allows welfare, security, or legal teams to act according to predefined protocols, such as blocking users, reporting to platforms, escalating to law enforcement, or simply preserving the evidence. Clubs must establish clear escalation rules, like: "Any DM mentioning weapons or doxxing → immediate security review" or "Sexualized content involving under-18 athletes → mandatory safeguarding escalation." These rules should align with league or school district policies to ensure consistency and compliance.
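Escalation rules like the ones quoted above can be expressed as ordered predicates. In this Python sketch, the `DirectMessage` fields, the weapon keyword pattern, and the fallback mapping from risk level to queue are all hypothetical and do not describe Guardii's configuration; a real deployment would rely on classifier outputs rather than a keyword list alone.

```python
import re
from dataclasses import dataclass


@dataclass
class DirectMessage:
    text: str
    risk: str                             # "low" | "medium" | "high" from the classifier
    sexual_content: bool = False
    recipient_under_18: bool = False
    contains_personal_info: bool = False  # e.g. a home address (doxxing)


WEAPON_PATTERN = re.compile(r"\b(?:gun|knife|knives|weapon)s?\b", re.IGNORECASE)

# Club-defined rules, checked in order; the first match wins.
RULES = [
    (lambda dm: bool(WEAPON_PATTERN.search(dm.text)) or dm.contains_personal_info,
     "priority", "immediate security review"),
    (lambda dm: dm.sexual_content and dm.recipient_under_18,
     "quarantine", "mandatory safeguarding escalation"),
]

# Fallback by risk level when no rule matches (assumed mapping).
DEFAULT_ROUTE = {
    "high": ("priority", "security/welfare review"),
    "medium": ("quarantine", "moderator review"),
    "low": ("log", "preserve evidence"),
}


def route_dm(dm: DirectMessage) -> tuple[str, str]:
    """Return (queue, action) for a direct message."""
    for predicate, queue, action in RULES:
        if predicate(dm):
            return queue, action
    return DEFAULT_ROUTE[dm.risk]
```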

All of this feeds into Guardii's evidence management system, creating a seamless moderation process.

Evidence Packs and Audit Logs

Guardii generates detailed evidence packs that include the original content, metadata (like timestamps and user IDs), classification labels, and a record of actions taken - who reviewed it, when it was hidden or escalated, and why. These packs can be exported for internal reviews, law enforcement investigations, or sponsor audits, showcasing proactive measures to protect athlete mental health and brand integrity. Additionally, audit logs provide a continuous record of moderation decisions, helping compliance officers check for bias, confirm consistent application of policies, and demonstrate to regulators or partners that the organization maintains strong trust-and-safety protocols.
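An evidence pack of this kind maps naturally onto a small data structure. This Python sketch is hypothetical and does not describe Guardii's export format. The SHA-256 digest over a canonical JSON body is an assumption of the sketch, included so a later reviewer can detect whether an exported record was altered.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field


@dataclass
class ActionRecord:
    actor: str    # who reviewed or acted
    action: str   # e.g. "hidden", "escalated"
    at: str       # ISO 8601 timestamp
    reason: str


@dataclass
class EvidencePack:
    content: str
    user_id: str
    posted_at: str
    labels: list[str]
    actions: list[ActionRecord] = field(default_factory=list)

    def export(self) -> str:
        """Serialize to JSON with a SHA-256 digest of the evidence body."""
        body = asdict(self)  # nested ActionRecords become plain dicts
        canonical = json.dumps(body, sort_keys=True, ensure_ascii=False)
        digest = hashlib.sha256(canonical.encode("utf-8")).hexdigest()
        return json.dumps({"evidence": body, "sha256": digest},
                          sort_keys=True, ensure_ascii=False)


def verify(exported: str) -> bool:
    """Recompute the digest to check the body was not altered after export."""
    data = json.loads(exported)
    canonical = json.dumps(data["evidence"], sort_keys=True, ensure_ascii=False)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest() == data["sha256"]
```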

Moderation Across 40+ Languages

Guardii uses multilingual natural language processing (NLP) models to moderate content in over 40 languages, addressing abusive messages that often evade English-only tools. It’s designed to handle code-switching and slang, such as mixing Spanish and English or using regional slang to insult players. It also considers regional context, recognizing that some words may be neutral in one country but offensive in another. To minimize false positives, organizations can provide language and country profiles (e.g., Portuguese for Brazil versus Portugal), collaborate with regional teams to whitelist reclaimed terms or nicknames that might appear offensive out of context, and conduct A/B testing by region during international events to fine-tune settings based on user feedback and logs.
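Two of these practices, locale allowlists and regional A/B tests, can be sketched in a few lines of Python. The allowlist entries are placeholders, and hashing the user ID to pick a stable test arm is one common approach, assumed here rather than taken from Guardii.

```python
import hashlib

# Hypothetical allowlists per (language, country): reclaimed terms or player
# nicknames that regional teams confirmed are not abusive in that market.
ALLOWLISTS: dict[tuple[str, str], set[str]] = {
    ("pt", "BR"): {"nickname_a"},
    ("pt", "PT"): set(),
}


def remaining_flags(flagged_terms: list[str], language: str, country: str) -> list[str]:
    """Drop terms that the locale profile allowlists."""
    allowed = ALLOWLISTS.get((language, country), set())
    return [term for term in flagged_terms if term.lower() not in allowed]


def ab_variant(user_id: str, region: str, experiment: str = "threshold-test") -> str:
    """Stable A/B assignment: the same user always lands in the same arm."""
    key = f"{experiment}:{region}:{user_id}".encode("utf-8")
    return "B" if hashlib.sha256(key).digest()[0] % 2 else "A"
```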

For global events like the World Cup or Olympics, Guardii enables event-specific profiles to expand language coverage and apply stricter thresholds around high-risk matches. Teams can pre-load known slurs, chants, and rival nicknames specific to opponents and regions into custom rule lists and activate time-sensitive stricter settings from two hours before to two hours after kickoff, using the local stadium’s time zone. This ensures comprehensive and adaptive moderation during critical moments.
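Anchoring the window to the stadium's time zone avoids off-by-hours errors when moderators work elsewhere. This Python sketch assumes kickoff is given as naive local wall-clock time and that the caller supplies the zone; the two-hour defaults mirror the text.

```python
from datetime import datetime, timedelta, timezone, tzinfo
from typing import Optional


def strict_window(kickoff_local: datetime, stadium_tz: tzinfo,
                  before: timedelta = timedelta(hours=2),
                  after: timedelta = timedelta(hours=2)) -> tuple[datetime, datetime]:
    """Start and end of stricter moderation around a kickoff.

    kickoff_local is a naive wall-clock time at the stadium; stadium_tz
    (e.g. a zoneinfo.ZoneInfo) supplies the UTC offset, including DST.
    """
    kickoff = kickoff_local.replace(tzinfo=stadium_tz)
    return kickoff - before, kickoff + after


def in_strict_mode(kickoff_local: datetime, stadium_tz: tzinfo,
                   now: Optional[datetime] = None) -> bool:
    """True if `now` (timezone-aware; defaults to current UTC) is in the window."""
    now = now or datetime.now(timezone.utc)
    start, end = strict_window(kickoff_local, stadium_tz)
    return start <= now <= end
```

In production the zone would typically be a `zoneinfo.ZoneInfo` from the standard library (Python 3.9+), for example `in_strict_mode(datetime(2025, 9, 7, 20, 0), ZoneInfo("America/New_York"))`, which accounts for daylight saving time.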

Conclusion

Real-time moderation plays a critical role in safeguarding users, protecting brand reputation, and nurturing safe online communities. The best results come from a thoughtful combination of clear guidelines, a layered system where AI manages high-volume tasks while human moderators handle complex cases, and adaptable strategies that cater to sensitive topics, major events, or diverse audiences. This approach strikes a balance between speed, accuracy, safety, and user engagement.

To stay ahead of emerging challenges, continuous improvement is essential. As online behaviors and language evolve, organizations must regularly refine their moderation practices. Key metrics like response times, flagged content rates, and false positives should be monitored closely. Regular audits of moderation decisions can uncover gaps and help update policies. Moderators, often the first to spot new trends or tactics, should be actively involved in shaping these updates. This iterative process ensures systems remain effective against issues such as evolving slang or coordinated abuse campaigns.

A strong example of this approach is Guardii, a moderation tool designed to address these challenges. It automatically hides toxic comments, identifies threats in direct messages across 40+ languages, and provides detailed evidence logs. With features like Priority and Quarantine queues and customizable rule sets tailored to specific events or time zones, Guardii ensures scalability and precision during critical moments.

When implemented effectively, real-time moderation does more than minimize harm - it builds trust and encourages ongoing engagement. For brands and public figures, robust moderation programs are tied to brand safety, sponsor confidence, and the protection of individuals who may face heightened abuse during high-profile events. By investing in clear policies, layered systems, and real-time monitoring, organizations can create secure, thriving online spaces.

Success in real-time moderation depends on consistent measurement, regular audits, and adjustments informed by performance data, moderator feedback, and evolving regulations. Companies that prioritize a comprehensive and integrated approach send a clear message: community safety is not an afterthought, but a fundamental commitment. This dedication fosters resilience and equips organizations to navigate the complexities of today’s digital world.

FAQs

How can AI and human oversight work together for effective real-time moderation?

Real-time moderation blends the quick response and scalability of AI with the nuanced judgment of human oversight. AI tools excel at swiftly scanning and identifying harmful or inappropriate content, automatically hiding toxic comments, and isolating questionable material for further evaluation.

This division of labor provides 24/7 coverage: AI acts instantly on clear-cut cases, while human moderators make the complex, context-dependent calls, balancing efficiency and accuracy.
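One common way to divide the work is confidence-based routing: the AI acts alone on high-scoring content, sends borderline content to a human queue, and lets the rest through. This is a sketch under assumptions, not a description of any specific product: the score is assumed to come from some toxicity classifier in the range 0 to 1, and the thresholds, `route`, and `ReviewQueue` are illustrative names and values.

```python
import heapq
from enum import Enum

class Action(Enum):
    PUBLISH = "publish"
    HIDE = "hide"            # clear-cut: AI acts immediately
    HUMAN_REVIEW = "review"  # borderline: held for a moderator

def route(score: float, hide_at: float = 0.9, review_at: float = 0.5) -> Action:
    """Map a classifier score in [0, 1] to an action (thresholds are illustrative)."""
    if score >= hide_at:
        return Action.HIDE
    if score >= review_at:
        return Action.HUMAN_REVIEW
    return Action.PUBLISH

class ReviewQueue:
    """Borderline items ordered by score, so moderators see the riskiest first."""
    def __init__(self) -> None:
        self._heap: list[tuple[float, str]] = []

    def push(self, item_id: str, score: float) -> None:
        heapq.heappush(self._heap, (-score, item_id))  # negate: heapq is a min-heap

    def pop(self) -> str:
        return heapq.heappop(self._heap)[1]

# Usage with made-up scores.
queue = ReviewQueue()
for item_id, score in [("c1", 0.95), ("c2", 0.55), ("c3", 0.10), ("c4", 0.80)]:
    action = route(score)
    if action is Action.HUMAN_REVIEW:
        queue.push(item_id, score)
    print(item_id, action.value)
print(queue.pop())  # c4
```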

What are the essential steps for creating an effective escalation process in real-time moderation?

An effective escalation process in real-time moderation is essential for tackling critical issues promptly and efficiently. Here’s how to ensure your process is up to the task:

- Define escalation criteria: Be specific about what warrants escalation. This could include threats, harassment, or situations involving legal or safety concerns. Clear guidelines help moderators act decisively.

- Establish response tiers: Assign responsibilities based on the severity of the issue. For example, routine matters might stay with moderators, while serious cases go to legal or safety teams. Everyone involved should know their role and how to step in when needed.

- Track and document incidents: Use tools or workflows to log escalated cases. A detailed record ensures proper follow-up and supports audits, helping to refine the process over time.
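The three steps above can be sketched as a lookup from issue type to tier, plus an incident record that is logged whenever an item escalates. Everything here is hypothetical: the issue types, team names, and response targets are placeholders to be replaced with your own policy, and sending unrecognized issue types to the medium tier is one possible design choice rather than a rule.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta, timezone

# Placeholder tier table: owning team and response target per tier.
TIERS = {
    "high":   ("safety_and_legal", timedelta(minutes=15)),
    "medium": ("senior_moderators", timedelta(hours=1)),
    "low":    ("moderators", timedelta(hours=24)),
}

# Escalation criteria: which issue types map to which tier (illustrative only).
CRITERIA = {"threat": "high", "doxxing": "high", "harassment": "medium", "spam": "low"}

@dataclass
class Incident:
    item_id: str
    issue: str
    tier: str
    owner: str
    due: datetime
    notes: list[str] = field(default_factory=list)

incident_log: list[Incident] = []

def escalate(item_id: str, issue: str, now: datetime) -> Incident:
    tier = CRITERIA.get(issue, "medium")  # unknown issue types still get human attention
    owner, target = TIERS[tier]
    incident = Incident(item_id, issue, tier, owner, due=now + target)
    incident_log.append(incident)  # documented for follow-up and audits
    return incident

# Usage
now = datetime(2025, 1, 1, 12, 0, tzinfo=timezone.utc)
inc = escalate("dm-42", "threat", now)
print(inc.tier, inc.owner, inc.due.isoformat())
# high safety_and_legal 2025-01-01T12:15:00+00:00
```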

By putting these steps into action, you can respond quickly to high-risk situations, safeguard individuals, and protect your brand’s reputation.

How can moderation rules be adapted for specific events and audiences?

Platforms can fine-tune moderation rules to suit specific events and audiences by using AI-powered moderation tools that analyze content as it happens. These tools can automatically hide harmful comments, spot threats or harassment in direct messages, and even adjust their sensitivity based on the nature of the event or the demographics of the audience.
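Adjusting sensitivity per event or audience can be as simple as deriving classifier thresholds from a profile. The sketch below assumes two risk factors (minors in the audience and a high-risk event) and a fixed tightening step; the factor names, base values, step, and floors are illustrative placeholders, not values from any particular tool.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Thresholds:
    hide_at: float    # auto-hide at or above this score
    review_at: float  # send to human review at or above this score

# Placeholder defaults; real values would come from testing on your own data.
BASE = Thresholds(hide_at=0.90, review_at=0.60)

def thresholds_for(minors_in_audience: bool, high_risk_event: bool,
                   base: Thresholds = BASE, step: float = 0.10) -> Thresholds:
    """Tighten (lower) both thresholds by one `step` per active risk factor."""
    tighten = step * (int(minors_in_audience) + int(high_risk_event))
    return Thresholds(
        # floors stop auto-hide and review from firing on very weak signals
        hide_at=round(max(base.hide_at - tighten, 0.50), 2),
        review_at=round(max(base.review_at - tighten, 0.20), 2),
    )

# Usage
print(thresholds_for(False, False))  # Thresholds(hide_at=0.9, review_at=0.6)
print(thresholds_for(True, True))    # Thresholds(hide_at=0.7, review_at=0.4)
```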

Advanced moderation systems can also generate comprehensive evidence packs and audit logs, which support safety, legal, and management teams. Together, these capabilities help protect users and the wider community while safeguarding brand reputation.
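Audit logs are most useful as evidence when tampering can be detected. One common technique, shown here as an assumption rather than as how any specific product works, is hash chaining: each entry stores the SHA-256 hash of the previous one, so editing an earlier entry invalidates the chain. `AuditLog` and its field names are hypothetical.

```python
import hashlib
import json
from datetime import datetime, timezone

class AuditLog:
    """Append-only log; each entry records the hash of the entry before it."""
    GENESIS = "0" * 64  # stand-in "previous hash" for the first entry

    def __init__(self) -> None:
        self.entries: list[dict] = []

    def append(self, action: str, item_id: str, moderator: str, reason: str) -> dict:
        prev_hash = self.entries[-1]["hash"] if self.entries else self.GENESIS
        record = {
            "ts": datetime.now(timezone.utc).isoformat(),
            "action": action, "item_id": item_id,
            "moderator": moderator, "reason": reason,
            "prev_hash": prev_hash,
        }
        record["hash"] = self._digest(record)
        self.entries.append(record)
        return record

    @staticmethod
    def _digest(record: dict) -> str:
        # Hash every field except the stored hash, with stable key order.
        body = {k: v for k, v in record.items() if k != "hash"}
        return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()

    def verify(self) -> bool:
        """Recompute the chain; any edited, removed, or reordered entry breaks it."""
        prev_hash = self.GENESIS
        for record in self.entries:
            if record["prev_hash"] != prev_hash or record["hash"] != self._digest(record):
                return False
            prev_hash = record["hash"]
        return True

# Usage
log = AuditLog()
log.append("hide", "c1", "auto", "threat")
log.append("escalate", "c1", "mod_7", "credible threat")
print(log.verify())  # True
log.entries[0]["reason"] = "spam"  # simulate tampering
print(log.verify())  # False
```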