Hidden Meanings Behind Emojis in Online Abuse

Emojis aren’t just playful icons - they’re often used as a secret code in online abuse, bullying, and criminal activities. From mocking symbols like 🤡 and 🐍 to sexualized combinations like 🍆💦, their meanings shift depending on context, making them hard to detect. Predators, bullies, and even extremist groups exploit this ambiguity to evade detection, leaving victims vulnerable.

Key Takeaways:

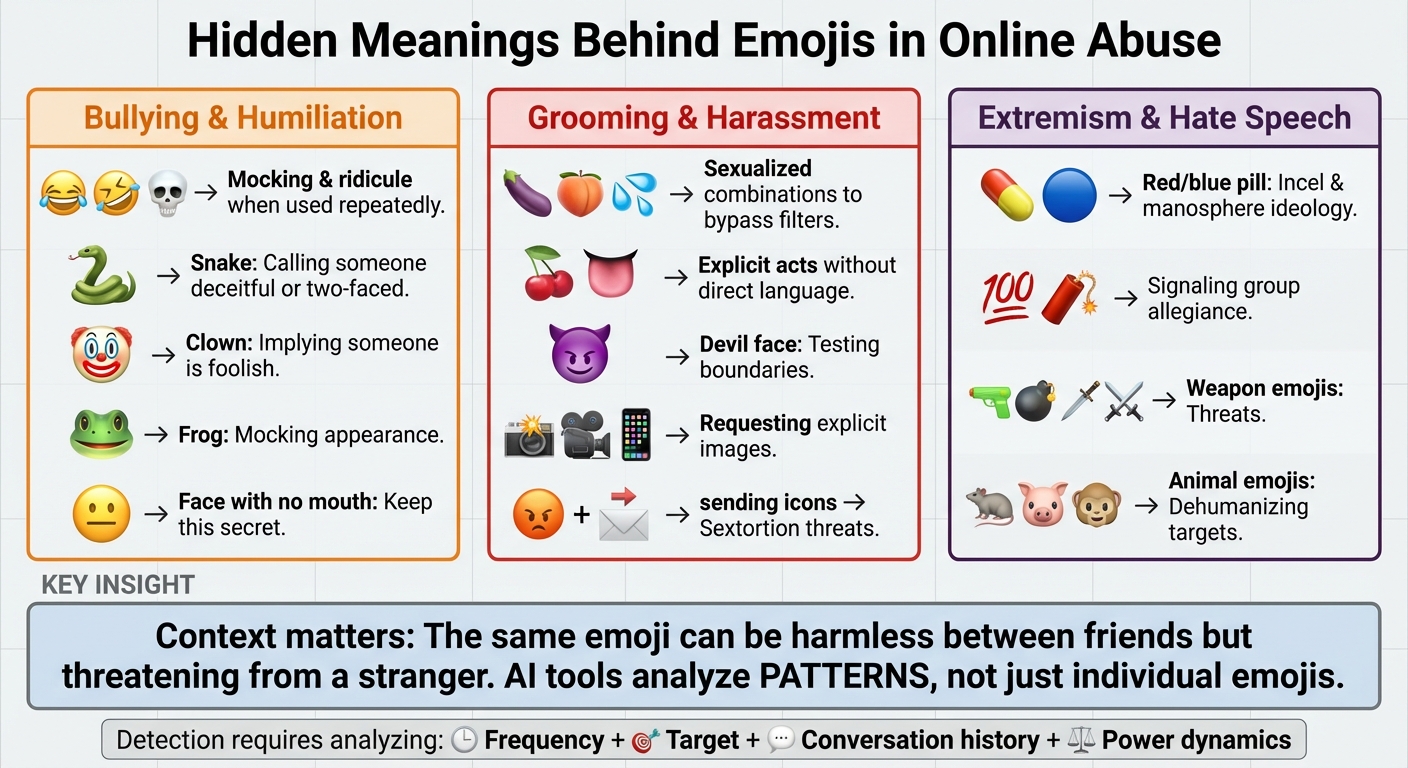

- Bullying: Emojis like 😂 and 💀 can humiliate when used repeatedly under someone’s posts.

- Grooming: Predators use emojis like 🍆, 🍑, and 📷 to bypass filters and pressure victims.

- Extremism: Groups repurpose emojis like 💊 and 🧨 to signal ideologies or threats.

- Detection: AI tools like Guardii analyze patterns, not just individual emojis, to identify risks.

To combat this, platforms need context-aware moderation tools that flag harmful patterns, protect users, and provide evidence for legal action. Emojis alone rarely tell the full story - it’s the patterns and context that reveal abuse.

Hidden Meanings of Emojis in Online Abuse: Bullying, Grooming, and Extremism

How Emojis Function as Coded Language in Online Abuse

Why do abusers use emojis in online interactions?

Abusers often turn to emojis because these symbols can slip past basic keyword filters and manual reviews. While platforms might automatically flag explicit language or terms, emojis like 🍑, 🍆, 💦, or 🔪 can carry sexualized or threatening meanings without setting off alerts.
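The filter-evasion point can be made concrete with a minimal sketch of a word-based keyword filter. The blocklist, the `keyword_filter` function, and the sample messages are hypothetical. The sketch only shows that a filter which matches words has nothing to match when the meaning is carried by emojis.

```python
import re

# Hypothetical blocklist of explicit words; production lists are longer but work the same way.
BLOCKED_WORDS = {"nude", "kill", "sex"}


def keyword_filter(message: str) -> bool:
    """Return True if any lowercase word in the message is on the blocklist."""
    words = re.findall(r"[a-z']+", message.lower())
    return any(word in BLOCKED_WORDS for word in words)


messages = [
    "send a nude pic",   # explicit wording: caught
    "send 📷 🍆💦",        # same request in emoji: missed
    "you're dead 🔪🔪",    # threat carried by the emoji: missed
]
for msg in messages:
    print(keyword_filter(msg), msg)
```

Only the first message is flagged. The other two reach the recipient untouched.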

Emojis also provide a layer of plausible deniability. If confronted, the sender can brush off their use as "just playful", even when their intended audience - whether peers or a specific group - understands the harmful undertone. This allows the abuser to sidestep accountability while leaving the victim to endure ongoing ridicule or intimidation.

Their simplicity is another advantage. Emojis enable quick, repeated harassment, whether through taunts, sexualized messages, or threats that transcend language barriers [2]. In extremist or hate-filled spaces, emojis can act as subtle markers of group identity or ideological alignment, avoiding explicit language that could draw attention. For example, the 💊 emoji, once a pop culture reference, has taken on new meaning in some online communities, signaling misogynistic or extremist views.

These strategies highlight how the context in which emojis are used can drastically change their meaning.

How do emoji meanings shift across different contexts?

The meaning of an emoji can change entirely depending on the situation. A heart ❤️ or fire 🔥 emoji might feel warm and encouraging when shared between friends, but the same symbols from a stranger - or worse, a harasser - can be interpreted as intrusive or even sexualized.

Different groups and subcultures often assign new, sometimes harmful, meanings to emojis. What appears harmless in a general emoji guide may carry a specific, coded message within certain communities. For instance, teens might use emojis as slang, with meanings that evolve rapidly. A symbol like 🐸 (frog) might be used to mock someone's appearance, while 😶 (face with no mouth) could signal "keep this secret" in situations involving bullying, sexual abuse, or even illegal activity.

In abusive relationships, emojis can take on even darker roles. A pre-arranged emoji might serve as a veiled threat, such as "comply or I'll leak your photos" in cases of sextortion. These meanings depend on the context - conversation history, prior incidents, and power dynamics all play a role in how the emoji is interpreted [2].

Because these meanings evolve so quickly, relying on static lists of "bad emojis" is ineffective. Safety teams need to analyze how emojis are being used in real conversations and across different communities over time to identify potential risks.

Emoji Patterns in Bullying, Harassment, and Exploitation

Emojis Used for Bullying and Humiliation

Emojis have become a subtle yet powerful tool for online bullying, allowing users to mock or belittle others without using explicit language. For instance, emojis like Face with Tears of Joy (😂), Rolling on the Floor Laughing (🤣), and Skull (💀) are often posted alongside someone’s content to suggest it’s laughable or unworthy of respect. When these emojis are used in a coordinated manner - such as flooding a post with multiple 😂 or 💀 - the ridicule becomes even more public and intense.
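A coordinated pile-on like this can be approximated by counting how many distinct accounts reply to a post with nothing but mocking emojis. The sketch below assumes a hypothetical `Comment` record and an arbitrary threshold of five accounts. The emoji set is taken from the examples in this section.

```python
from dataclasses import dataclass

# Mocking emojis named in this section; the set is illustrative, not exhaustive.
MOCKING = {"😂", "🤣", "💀", "🤡"}


@dataclass
class Comment:
    author: str
    text: str


def is_mocking_only(text: str) -> bool:
    """True if the comment consists solely of mocking emojis (whitespace ignored)."""
    chars = [ch for ch in text if not ch.isspace()]
    return bool(chars) and all(ch in MOCKING for ch in chars)


def mocking_pile_on(comments: list[Comment], min_authors: int = 5) -> bool:
    """Flag a post when at least `min_authors` distinct accounts post mocking-only replies."""
    authors = {c.author for c in comments if is_mocking_only(c.text)}
    return len(authors) >= min_authors


post = [Comment(f"user{i}", "💀💀") for i in range(6)]
post.append(Comment("friend", "congrats 😂"))
print(mocking_pile_on(post))  # True: six different accounts replied with only 💀
```

Counting distinct authors rather than raw emoji totals keeps one enthusiastic friend spamming 😂 from triggering the flag, and a reply that mixes words with 😂, like the friend's, is not counted at all.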

Other emojis, including several animals, carry coded insults. The Snake (🐍) is commonly used to brand someone as deceitful or two-faced, particularly after drama involving friendships or relationships. The Clown (🤡) implies that someone is foolish or "a joke." Meanwhile, the Frog (🐸) can be used to mock someone’s appearance, and the Face with No Mouth (😶) often signals that a secret or comment should be kept hidden, adding an element of exclusion or secrecy.

Sexualized Emojis in Harassment and Grooming

In cases of online harassment and grooming, emojis are often used to bypass content filters and communicate sexual intent. Common examples include the Eggplant (🍆), Peach (🍑), Cherries (🍒), and Sweat Droplets (💦). These symbols are frequently combined - like 🍆💦 or 🍑👅 - to imply explicit acts without using direct language. The Australian Centre to Counter Child Exploitation notes that even seemingly unrelated emojis, like the Angry Face (😡), can serve as subtle indicators of a child being at risk.
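Because the meaning often sits in the combination rather than the single symbol, a filter can look at consecutive emojis instead of individual ones. In this sketch the `RISKY_PAIRS` set is hypothetical and built from the examples above, and `is_emoji` is a crude heuristic (code points at or above U+1F300) rather than a full Unicode emoji parser. A real system would need proper emoji segmentation.

```python
# Hypothetical pairings built from the examples in this section.
RISKY_PAIRS = {("🍆", "💦"), ("🍑", "👅"), ("😈", "🍆")}


def is_emoji(ch: str) -> bool:
    # Crude heuristic: most pictographic emojis sit at or above U+1F300.
    # It misses older symbols (e.g. ✨) and multi-code-point sequences.
    return ord(ch) >= 0x1F300


def emoji_pairs(text: str) -> list[tuple[str, str]]:
    """Consecutive emoji pairs, skipping any words or spaces between them."""
    emojis = [ch for ch in text if is_emoji(ch)]
    return list(zip(emojis, emojis[1:]))


def risky_combinations(text: str) -> list[tuple[str, str]]:
    return [pair for pair in emoji_pairs(text) if pair in RISKY_PAIRS]


print(risky_combinations("you up? 😈🍆💦"))  # [('😈', '🍆'), ('🍆', '💦')]
print(risky_combinations("🍆 parm recipe"))   # []
```

A lone 🍆 in a recipe post produces nothing; only the pairing is treated as a signal.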

Groomers often escalate their tactics gradually. They may start with friendly or playful emojis like 😊, ✨, or 💖, easing into more suggestive combinations over time - such as pairing Devil Face (😈) with 🍆 and 💦 - to test boundaries. Emojis like cameras (📷, 📹) or phones (📱) are frequently used to request or pressure victims into sharing explicit images. In sextortion cases, threatening emojis such as 😡 or 😈 are paired with icons that suggest sending or sharing images to intimidate victims. Law enforcement agencies have reported a growing trend of online child exploitation involving these coded messages.

Emojis in Hate Speech and Extremist Groups

Emojis have also found a place in hate speech and extremist circles, where they are repurposed to promote radical ideologies. The Pill (💊), read as the "red pill", and the Blue Circle (🔵), standing in for the "blue pill", are linked to incel and manosphere communities, while icons like 100 (💯) and Firecracker (🧨) are used to signal allegiance or reinforce group narratives. Weapon-related emojis, such as Gun (🔫), Bomb (💣), Dagger (🗡️), and Crossed Swords (⚔️), often carry threatening undertones, especially when paired with identifiers of specific groups. Additionally, animal emojis like Rat (🐀), Pig (🐷), and Monkey (🐒) are employed to dehumanize and demean targeted communities.

Researchers at the University of Bath highlight that while emojis themselves are not responsible for radicalization, they act as visible markers of deeper involvement in harmful ideologies. Keeping track of these evolving uses is critical for understanding and addressing potential threats.

Why Context Matters in Emoji Interpretation

How do subcultures change emoji meanings?

Online communities - ranging from teenagers to extremist groups - often repurpose seemingly neutral emojis into coded language that outsiders find nearly impossible to interpret. What might look like a harmless symbol can carry hidden meanings within specific peer networks. According to the National Cybersecurity Alliance, "what looks like a cute, harmless text symbol could mean something entirely different to your kids", including threats, sexual propositions, racist remarks, or drug-related communications. Bullies, for instance, may "hide behind the original meaning" of an emoji when confronted.

Teens and niche groups are particularly quick to create and redefine these coded meanings, using platforms like TikTok, Instagram, Discord, and gaming chats to spread their updates. These changes often happen so fast that adults, educators, and even institutions struggle to keep up. For example, emojis like 💊, 🍁, and 🔮 have become part of a "drug code" commonly used in illicit markets to advertise substances. Child-safety organizations that work with thousands of students each year track these evolving meanings as they spread among young people.

Because these codes are shared within tight-knit peer networks, outsiders - whether parents, teachers, or moderators - are often left in the dark unless they gain insight directly from the communities involved. Extremist and criminal groups, in particular, intentionally adapt their emoji codes to evade detection, switching to more obscure symbols or combinations once their earlier codes are exposed.

This ever-changing landscape shows why focusing on single emojis without their context can fail to reveal harmful intent.

Why analyzing single emojis is insufficient for risk assessment

The fluid nature of emoji meanings within subcultures makes it clear that assessing risk requires more than isolating individual symbols. Single-emoji "cheat sheets" often lead to misinterpretations because they ignore context, evolution, and ambiguity. A guide may list potential hidden meanings, but the same emoji labeled "threatening" or "sexual" is used by most people in its literal or playful sense. Overreacting to every instance can overwhelm safety teams, creating unnecessary noise [2].
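The noise problem is easy to reproduce. This sketch applies a hypothetical single-emoji "cheat sheet" to a few everyday messages; every entry and message is invented for illustration.

```python
# Hypothetical cheat sheet assigning a fixed "hidden meaning" to single emojis.
CHEAT_SHEET = {"🔥": "sexual", "🍑": "sexual", "💀": "bullying", "🐍": "bullying"}


def cheat_sheet_flags(message: str) -> list[str]:
    """Return the label of every cheat-sheet emoji found in the message."""
    return [CHEAT_SHEET[ch] for ch in message if ch in CHEAT_SHEET]


everyday = [
    "this pizza is 🔥",
    "peach cobbler recipe 🍑",
    "that video 💀 I can't",
    "Year of the 🐍 party",
]
flagged = [m for m in everyday if cheat_sheet_flags(m)]
print(f"{len(flagged)} of {len(everyday)} harmless messages flagged")
# prints "4 of 4 harmless messages flagged"
```

Every message trips the list, yet none is abusive. Across a busy platform, this is the noise that buries genuine cases.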

Harmful intent becomes evident in patterns rather than isolated symbols. For example, repeatedly using mocking emojis under someone's posts could signal ongoing humiliation, even if no explicit slurs are present. Similarly, a progression from neutral reactions to increasingly aggressive or suggestive emoji combinations might indicate grooming, radicalization, or escalating hostility - often before any explicit language appears. The Australian Federal Police’s child-exploitation unit has noted that even something as simple as an angry face emoji could, in some cases, signal manipulation of a child. However, they caution that "in most cases it is probably nothing to worry about", emphasizing the importance of understanding the broader context [2].
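One way to operationalize "a progression toward more suggestive combinations" is to score each message and compare the start of a conversation with its most recent messages. The weights, the window size, and the threshold below are all hypothetical; a deployed system would presumably learn them from labeled data rather than hard-code them.

```python
# Hypothetical per-emoji weights: 0 = neutral, higher = more suggestive.
WEIGHTS = {"😊": 0, "✨": 0, "💖": 1, "📷": 2, "😈": 2, "🍆": 3, "💦": 3}


def message_score(text: str) -> int:
    return sum(WEIGHTS.get(ch, 0) for ch in text)


def is_escalating(messages: list[str], window: int = 3, jump: float = 3.0) -> bool:
    """Flag when the mean score of the last `window` messages exceeds the first by `jump`."""
    if len(messages) < 2 * window:
        return False  # too little history to speak of a trend
    scores = [message_score(m) for m in messages]
    early = sum(scores[:window]) / window
    late = sum(scores[-window:]) / window
    return late - early >= jump


grooming_like = ["hi 😊", "nice game ✨", "you're sweet 💖", "send a pic 📷", "😈", "😈🍆💦"]
friendly = ["hi 😊", "gg ✨", "lol", "see you 😊", "thanks 💖", "✨"]
print(is_escalating(grooming_like), is_escalating(friendly))  # True False
```

Comparing averages at both ends of the conversation tolerates a single crude joke in the middle but reacts to a sustained shift, which is the progression the text describes.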

To accurately assess risks, it’s essential to look at emojis in conjunction with factors like message tone, frequency, target, and known risk indicators. Social dynamics also play a critical role. For instance, friends might exchange "threatening" emojis as part of dark humor, fully aware of the joke. But when the same emoji comes from an online stranger - or worse, an adult toward a minor - it takes on a much more concerning meaning. Scholars have pointed out that emojis "don't cause radicalisation", and focusing too much on decoding them can distract from analyzing the actual content and relationships involved.
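The same emoji score can be adjusted by the relationship between sender and recipient. This sketch uses a hypothetical `MessageContext` record and illustrative multipliers. The factors mirror the ones named above (prior relationship, adult-to-minor contact, frequency), but the numbers are assumptions.

```python
from dataclasses import dataclass


@dataclass
class MessageContext:
    emoji_risk: float         # 0..1 base score, e.g. from a pattern match
    sender_is_adult: bool
    recipient_is_minor: bool
    prior_contact: bool       # have the two accounts interacted before?
    messages_last_hour: int


def contextual_risk(ctx: MessageContext) -> float:
    """Scale a base emoji score by relational context; multipliers are illustrative."""
    score = ctx.emoji_risk
    if not ctx.prior_contact:
        score *= 1.5   # the same emoji from a stranger reads differently
    if ctx.sender_is_adult and ctx.recipient_is_minor:
        score *= 2.0   # adult-to-minor contact raises concern sharply
    if ctx.messages_last_hour > 20:
        score *= 1.25  # high frequency suggests pressure or flooding
    return min(score, 1.0)


friends = MessageContext(emoji_risk=0.3, sender_is_adult=False, recipient_is_minor=False,
                         prior_contact=True, messages_last_hour=2)
stranger_to_minor = MessageContext(emoji_risk=0.3, sender_is_adult=True, recipient_is_minor=True,
                                   prior_contact=False, messages_last_hour=2)
print(contextual_risk(friends), round(contextual_risk(stranger_to_minor), 2))  # 0.3 0.9
```

The emoji content is identical in both cases; only the relationship changes the outcome.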

This complexity highlights the need for advanced tools that can analyze contextual and relational data to identify genuine threats effectively.


AI Moderation and Emoji-Based Risk Detection

How AI tools detect risky emoji patterns

Modern AI moderation tools treat emojis as more than just fun symbols - they see them as important signals that can indicate risky behavior. These systems use advanced language models that analyze emojis alongside words and hashtags, learning their meanings in different contexts from massive datasets of real conversations. By combining machine learning with rule-based patterns, these tools can flag high-risk combinations, like emojis used to represent drugs or repeated sexual symbols. They also look at behavioral clues, such as how often emojis are used, who is sending them, and what phrases accompany them, to differentiate between jokes and actual threats.
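A rough sketch of how rule-based patterns and machine learning can be combined: a hand-written rule catches a known code, while a learned model scores everything else. Here `model` is a placeholder for any classifier that returns a probability; no particular model or library is implied. The drug-code rule (emojis from the examples earlier in this article plus a few hypothetical sales words) is illustrative only.

```python
import re
from typing import Callable

# Drug-code emojis mentioned earlier; the sales words are hypothetical.
DRUG_EMOJIS = {"💊", "🍁", "🔮"}
SALES_PHRASE = re.compile(r"\b(dm|hmu|menu|plug)\b", re.IGNORECASE)


def rule_hits(text: str) -> list[str]:
    """Return the names of hand-written rules the text triggers."""
    hits = []
    if any(ch in DRUG_EMOJIS for ch in text) and SALES_PHRASE.search(text):
        hits.append("drug_code_with_sales_phrase")
    return hits


def hybrid_score(text: str, model: Callable[[str], float]) -> float:
    """Combine a learned probability with rules; a rule hit sets a high floor."""
    p = model(text)
    if rule_hits(text):
        p = max(p, 0.9)
    return p


def stub_model(text: str) -> float:
    return 0.2  # stand-in for a real classifier's P(harmful)


print(hybrid_score("new menu 🍁💊 hmu", stub_model))  # 0.9
print(hybrid_score("autumn walk 🍁", stub_model))       # 0.2
```

Using the rule as a floor rather than an override lets the model still push the score higher, while innocent uses of 🍁 without a sales context fall back to the model's judgment.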

Machine learning helps catch subtle and evolving patterns, adapting to new ways people use coded language. At the same time, weighing context helps reduce false alarms in harmless situations. For instance, the system might consider factors like sudden spikes in abusive emoji use aimed at one person, adults messaging minors, or phrases like "this will go viral." By examining account histories and whether the communication happens in public comments or private messages, the AI can better identify genuine risks.

Practical workflows for safety teams

Once risky behavior is detected, safety teams rely on structured workflows to act on these insights.

AI moderation systems apply different levels of response depending on the content's risk level. For low-risk cases, such as ambiguous emoji use, the content is logged for later review. If the content suggests bullying or inappropriate innuendo, it may be automatically hidden until a moderator can review it. High-risk cases, like explicit threats or grooming behaviors, are hidden immediately and flagged for detailed review by safety or legal teams. For severe direct messages, the system may quarantine the message, blur its content, and generate a detailed case record that includes timestamps, user IDs, conversation context, and the rules that were triggered.
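The tiered responses described above map naturally onto a small decision function. The 0 to 1 risk scale and the thresholds (0.2, 0.5, 0.8) are assumptions for illustration, as is the choice to take no action at all on the lowest scores.

```python
from enum import Enum


class Action(Enum):
    NONE = "none"
    LOG_FOR_REVIEW = "log"                 # ambiguous emoji use
    HIDE_PENDING_REVIEW = "hide_pending"   # suspected bullying or innuendo
    HIDE_AND_ESCALATE = "hide_escalate"    # explicit threats, grooming signals
    QUARANTINE_DM = "quarantine"           # severe direct messages: blur and open a case


def choose_action(risk: float, is_direct_message: bool) -> Action:
    """Map a risk score in [0, 1] to a response tier; thresholds are illustrative."""
    if risk >= 0.8:
        return Action.QUARANTINE_DM if is_direct_message else Action.HIDE_AND_ESCALATE
    if risk >= 0.5:
        return Action.HIDE_PENDING_REVIEW
    if risk >= 0.2:
        return Action.LOG_FOR_REVIEW
    return Action.NONE


for risk, is_dm in [(0.1, False), (0.3, False), (0.6, True), (0.9, False), (0.9, True)]:
    print(risk, is_dm, choose_action(risk, is_dm).name)
```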

These records are then sent to the appropriate team. For example:

- Wellbeing or safeguarding teams: Handle support outreach for affected individuals.

- Legal or compliance teams: Assess evidence for potential legal action.

- Security teams: Manage credible threats that require escalation.
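Routing can be sketched as a lookup from case category to team. The category names and the fallback to a general moderation queue are hypothetical; the three destinations follow the list above, and a single case may go to more than one team.

```python
# Hypothetical case categories mapped to the teams listed above.
ROUTES = {
    "support_needed": "wellbeing",
    "possible_legal_action": "legal",
    "credible_threat": "security",
}


def route_case(categories: set[str]) -> list[str]:
    """Return every team that should receive the case, sorted for stable output."""
    teams = {ROUTES[c] for c in categories if c in ROUTES}
    return sorted(teams) or ["moderation"]  # unrecognized cases go to general moderation


print(route_case({"credible_threat", "support_needed"}))  # ['security', 'wellbeing']
print(route_case({"spam"}))                                 # ['moderation']
```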

Moderators review the flagged content, examine conversation histories, and check prior reports to quickly decide whether to restore content, maintain the action taken, or escalate the case further.

Evidence packs are a key part of these workflows. They provide a clear and detailed record of what happened, how often it occurred, and why it’s important. A strong evidence pack includes:

- Raw content (screenshots and text exports with emojis and timestamps)

- Contextual explanations (what specific emojis mean in risky contexts like grooming or drug use)

- Pattern summaries (frequency and escalation timelines)

- System logs (rules triggered and actions taken)

- Access details (who reviewed or exported the data)
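The components listed above can be modeled as a simple record that serializes to JSON. Field names such as `case_id` and `rules_triggered` are hypothetical. Screenshots are left out because they are binary files that would be stored alongside the export, and the timestamp sorting assumes every timestamp uses the same ISO 8601 format and time zone.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class EvidenceItem:
    timestamp: str  # ISO 8601, same format and zone for all items
    sender_id: str
    text: str       # raw text with emojis preserved


@dataclass
class EvidencePack:
    case_id: str
    items: list[EvidenceItem]
    context_notes: dict[str, str]  # emoji -> meaning in this context
    rules_triggered: list[str]
    actions_taken: list[str]
    access_log: list[str] = field(default_factory=list)

    def pattern_summary(self) -> dict:
        """Frequency and time span of the collected messages."""
        ts = sorted(item.timestamp for item in self.items)
        return {"message_count": len(self.items),
                "first_seen": ts[0] if ts else None,
                "last_seen": ts[-1] if ts else None}

    def export(self, reviewer: str) -> str:
        """Record who exported the pack, then serialize it with emojis kept readable."""
        self.access_log.append(f"exported by {reviewer}")
        data = asdict(self)
        data["pattern_summary"] = self.pattern_summary()
        return json.dumps(data, ensure_ascii=False, indent=2)


pack = EvidencePack(
    case_id="case-001",
    items=[EvidenceItem("2024-05-01T14:03:00Z", "user_42", "🐍🐍"),
           EvidenceItem("2024-05-01T13:00:00Z", "user_42", "🤡")],
    context_notes={"🐍": "used to brand the target as two-faced"},
    rules_triggered=["mocking_pile_on"],
    actions_taken=["comment_hidden"],
)
print(pack.export(reviewer="moderator_7"))
```

Appending to `access_log` before serializing means every exported copy records who produced it, which covers the access-details item.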

This documentation supports internal investigations and can be shared with external regulators or U.S. law enforcement when necessary.

How Guardii protects athletes and creators

Guardii takes these workflows and applies them to safeguard athletes, influencers, and content creators in real-time.

Guardii provides emoji-aware moderation for platforms like Instagram, monitoring comments and direct messages to automatically hide harmful content. It identifies toxic combinations of emojis, slurs, and threats while staying compliant with Meta’s policies. The system detects grooming and harassment across more than 40 languages, prioritizing or quarantining risky content for review. Detailed evidence packs - including conversation logs, timestamps, and user IDs - help with internal reviews and legal actions. Real-time alerts via Slack, Teams, or email, along with watchlists for repeat offenders, ensure athletes and creators stay protected from harmful interactions.


Conclusion

Emojis have evolved far beyond simple expressions of emotion - they now carry the potential for harm, including threats, sexual propositions, bullying, and extremist messaging. Abusers often pair emojis with minimal text to create messages that appear innocent on the surface but convey a clear, harmful intent to the recipient. Since subcultures, extremist groups, and offenders constantly assign new, specialized meanings to common emojis, there’s no universal "emoji dictionary" that can keep up with these shifting codes.

To address this challenge, risk assessment must focus on contextual patterns rather than isolated symbols. A single emoji rarely signals abuse. Instead, repeated patterns - like the consistent use of demeaning icons, escalating sexualized imagery in a minor's messages, or sudden tone shifts within an ongoing conversation - are more telling. Static keyword lists can't keep up with evolving misuse, which is why AI-powered, context-aware moderation tools are now indispensable for protecting individuals and organizations.

For U.S. athletes, influencers, and creators, emoji-based abuse poses risks like emotional distress, reputational damage, and even safety threats. These issues can erode public trust and deter sponsorships. Tools like Guardii offer a proactive solution by automating detection and evidence collection. Guardii moderates Instagram comments and direct messages in over 40 languages, identifying harmful emoji combinations and toxic messages. It automatically hides harmful content, flags high-risk messages for review, and generates evidence packs and audit logs to support legal and safeguarding efforts when necessary.

The objective isn’t to monitor every message or ban emojis entirely. Instead, it’s about recognizing persistent patterns of harm - whether they’re demeaning, sexually exploitative (especially toward minors), threatening, or linked to extremist ideologies. A balanced approach that combines open dialogue, cultural awareness, and proportionate use of AI tools is far more effective than blanket monitoring or overreaction.

Addressing emoji-based abuse requires prioritizing context over knee-jerk responses. By analyzing misuse patterns and leveraging real-time AI moderation, organizations can implement targeted safety measures that protect individuals and maintain trust. Combining these tools with clear escalation protocols ensures a safer, more secure environment for everyone.

FAQs

How can parents recognize if their child is being targeted with harmful emoji messages?

Parents can identify potential concerns by paying attention to unusual or repetitive emoji patterns in their child’s messages. These patterns might be used to hide threats, bullying, or grooming attempts that aren't immediately clear.

AI tools such as Guardii can assist by analyzing and flagging suspicious messages. This added layer of protection helps uncover hidden signals, alerting parents to possible risks. By doing so, it supports safer online environments and offers families greater peace of mind.

How do AI tools help detect and prevent online abuse involving emojis?

AI tools can be very effective at spotting abusive behavior involving emojis. By examining the context in which emojis appear, these tools can pick up on harmful intentions like harassment, threats, or inappropriate communication. They don’t just look at emojis in isolation; they look for patterns and meanings that might otherwise go unnoticed.

Once such behavior is identified, these systems can automatically hide or flag the suspicious content, enabling moderation teams to respond quickly. Additionally, they generate detailed evidence logs, which can play a key role in ensuring safety, aiding legal actions, and safeguarding both individuals and brands.

Why does context matter when understanding the meaning of emojis in online interactions?

Context plays a crucial role in understanding emojis because their meaning can shift depending on the situation, cultural subtleties, or the tone of a conversation. An emoji that's playful in one scenario might carry a completely different, even harmful, connotation in another.

Grasping the context helps distinguish between lighthearted interactions and messages that could be harmful. This is particularly important in online spaces, where emojis often serve as nuanced tools for expressing feelings - or, sadly, as a way to mask abusive intentions.