Guide to building real-time moderation: clear rules, AI + human layers, escalation tiers, event-specific settings, multilingual support, and crisis protocols.

Automated real-time capture preserves online abuse before it disappears, recording timestamps, metadata, and tamper-proof logs to support safety teams, legal cases, and brand protection.

Multilingual AI detects and auto-hides abusive comments and high-risk DMs across 40+ languages to improve user safety and protect reputations.

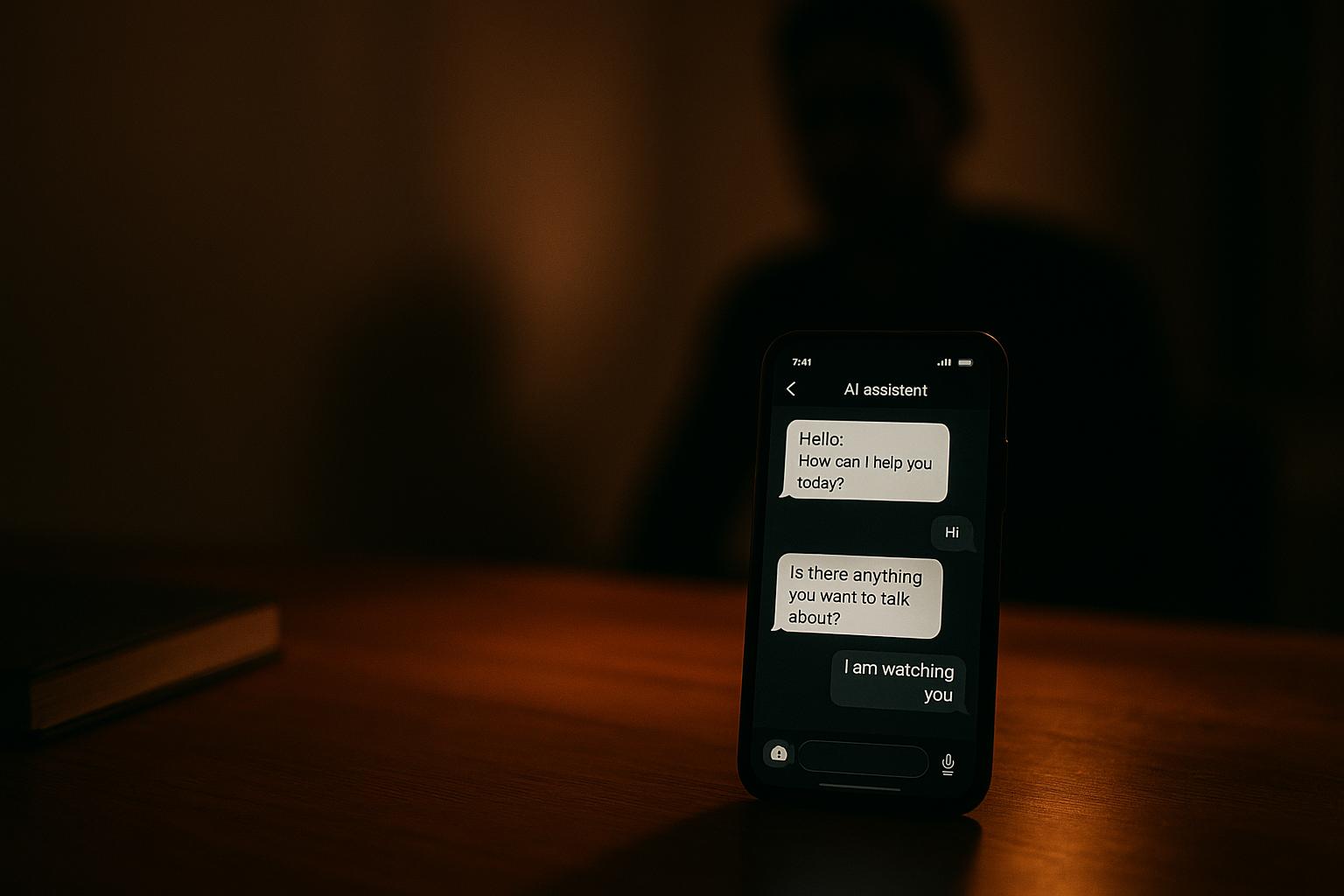

AI tracks conversation trajectories to spot grooming and protect users by flagging risky DMs, hiding harmful content, and compiling legal-ready evidence.

How regional language, slang, and cultural norms affect AI moderation, and how localized models plus human review reduce false positives and missed threats.

Breaks down privacy, bias, and breach risks of biometric age checks and recommends on-device processing, cryptographic proofs, and data-minimizing designs.

Overview of U.S. child protection laws, mandatory reporter duties, reporting timelines, documentation standards, state registries, confidentiality, and compliance.

Checklist for building, validating, and deploying predictive models to detect online grooming, sextortion, and harassment while ensuring fairness and privacy.

Predators use AI (deepfakes, chatbots, and mass targeting) to groom and blackmail children and to scale exploitation, straining law enforcement and demanding better detection.

How AI uses NLP, machine learning, and computer vision to detect harassment across text, images, and DMs with real-time alerts, multilingual support, and evidence packs.