How Predators Use AI to Target Victims

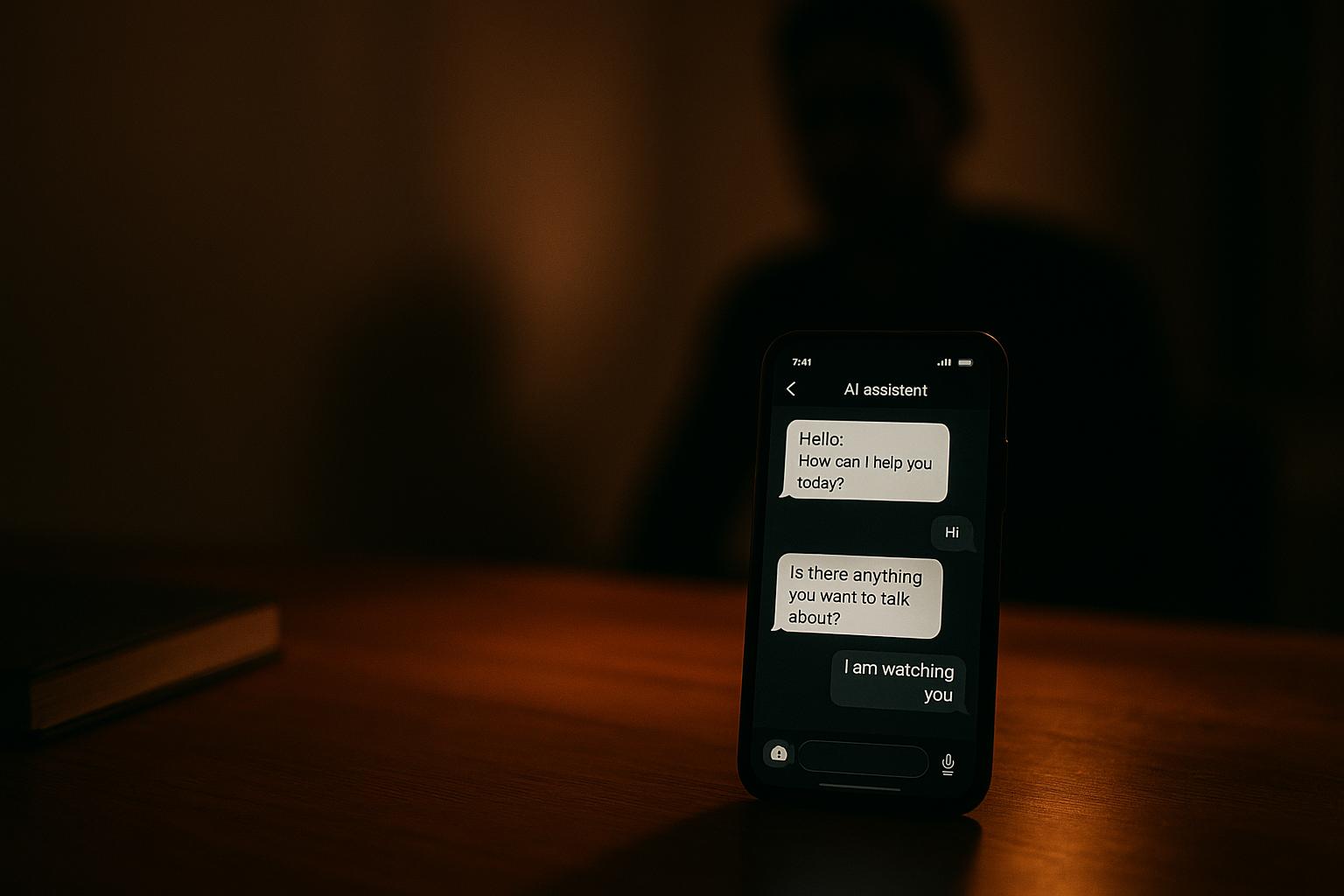

Predators are exploiting AI to target children online, creating new challenges for safety and law enforcement.

AI tools now enable offenders to manipulate innocent photos into explicit material, automate grooming through chatbots, and generate synthetic images that mimic real abuse. This has led to a surge in AI-related exploitation cases, with reports skyrocketing from 6,835 to 440,419 in just six months in the U.S. alone. Girls account for 94% of victims, and infants are increasingly targeted.

Key points:

- AI manipulation: Innocent photos are altered into explicit images, often used for blackmail.

- Chatbots: Automate grooming, allowing predators to target multiple victims at once.

- Synthetic images: AI generates realistic fake abuse material, complicating investigations.

- Law enforcement struggles: Only 12% of cases lead to prosecution due to overwhelming content volumes.

Efforts to combat this crisis include tools like Guardii, which uses AI to detect threats in real time, moderate harmful content, and provide evidence for legal action. However, education, stricter regulations, and collaboration between tech companies and child protection organizations are critical to addressing this growing threat.

AI Technologies Used by Predators

Advancements in AI have provided predators with tools that make creating harmful content and targeting victims easier than ever. These technologies fall into three primary categories, each presenting unique dangers to children's safety online.

Deepfakes and Synthetic Images

AI-driven image manipulation has drastically changed how predators create child sexual abuse material. Offenders can take innocent photos from social media or community pages and alter them into explicit images using generative AI, or even produce entirely synthetic depictions of children who don’t exist. These creations are often so realistic that they’re nearly impossible to distinguish from genuine abuse material, complicating efforts to detect and remove them.

What makes this even more disturbing is that some AI models have been trained on existing child sexual abuse material. This allows offenders to generate new depictions of previously exploited children, effectively re-victimizing them. In some cases, children themselves have used AI to create explicit images of their peers, introducing a new layer of peer-to-peer exploitation.

Once created, these manipulated images are often used for blackmail. Predators threaten to distribute the content unless their demands are met, shifting tactics to rely less on direct coercion and more on technology-enabled manipulation.

AI Chatbots for Grooming and Deception

AI-powered chatbots are another tool that predators are using to refine their tactics. These chatbots can simulate explicit conversations with children and even guide offenders on how to groom or exploit victims. By automating interactions, predators can engage with multiple targets at once, personalizing their approach based on each victim’s online behavior and responses. AI-driven analysis of behavioral patterns allows predators to build detailed profiles, helping them identify and exploit vulnerabilities.

These chatbots are also adept at creating fake identities that are alarmingly realistic. By mimicking human behavior, they can trick children into believing they’re interacting with actual peers. This makes it increasingly difficult for young users to differentiate between real people and AI-generated personas. Research highlights the urgent need for tools to distinguish minors from adults pretending to be children online - a challenge made even harder by AI’s ability to craft convincing child-like identities.

The scale of the problem is staggering. Since 2020, online grooming cases have surged by over 400%, and sextortion cases have climbed by more than 250%. Financial sextortion, often targeting teenage boys, is being increasingly automated through AI, adding another layer of complexity to the issue. Reports to the National Center for Missing & Exploited Children jumped by 149% between 2022 and 2023, underscoring the growing scope of the crisis.

Automated Targeting at Scale

Perhaps the most alarming aspect of AI is its ability to scale predatory behavior. Traditional methods of targeting victims were limited by time and resources, but AI has largely removed these barriers. With automated personalization and targeting, predators can now reach far more victims than ever before. Additionally, the speed at which AI can generate harmful content makes it incredibly difficult for child protection organizations to intervene in time.

The statistics paint a grim picture. One in seven children encounters unwanted contact from strangers online, often through direct messages. Around 80% of grooming cases begin on social media platforms before moving to private messaging apps, where predators operate with even less oversight. Alarmingly, only 10% to 20% of these incidents are reported, meaning the true scale of the issue is likely much larger. Law enforcement agencies are overwhelmed, with only 12% of reported cases leading to prosecution. The sheer volume of incidents, coupled with the complexity of investigating AI-generated content, makes it difficult for authorities to keep up. Cross-border operations by predators further complicate enforcement efforts.

"The research clearly shows that preventative measures are critical. By the time law enforcement gets involved, the damage has often already been done." – Guardii's 2024 Child Safety Report

The COVID-19 pandemic has only amplified these challenges. Online exploitation increased by 70% during and after the crisis as children spent more time online. Predators adapted quickly, taking advantage of the uptick in digital activity. Current trends suggest that global online grooming cases will continue to rise through 2025. These developments highlight the pressing need for effective detection and intervention strategies.

Detection and Prevention Methods

As predators become more advanced in their use of AI, the tools to counter them are evolving just as quickly. Detection systems now rely on cutting-edge machine learning to identify threats in real time across multiple languages, aiming to strike a balance between accuracy and minimizing false alarms.

Machine Learning for Threat Detection

Modern detection systems focus on analyzing language patterns and unusual behaviors, such as sudden spikes in messaging activity or interactions at odd hours, to identify potential threats. They’re even capable of spotting when adults attempt to impersonate children in online spaces - a common tactic used to build trust with victims.

These systems go beyond surface-level analysis by tracking patterns that indicate grooming behaviors. For example, they monitor abrupt changes in communication frequency, irregular contact timings, and other anomalies that signal predatory intent. Machine learning models also scrutinize conversations to determine if they show signs of exploitation attempts.
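As a rough illustration of the two behavioral signals described above (a sudden jump in message frequency and contact at unusual hours), here is a minimal Python sketch. It is not how any particular product works: the `Message` type, the function names, the z-score threshold of 3.0, and the 23:00 to 05:00 window are all assumptions chosen for the example, and a flag from logic like this would only be a prompt for human review.

```python
from dataclasses import dataclass
from datetime import datetime
from statistics import mean, pstdev


@dataclass
class Message:
    """Hypothetical message record: who sent it and when."""
    sender: str
    sent_at: datetime


def daily_counts(messages, sender):
    """Count one sender's messages per calendar day, in date order.

    Days with no messages are skipped, which is a simplification.
    """
    counts = {}
    for m in messages:
        if m.sender == sender:
            day = m.sent_at.date()
            counts[day] = counts.get(day, 0) + 1
    return [counts[d] for d in sorted(counts)]


def has_frequency_spike(counts, z_threshold=3.0):
    """Flag the latest day if it sits far above the earlier baseline."""
    if len(counts) < 4:
        return False  # too little history to form a baseline
    baseline, latest = counts[:-1], counts[-1]
    spread = pstdev(baseline) or 1.0  # avoid dividing by zero on a flat baseline
    return (latest - mean(baseline)) / spread >= z_threshold


def odd_hour_share(messages, sender, start_hour=23, end_hour=5):
    """Fraction of a sender's messages sent between start_hour and end_hour."""
    hours = [m.sent_at.hour for m in messages if m.sender == sender]
    if not hours:
        return 0.0
    late = [h for h in hours if h >= start_hour or h < end_hour]
    return len(late) / len(hours)
```

A production system would combine signals like these with language analysis rather than rely on any single one, since a spike in late-night messages is also perfectly normal between friends.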

The volume of reports shows why automated detection matters. Over just six months, reports of child sexual exploitation linked to generative AI rose from 6,835 to 440,419. To handle this surge, law enforcement and child safety organizations are using AI tools to identify victims and offenders in abuse material through facial recognition, voice analysis, and digital pattern matching.

These advancements are paving the way for platforms like Guardii, which can intervene in real time to stop predators in their tracks.

Guardii's Protection Platform

Guardii.ai offers a robust AI-powered protection system tailored for moderating Instagram comments and direct messages. With support for over 40 languages, the platform is designed to serve a global audience - an essential feature since predators often operate across borders.

Unlike basic keyword filters, Guardii uses advanced AI to analyze the context of conversations in real time. This means it can distinguish between harmless exchanges and genuinely concerning interactions, significantly reducing false alarms. This contextual understanding is crucial because predators often build trust through seemingly innocent communication before escalating their behavior.

When a potential threat is detected, Guardii removes the harmful content from the child’s view and quarantines it for further review. The platform provides continuous monitoring, organizing flagged content into Priority and Quarantine queues. This ensures that severe threats - like explicit solicitations or blackmail - get immediate attention.
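The hide-then-route flow described above can be pictured with a small Python sketch. This is an assumption-laden illustration, not Guardii's implementation: the class names, the `hidden_from_child` flag, and the category labels in `SEVERE_CATEGORIES` are hypothetical, and the category itself is assumed to come from an upstream classifier.

```python
from dataclasses import dataclass, field
from enum import Enum


class Queue(Enum):
    PRIORITY = "priority"
    QUARANTINE = "quarantine"


# Hypothetical labels for the severe threats the text mentions.
SEVERE_CATEGORIES = {"explicit_solicitation", "blackmail"}


@dataclass
class FlaggedItem:
    item_id: str
    category: str  # assumed to be assigned by an upstream classifier
    hidden_from_child: bool = False


@dataclass
class ReviewQueues:
    priority: list = field(default_factory=list)
    quarantine: list = field(default_factory=list)

    def triage(self, item: FlaggedItem) -> Queue:
        """Hide the item from view first, then route it by severity."""
        item.hidden_from_child = True
        if item.category in SEVERE_CATEGORIES:
            self.priority.append(item)
            return Queue.PRIORITY
        self.quarantine.append(item)
        return Queue.QUARANTINE
```

Hiding the content before routing it means the child is shielded even if reviewers take time to reach the item; the two queues only decide how quickly a human looks at it.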

Guardii isn’t just for families; it also helps safeguard public figures like athletes, influencers, and journalists by auto-hiding toxic comments, aligning with Meta’s policies. Additionally, the platform creates detailed evidence packs and audit logs, which are invaluable for legal teams when pursuing action against offenders.

As of December 2025, Guardii has a waitlist of 1,107 families and actively protects 2,657 children across 14 countries, with a reported effectiveness rate of 100%. Its continuous learning system adapts to new tactics, staying one step ahead as predators evolve their methods.

But technology alone isn’t enough. Educating families and children through AI simulations adds another critical layer of defense.

Training Programs Using AI Simulations

Detection systems are powerful, but education is just as important. AI chatbots are now being used in training programs to simulate predator interactions in controlled environments, helping children and families recognize manipulation tactics before they encounter them online.

These simulations recreate common grooming behaviors, such as excessive flattery, requests for personal information, attempts to isolate a child from their support network, and the gradual introduction of inappropriate content. By experiencing these scenarios in a safe setting, children learn to identify red flags and differentiate between harmless and potentially dangerous interactions.

Parents and educators also benefit from these programs. AI-driven simulations help them understand how offenders exploit technology, enabling them to better guide and protect their children. These training tools provide practical insights into how predators operate, making it easier to spot warning signs in real-world interactions.

Together, these strategies - detection, prevention, and education - create a comprehensive defense against the growing threat of AI-enabled predatory behavior. By combining cutting-edge technology with awareness training, we can better protect children and empower families to navigate the digital world safely.

Legal and Industry Responses

The surge in AI-generated child sexual abuse material has spurred swift action from governments, law enforcement agencies, and tech companies around the globe. Reports reveal a troubling rise in these cases, prompting measures such as new legislation, international enforcement operations, and enhanced collaboration among key stakeholders.

Law Enforcement and Regulatory Actions

The United Kingdom has introduced groundbreaking legislation aimed at addressing AI-generated child sexual abuse material at its origin. This law empowers officials to designate AI developers and child protection organizations as authorized testers. These groups can examine AI models to ensure safeguards are in place to prevent the creation or spread of illegal content, such as explicit images and videos involving children.

Additionally, the UK government is forming a specialized group of experts in AI and child safety. This team will design protective measures, safeguard sensitive data, minimize the risk of illegal content leaks, and provide support to researchers involved in this critical work.

The National Center for Missing & Exploited Children (NCMEC) began tracking generative AI in 2023 and has since recorded a sharp increase in cases of child exploitation. Predators are now using AI tools to create explicit images by manipulating publicly available photos of children, which are then weaponized for blackmail.

On an international scale, enforcement efforts are intensifying. Europol recently coordinated a large-scale operation involving authorities from 19 countries, resulting in 25 arrests tied to AI-generated child sexual exploitation material.

Legal experts are advocating for updates to traditional child pornography laws so that they explicitly cover AI-generated images. They warn that viewing such material, whether real or synthetic, can lead people who have never abused children to do so, with some studies estimating that this applies to as many as 41.8% of users. Beyond the harm of its creation, AI-generated content poses broader societal risks by normalizing abusive behavior.

This growing issue also presents unique challenges for law enforcement. The sheer volume of AI-generated content, often indistinguishable from real abuse material, makes it harder to identify cases requiring victim rescue. Investigators must also contend with the rapid pace at which AI can produce new material, straining already limited resources.

In addition to government action, technology companies are stepping up to enhance online safety.

Tech Company and Safety Organization Partnerships

AI developers are collaborating with child protection organizations under structured testing frameworks to prevent AI misuse in generating synthetic abuse material. The Internet Watch Foundation plays a key role in these efforts, examining AI models and enforcing necessary safeguards.

Social media platforms are also taking steps to protect minors. Many now include automatic privacy settings for users under 18, such as limiting messaging access and filtering inappropriate content.

Tools like Guardii provide additional support by securely storing suspicious content for legal review and offering user-friendly reporting options for serious threats. Meanwhile, parents, educators, and communities are encouraged to stay alert and engage in conversations with children about online risks. The NCMEC has described the rise in AI-related exploitation as "a wake-up call", urging society to act collectively.

These partnerships emphasize the need for unified standards in AI safety.

Standards for Safe AI Development

As AI continues to advance, legal and industry leaders face the challenge of keeping up with its misuse by predators. While evidence confirms that AI is being exploited for child sexual offenses - such as creating deepfakes and automating cyber-grooming - there’s still a lack of comprehensive studies on the scope of these practices.

This gap highlights the need for ethical guidelines and technical standards. Guardii’s approach to online safety, for example, emphasizes a "Child-Centered Design" philosophy that prioritizes children's digital wellbeing while respecting their autonomy and privacy.

Developers are tasked with creating systems that can accurately detect threats without overwhelming law enforcement with false alarms or missing critical cases. To address this, agencies and child protection organizations are employing advanced AI-based detection tools. These include facial and voice recognition, analysis of website patterns, language monitoring in online chats, and chatbots designed to identify individuals with a potential interest in abuse material. However, the rapid creation of AI-generated content continues to outpace these efforts, complicating the fight against exploitation.
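The tension between false alarms and missed cases is commonly expressed as precision (how many flagged items are genuine threats) and recall (how many genuine threats get flagged). The Python sketch below shows how moving a detection threshold trades one for the other. The scores and labels are made up purely for illustration and do not come from any real system or from the reports cited in this article.

```python
def precision_recall(scores, labels, threshold):
    """Return (precision, recall) when items scoring >= threshold are flagged."""
    flagged = [s >= threshold for s in scores]
    tp = sum(1 for f, y in zip(flagged, labels) if f and y)
    fp = sum(1 for f, y in zip(flagged, labels) if f and not y)
    fn = sum(1 for f, y in zip(flagged, labels) if not f and y)
    # By convention here, an empty denominator counts as a perfect score.
    precision = tp / (tp + fp) if (tp + fp) else 1.0
    recall = tp / (tp + fn) if (tp + fn) else 1.0
    return precision, recall


# Made-up model scores and ground-truth labels (True = genuine threat).
scores = [0.95, 0.80, 0.65, 0.40, 0.30, 0.10]
labels = [True, True, False, True, False, False]

for threshold in (0.3, 0.5, 0.9):
    p, r = precision_recall(scores, labels, threshold)
    print(f"threshold={threshold}: precision={p:.2f}, recall={r:.2f}")
```

On this toy data, a low threshold catches every genuine threat but flags more harmless items, while a high threshold produces no false alarms but misses most threats. Choosing the operating point is a policy decision as much as a technical one.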

As technology evolves, the urgency to establish comprehensive standards grows. These standards must not only address the technical challenges of AI but also consider the ethical responsibilities tied to its development, ensuring that child safety remains the top priority in every decision.

Conclusion

The Need for Early Action

The numbers paint a chilling picture. Reports of AI-generated child sexual abuse material more than doubled, from 199 in 2024 to 426 in 2025. Meanwhile, reports of generative AI-related exploitation in the U.S. surged from 6,835 to 440,419 in just six months. This sharp increase highlights a troubling shift in how predators operate. They no longer rely on lengthy grooming processes when they can instantly create synthetic abuse material using publicly available photos from social media, school events, or community postings.

Even more concerning, only 12% of reported cases lead to prosecution. As John Shehan, Vice President at the National Center for Missing & Exploited Children, explains, "Predators don't need to be in the same room. The internet brings them right into a child's bedroom".

The most vulnerable groups face the gravest dangers. Girls account for 94% of AI-generated abuse victims, and the exploitation of infants is on the rise - images of children aged 0–2 years soared from 5 in 2024 to 92 in 2025. With children receiving their first smartphones at an average age of 10 and 1 in 7 experiencing unwanted contact from strangers online, the urgency for action cannot be overstated.
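The increases quoted in this section are easier to compare on a common scale. The short Python sketch below converts each before-and-after pair into a fold increase and a percentage increase. It uses only the figures stated in the text; the `growth` helper and the labels are names chosen for this example.

```python
def growth(before, after):
    """Return (fold_increase, percent_increase) between two counts."""
    fold = after / before
    return fold, (fold - 1) * 100


# Before/after pairs exactly as quoted in the article.
figures = {
    "U.S. generative-AI exploitation reports (six months)": (6_835, 440_419),
    "AI-generated abuse reports, 2024 to 2025": (199, 426),
    "Images of children aged 0-2, 2024 to 2025": (5, 92),
}

for label, (before, after) in figures.items():
    fold, pct = growth(before, after)
    print(f"{label}: {fold:.1f}x ({pct:,.0f}% increase)")
```

Expressed this way, the U.S. figure is a roughly 64-fold rise, while the other two are roughly 2-fold and 18-fold. The very small starting counts for the youngest age group mean that particular ratio should be read with caution.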

Stephen Balkam, CEO of the Family Online Safety Institute, put it bluntly: "Unfiltered internet is like an unlocked front door. Anyone can walk in". To counteract this growing threat, organizations must adopt proactive and comprehensive monitoring systems. Relying on reactive measures will always leave children vulnerable in a world where AI can produce harmful material at an alarming rate.

Given the evolving tactics of predators, immediate and sophisticated protective measures are not just necessary - they’re critical.

Guardii's Approach to Online Safety

Given these alarming trends, combating AI-enabled predatory behavior requires a multi-layered defense strategy that evolves alongside digital threats. Guardii.ai steps into this space with real-time solutions designed to protect users where they are most vulnerable. By moderating Instagram comments and direct messages in over 40 languages, Guardii automatically hides toxic content while staying compliant with Meta’s guidelines. This is particularly crucial as predators increasingly use social media to extract images for deepfake creation or to initiate contact.

Guardii’s platform goes further with advanced direct message threat detection, capable of identifying sexual harassment and blackmail attempts in real time. Its Priority and Quarantine queue systems allow safety teams to focus on the most severe threats first, ensuring that dangerous interactions are addressed quickly, even amidst high volumes of social media activity.

Another critical feature is Guardii’s evidence collection and audit log system. By securely preserving suspicious content and offering user-friendly reporting tools, the platform provides documented records that can support legal action when needed.

This approach is invaluable for sports clubs, athletes, influencers, journalists, and families alike. As Susan McLean, Cyber Safety Expert at Cyber Safety Solutions, aptly noted, "Kids are tech-savvy, but not threat-savvy. They need guidance, not just gadgets". Guardii’s advanced AI tools cut through the noise, enabling safety teams to focus on genuine threats while also safeguarding brand reputation and the well-being of content creators.

The stakes are high. Online grooming cases have risen by more than 400% since 2020, and sextortion cases have increased by over 250% during the same period. To keep pace with these escalating threats, organizations must deploy equally advanced AI-driven protection. Guardii’s solutions provide a much-needed shield in the fight to keep children and vulnerable individuals safe in an increasingly digital world.

FAQs

How can parents protect their children from AI-enabled online predators?

Parents can take proactive steps to protect their children from online predators by using AI-driven tools designed to monitor and manage social media activity. These tools can automatically identify and block harmful content, flag questionable direct messages, and isolate inappropriate material for parental review.

With these technologies in place, families benefit from an added layer of around-the-clock protection against the increasing dangers of online exploitation. This approach not only helps keep children safe but also keeps parents informed and ready to intervene when needed.

What difficulties do law enforcement agencies face when prosecuting cases involving AI-driven exploitation?

Law enforcement is grappling with a host of challenges as it confronts AI-driven exploitation. One major issue is that predators are using AI tools to produce highly convincing fake content, such as deepfakes or altered images. This makes verifying the authenticity of evidence and proving intent incredibly difficult. On top of that, AI-generated content can spread at lightning speed across multiple platforms, making it harder to trace its origins and hold perpetrators accountable.

Adding to the complexity is the absence of consistent laws and regulations governing AI misuse. Many legal systems are still playing catch-up with the rapid pace of AI advancements, leaving critical gaps that complicate the prosecution of these cases. To tackle these issues effectively, collaboration is key. Tech companies, legal experts, and safety organizations need to work hand-in-hand to close these gaps and create a safer digital environment.

How are predators using AI chatbots to target victims, and what can be done to prevent this?

Predators are finding new ways to exploit AI chatbots, using them to create manipulative messages, mimic trusted individuals, and prey on personal vulnerabilities. These tools enable them to craft convincing, personalized interactions, making it easier to deceive and groom victims online.

To address this growing threat, AI-driven moderation tools offer a powerful line of defense. These tools can automatically identify and block harmful comments, flag inappropriate or threatening messages, and maintain evidence logs to assist legal and safety teams. By utilizing cutting-edge technology, we can take meaningful steps toward safeguarding vulnerable individuals and fostering safer online environments.