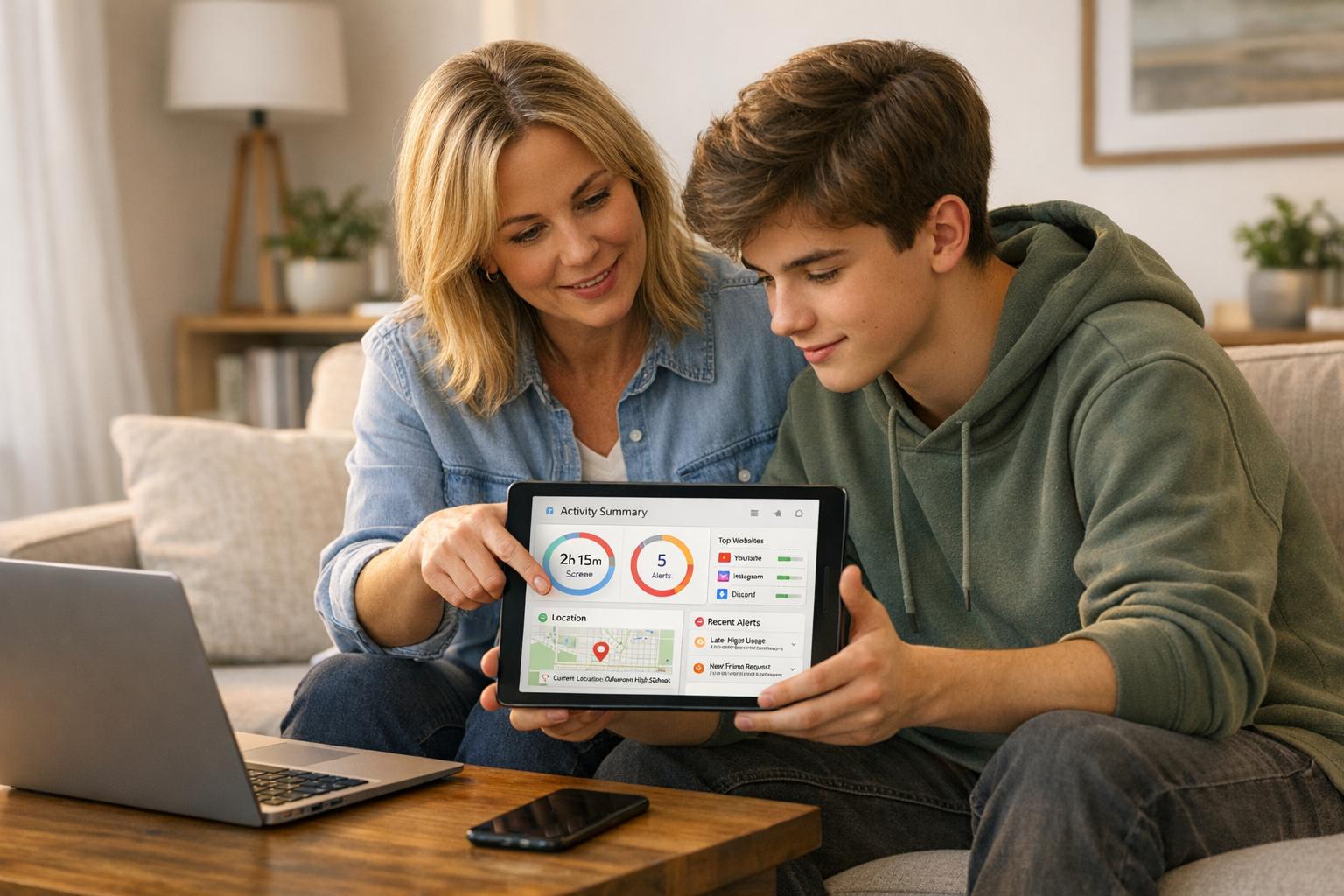

Compare top parental monitoring apps, AI alerts, content filters, and device or network controls to protect kids online while preserving privacy.

How AI moderation detects grooming, cyberbullying, and threats in real time across DMs, comments, and gaming chats—paired with human oversight.

AI tracks conversation trajectories to spot grooming and protect users by flagging risky DMs, hiding harmful content, and compiling legal-ready evidence.

Breaks down privacy, bias, and breach risks of biometric age checks and recommends on-device processing, cryptographic proofs, and data-minimizing designs.

Step-by-step guidance to document evidence, contact authorities first, report safely to platforms, and support victims of online predatory behavior.

Overview of U.S. child protection laws, mandatory reporter duties, reporting timelines, documentation standards, state registries, confidentiality, and compliance.

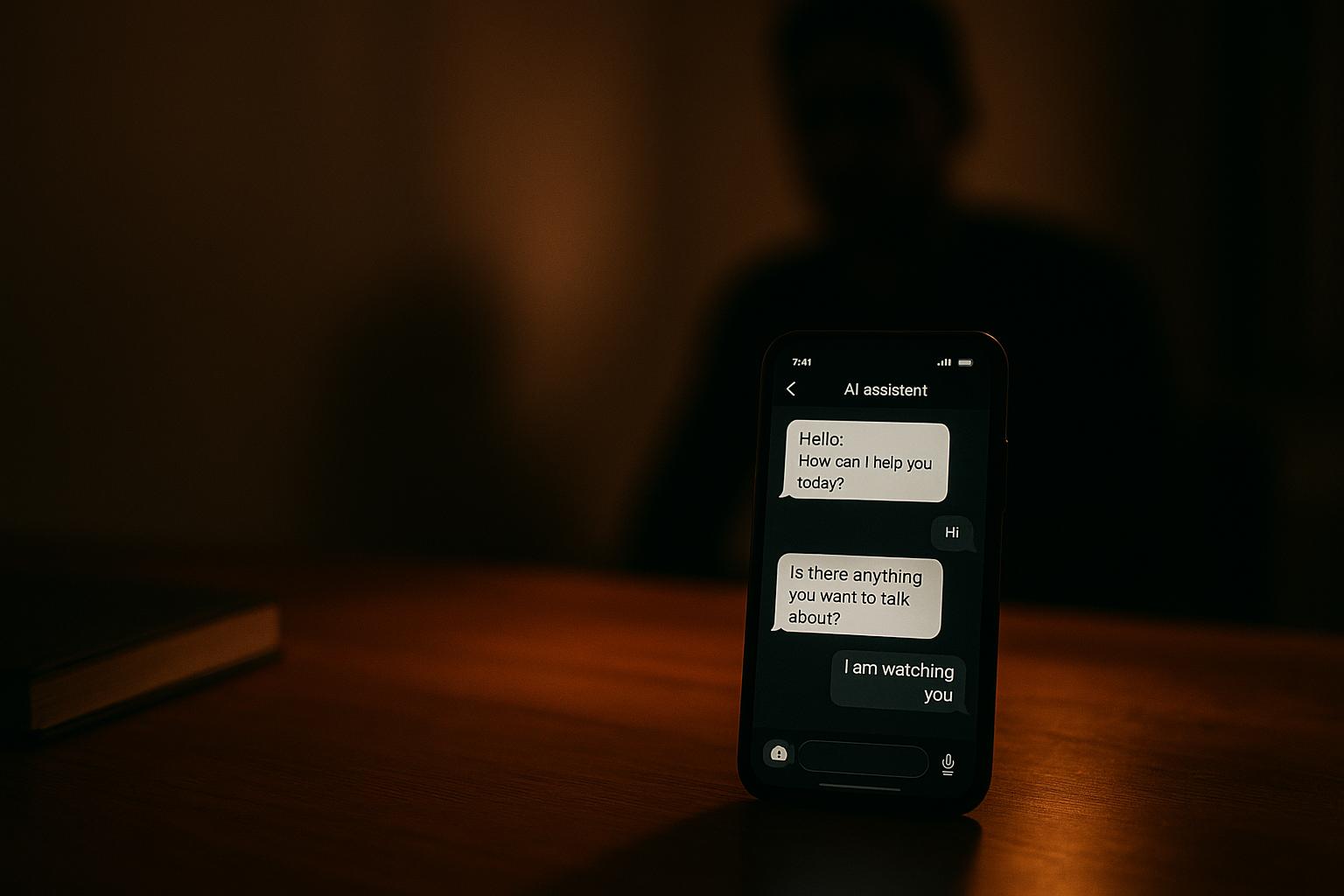

Predators use AI—deepfakes, chatbots and mass targeting—to groom, blackmail and scale child exploitation, straining law enforcement and demanding better detection.

AI now detects cyberflashing in direct messages, providing real-time protection against unsolicited explicit content.

AI tools are revolutionizing online safety by detecting predatory behavior in real time, helping protect children from online threats.

AI platforms provide real-time protection against digital threats for children, athletes, and creators.