AI Moderation: Protecting Kids from Online Harassment

Online harassment against kids is escalating rapidly, with threats like grooming, sextortion, and cyberbullying becoming widespread. AI moderation offers a scalable solution to detect and mitigate harmful content in real time, especially in spaces like private messages, gaming chats, and public comment sections. Here's what you need to know:

- Why AI is necessary: Manual moderation can't keep up with the volume of posts and messages. AI analyzes millions of interactions instantly, flagging risks like grooming or explicit content.

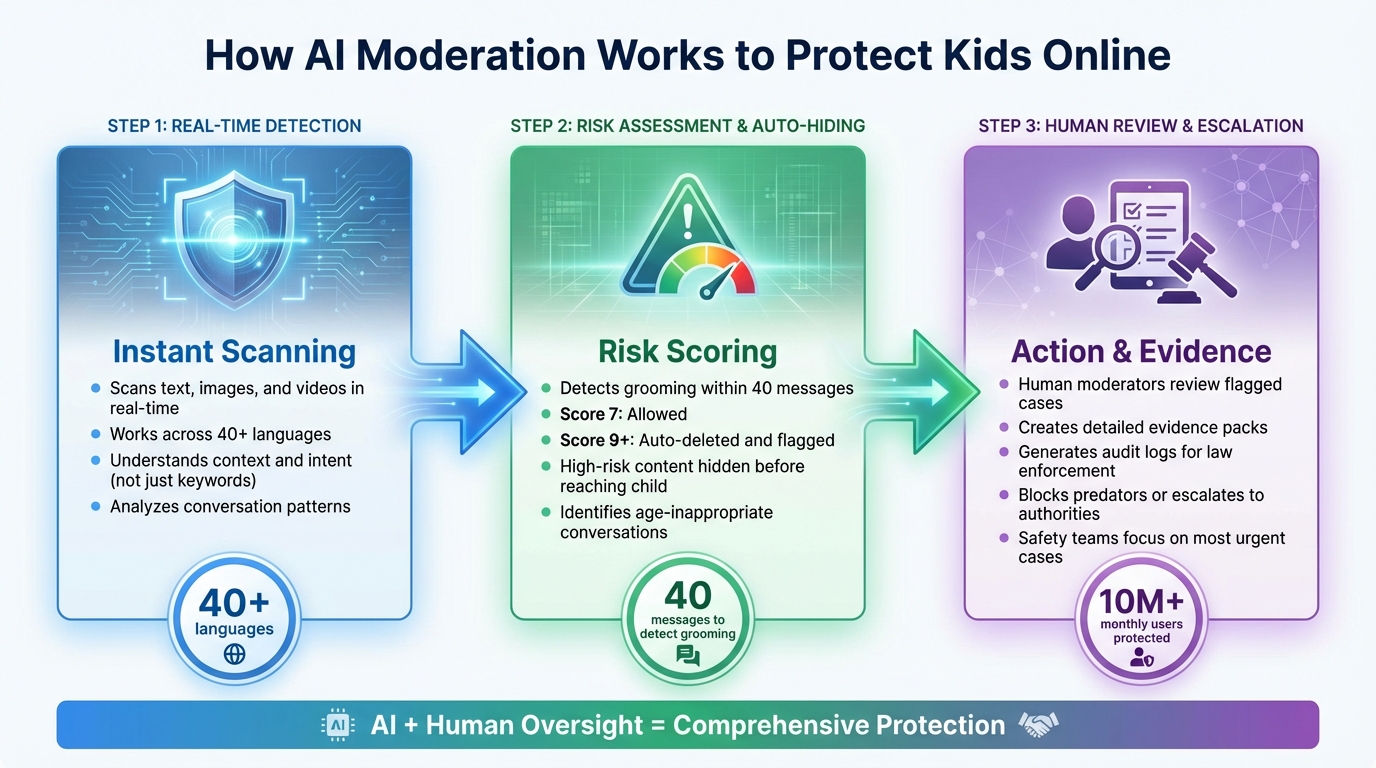

- How it works: AI tools use natural language processing and computer vision to detect harmful patterns, auto-hide content, and alert human moderators for complex cases.

- Key benefits: Platforms like Guardii moderate across 40+ languages, detect grooming within 40 messages, and create evidence packs for law enforcement.

- Challenges: Balancing protection without over-blocking and addressing coded language or evolving tactics from offenders.

To safeguard children online, platforms must pair AI with human oversight, establish clear safety policies, and implement tools like Guardii to monitor public and private interactions effectively. This hybrid approach ensures quick intervention while protecting privacy and maintaining trust.

The Problem: Scale and Complexity of Online Harassment

Where Kids Face Harassment: Public Comments, DMs, and Gaming Platforms

Children face harassment in three main digital spaces: social media comment sections, private messages (DMs), and online gaming platforms. Each of these environments presents unique risks. On public posts, harassment often takes the form of name-calling, slurs, body shaming, and coordinated attacks in comment threads that can quickly spiral out of control and go viral. In gaming, the abuse is often live and immediate, delivered through voice or text chats. Kids may encounter insults, hate speech, doxxing, or even sexual comments, frequently from strangers or older players during matches.

Private messages, however, represent the most dangerous space. These channels allow abuse to become highly targeted, continuous, and hidden from both parents and public scrutiny. Here, children are especially vulnerable to threats like coercion and sexualized abuse. Offenders are constantly adapting their methods, even using AI to create deepfakes and other synthetic abuse material that is harder to detect. A single individual can also use bots to mimic a coordinated attack across platforms. This variety of risks highlights the urgent need for scalable and specialized AI tools to address the problem.

Challenges of Volume, Multiple Languages, and Detection Gaps

The sheer volume of posts, comments, and messages - often written in multiple languages and filled with evolving slang - can overwhelm human moderators. Offenders exploit loopholes by using coded language, creative spellings, and symbols to bypass filters.
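One common way to narrow this gap is to normalize text before matching, so that creative spellings collapse to a canonical form. The sketch below is illustrative only: the substitution table, the blocklist, and the function names are hypothetical and not taken from any specific product, and rules like these are a complement to context-aware models rather than a replacement for them.

```python
import re
import unicodedata

# Hypothetical substitution table: common character swaps used to dodge filters.
LEET_MAP = str.maketrans(
    {"0": "o", "1": "i", "3": "e", "4": "a", "5": "s", "7": "t", "@": "a", "$": "s"}
)

# Hypothetical blocklist, for illustration only.
BLOCKLIST = {"loser", "idiot"}


def normalize(text: str) -> str:
    """Reduce creative spellings to a canonical form before matching."""
    # Fold compatibility characters (e.g. fullwidth letters) and strip accents.
    text = unicodedata.normalize("NFKD", text)
    text = "".join(ch for ch in text if not unicodedata.combining(ch))
    text = text.lower().translate(LEET_MAP)
    # Remove separators wedged between letters, such as "l.o.s.e.r".
    text = re.sub(r"(?<=\w)[.\-_*](?=\w)", "", text)
    # Collapse runs of three or more repeated characters ("looooser" -> "loser").
    text = re.sub(r"(.)\1{2,}", r"\1", text)
    return text


def contains_blocked_term(text: str) -> bool:
    """Check the normalized text against the blocklist, word by word."""
    words = re.findall(r"[a-z]+", normalize(text))
    return any(word in BLOCKLIST for word in words)
```

Normalization of this kind is approximate: it can merge words that were never meant to be joined, and it does nothing for coded language that uses ordinary words with hidden meanings, which is where model-based detection comes in.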

Most moderation systems today are reactive, relying on user reports. By the time harmful content is flagged, the damage is often done - whether through rapid sharing, screenshots, or escalation. Children may hesitate to report abuse due to fear, shame, or simply because they don’t recognize behaviors like grooming for what they are. Simple keyword filters and limited human oversight are not enough to tackle these challenges effectively.

Hidden Threats in Private Messages and Group Chats

Private messaging and group chats are especially vulnerable to undetected abuse. These spaces give offenders direct, unsupervised access to children, making it easier to groom, manipulate, and coerce without any public witnesses. Groomers often take a slow and calculated approach, building trust, testing boundaries, and escalating from casual conversations to explicit or exploitative requests. This gradual process makes it harder for children to recognize or report the abuse.

AI tools can play a crucial role in spotting grooming patterns in these private spaces. For example, they can detect age-inappropriate conversations, repeated attempts to test boundaries, and escalating sexual content. Without real-time AI scanning, much of this abuse remains hidden until it’s too late. Since parents, educators, and even platform safety teams rarely see this content unless flagged by automated systems, relying solely on manual moderation leaves children dangerously exposed.

The Solution: How AI Moderation Protects Kids

Real-Time Detection and Auto-Hiding of Harmful Content

AI moderation uses machine learning models to scan text, images, and videos in near real time, aiming to identify harmful content before it reaches a child’s screen. When content crosses a set risk threshold, it’s automatically hidden and flagged for review. These systems work across multiple languages, making them effective in diverse online spaces. Unlike simple keyword filters, modern models weigh context and intent, which helps them differentiate between casual teen slang and actual threats or hate speech. This quick response not only blocks harmful material but also sets the stage for identifying more severe risks like grooming or harassment.

Detecting Grooming and Sexualized Harassment

AI tools built to detect grooming analyze conversation patterns to spot red flags - such as questions about a child’s age, requests for secrecy, or attempts to move chats to private platforms. Studies show these systems can flag suspicious interactions within the first few dozen messages. Research also highlights their ability to work through large volumes of harmful content, including material tied to child sexual abuse, by assessing risky behavior and interactions. Once a risk threshold is reached, the system may delete harmful messages, limit an adult’s ability to contact minors, or alert human moderators or authorities. These measures allow for early intervention before abuse escalates.
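To make the idea of conversation-level detection concrete, here is a minimal sketch that accumulates red-flag signals across a whole conversation instead of judging each message in isolation. Everything in it is an assumption for illustration: the regex patterns, the weights, the threshold, and the `ConversationMonitor` name are hypothetical, and production systems rely on trained models rather than hand-written rules.

```python
import re
from dataclasses import dataclass, field

# Hypothetical signal patterns and weights, for illustration only.
SIGNALS = {
    "age_question": (re.compile(r"\bhow old are (you|u)\b", re.I), 1.0),
    "secrecy": (
        re.compile(r"\b(our secret|don'?t tell (anyone|your (mom|dad|parents)))\b", re.I),
        2.0,
    ),
    "platform_switch": (re.compile(r"\b(add me on|message me on|move to)\b", re.I), 1.5),
}
ALERT_THRESHOLD = 3.0  # assumed value; would need tuning against labeled data


@dataclass
class ConversationMonitor:
    """Accumulates red-flag signals across a whole conversation."""

    score: float = 0.0
    seen: set = field(default_factory=set)
    messages_seen: int = 0

    def observe(self, message: str) -> bool:
        """Record one message; return True once the conversation needs review."""
        self.messages_seen += 1
        for name, (pattern, weight) in SIGNALS.items():
            # Count each signal type once, so repeating a single phrase
            # cannot trigger an alert on its own.
            if name not in self.seen and pattern.search(message):
                self.seen.add(name)
                self.score += weight
        return self.score >= ALERT_THRESHOLD
```

In this sketch a single age question does not raise an alert, but an age question followed later by a request for secrecy does, which mirrors the gradual escalation that makes grooming hard to spot message by message.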

Guardii's Approach: Complete Moderation for Young Audiences

Guardii takes these detection tools a step further, tailoring its AI moderation specifically for young users. Beyond auto-hiding harmful content, Guardii simplifies alert workflows for safety teams, making it easier to address risks quickly. Designed to protect athletes, influencers, journalists, and families, Guardii automatically moderates Instagram comments and direct messages in over 40 languages. This is particularly valuable for U.S.-based youth athletes and young creators with global audiences. The platform identifies threats and sexualized harassment in direct messages, prioritizing the most severe cases for rapid review by safety teams. For ongoing or extreme incidents, Guardii creates detailed evidence packs and audit logs, which can be shared with legal or law enforcement agencies. This structured approach allows smaller safety teams to focus on the most urgent cases, ensuring that harmful content is stopped before it can harm children.
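The article does not describe what Guardii's evidence packs actually contain, so the following is only a generic sketch of the idea: bundle the flagged messages with a timestamp and a content digest so that later tampering can be detected. The field names and both function names are hypothetical.

```python
import hashlib
import json
from datetime import datetime, timezone


def _digest(messages: list[dict]) -> str:
    # Canonical JSON (sorted keys, fixed separators) keeps the digest reproducible.
    canonical = json.dumps(
        messages, sort_keys=True, separators=(",", ":"), ensure_ascii=False
    )
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def build_evidence_pack(case_id: str, messages: list[dict]) -> dict:
    """Bundle flagged messages with a digest so later tampering is detectable."""
    return {
        "case_id": case_id,
        "created_at": datetime.now(timezone.utc).isoformat(),
        "messages": messages,
        "sha256": _digest(messages),
    }


def verify_evidence_pack(pack: dict) -> bool:
    """Recompute the digest and compare it with the stored one."""
    return _digest(pack["messages"]) == pack["sha256"]
```

A real deployment would also need access controls, retention rules, and a documented chain of custody, all of which are outside the scope of this sketch.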

Best Practices: How to Implement AI Moderation in Communities

Setting Clear Safety Standards for Youth Programs

To create safer online spaces, organizations need to define clear rules about unacceptable behaviors like grooming language, cyberbullying, sexual harassment, hate speech, and threats. These policies should include specific phrases or patterns to help train AI tools effectively. For example, one AI system trained on 28,000 predator conversations can identify grooming patterns in as few as 40 messages. For U.S. youth programs - whether it's sports teams or creator communities on platforms like Instagram - these standards should align with platform policies and federal child safety laws. By doing so, organizations establish a strong starting point for moderation strategies that can adapt to different online environments.

Balancing Protection with Avoiding Over-Blocking

The goal is balance: moderation that is too strict blocks legitimate conversations, while moderation that is too lenient misses harmful content. One solution is a graduated risk scoring system, in which high-risk content is automatically hidden and medium-risk messages are flagged for human review. For instance, a platform with 10 million monthly youth users uses this method: messages scoring around 7 are allowed, but those scoring 9 or higher are automatically removed and sent for review. Regular audits of false positives and missed cases are crucial to maintain trust in the system and to keep review queues manageable. Additionally, using tools that support over 40 languages helps ensure inclusivity for diverse U.S. communities.
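A graduated scheme like this can be sketched in a few lines. The example assumes a 0-10 risk scale; the auto-hide cut-off of 9 mirrors the example above, while the review cut-off of 8 is a hypothetical value, since the text does not say where "medium risk" begins. The `Action` and `route` names are illustrative.

```python
from enum import Enum


class Action(Enum):
    ALLOW = "allow"
    REVIEW = "flag_for_human_review"
    HIDE = "auto_hide_and_review"


# Assumed 0-10 scale. HIDE_AT mirrors the example in the text; REVIEW_AT is
# a hypothetical cut-off for where medium risk starts.
HIDE_AT = 9.0
REVIEW_AT = 8.0


def route(score: float, hide_at: float = HIDE_AT, review_at: float = REVIEW_AT) -> Action:
    """Map a risk score to a moderation action."""
    if not 0.0 <= score <= 10.0:
        raise ValueError(f"score out of range: {score}")
    if score >= hide_at:
        return Action.HIDE
    if score >= review_at:
        return Action.REVIEW
    return Action.ALLOW
```

Keeping the thresholds as parameters makes it straightforward to tighten them for younger audiences or loosen them when audits show too many legitimate messages being held back.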

Combining AI with Human Review and Escalation Protocols

AI alone isn’t enough - human oversight plays a critical role in understanding context and nuance. While AI can process vast amounts of data quickly, human moderators step in to assess flagged interactions. The most effective systems use a tiered escalation process: AI detects potential issues and quarantines high-risk messages, human reviewers confirm the context, and serious cases are escalated through predefined channels. Along the way, the system can create detailed evidence packs, which are reviewed by safety teams or shared with law enforcement if necessary. In programs like youth sports clubs that use tools like Guardii, structured alerts allow coaches and safety staff to act quickly, so that predators are blocked or authorities are notified promptly, with the necessary documentation ready for legal or law enforcement teams.
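The tiered process can be sketched as a priority queue feeding human reviewers, followed by a simple decision step. This is a minimal illustration under stated assumptions, not a description of how Guardii or any other product works: the `ReviewQueue` class, the `resolve` function, and the outcome labels are all hypothetical.

```python
import heapq
import itertools
from typing import Optional


class ReviewQueue:
    """Hands the most severe flagged message to human reviewers first."""

    def __init__(self) -> None:
        self._heap: list = []
        self._counter = itertools.count()  # tie-breaker keeps arrival order

    def add(self, message_id: str, severity: float) -> None:
        # heapq is a min-heap, so severity is negated to pop the highest first.
        heapq.heappush(self._heap, (-severity, next(self._counter), message_id))

    def next_case(self) -> Optional[str]:
        """Return the most severe pending message ID, or None if the queue is empty."""
        return heapq.heappop(self._heap)[2] if self._heap else None


def resolve(message_id: str, reviewer_confirms: bool, is_severe: bool) -> str:
    """Human tiers: a reviewer confirms context, then severe cases escalate."""
    if not reviewer_confirms:
        return f"{message_id}: restored (false positive)"
    if is_severe:
        return f"{message_id}: escalated with evidence pack"
    return f"{message_id}: kept hidden, sender restricted"
```

Ordering the queue by severity is what lets a small safety team spend its limited time on the most urgent cases first, while the explicit "restored" outcome keeps false positives from silently staying hidden.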

Conclusion: Building Safer Online Spaces for Children with AI Moderation

The rise in online grooming and sextortion cases highlights a harsh reality: manual moderation alone can’t keep up with the scale and speed of today’s digital threats. AI-powered moderation is now a critical tool for protecting children and teens, offering real-time detection of cyberbullying, predatory behavior, and harmful content. This is especially crucial in private and public channels, where 80% of grooming incidents are reported to begin. The demand for scalable solutions has made AI an indispensable part of safeguarding young users.

AI works best when paired with human oversight in a tiered escalation system. As mentioned earlier, this hybrid approach bridges the gaps left by manual efforts. While AI can process vast amounts of data and spot patterns quickly, human moderators are essential for handling the complex, context-sensitive cases that require deeper judgment.

To make the most of these tools, youth organizations should adopt AI moderation platforms like Guardii. These tools can automatically hide harmful content, flag sexual harassment, and efficiently preserve evidence for safety teams or legal action. For example, Guardii can auto-hide toxic comments and direct messages on Instagram in over 40 languages, detect harassment and threats, and keep detailed records for follow-up actions. Families can also enhance safety by choosing services with transparent AI tools and having open conversations with children about online risks. This combination offers round-the-clock protection and peace of mind.

To create safer digital spaces, organizations need to audit existing gaps in moderation, implement AI solutions across all platforms, and establish clear protocols for hiding harmful content and escalating serious cases. Whether you’re managing a youth sports team, overseeing a creator community, or safeguarding your own children, the risks of ignoring AI moderation - unchecked harassment, grooming, and exploitation - far outweigh the challenges of responsible implementation. By embracing transparent AI tools, we can rebuild trust in online spaces and create a healthier digital environment where U.S. children can connect, learn, and thrive with greater confidence and support.

FAQs

How does AI moderation identify harmful content without blocking harmless interactions?

AI moderation leverages sophisticated models to evaluate the context, patterns, and intent behind online interactions, moving beyond simple keyword detection. By examining the tone and structure of conversations, it can identify harmful behaviors such as bullying, predatory grooming, or sexual harassment, all while permitting normal, friendly exchanges to thrive.

This method helps reduce both false positives and false negatives, so genuine threats are flagged for action while harmless content stays untouched. Because the models can be retrained and improved over time, AI moderation is a practical tool for safeguarding kids and teens in online spaces.

How do AI moderation tools prevent blocking normal conversations?

AI moderation tools aim to create a middle ground between ensuring safety and promoting open communication. By leveraging context-aware algorithms and continuous learning, these tools can distinguish harmful content from regular conversations. They analyze both the intent and tone of messages, helping to flag truly harmful material while minimizing unnecessary restrictions.

To improve precision, many of these tools come with adjustable sensitivity settings and options for real-time review. This allows communities to tailor moderation to their unique needs, ensuring a secure and respectful space for all users.

How can parents use AI tools to protect their children from online harassment?

Parents now have access to AI-powered tools that can play a crucial role in shielding their children from online harassment. These tools are designed to automatically detect harmful content, keeping an eye on private messages and comments for signs of bullying, predatory behavior, or inappropriate language. Many of them can even auto-hide toxic messages, flag concerning activity for review, and notify parents about potential dangers.

What’s more, many of these AI moderation systems offer customizable settings, allowing parents to tailor protections based on their child’s age and maturity level. This helps strike the right balance between ensuring safety and fostering independence. Alongside these tools, having open, honest conversations with kids about online safety and responsible digital habits can further strengthen these efforts.