AI vs. Traditional Parental Controls for Messaging

Keeping kids safe online is harder than ever. Messaging apps expose children to risks like cyberbullying and online predators, and over 60% of kids aged 8 to 12 interact with strangers online. Parents are torn between two options: AI-driven tools that analyze conversations for threats, and traditional controls that rely on manual filters and time limits.

Here’s the bottom line:

- Traditional tools block specific websites or keywords but often miss subtle risks. They require constant updates and can feel rigid or intrusive, potentially straining parent-child trust.

- AI-powered systems go further by analyzing context, detecting patterns, and flagging real threats like grooming or coded bullying. They adapt as kids grow, balancing safety with privacy.

Quick Overview:

- Traditional Controls: Basic filters, manual setup, and limited flexibility.

- AI Tools: Smart monitoring, real-time alerts, and tailored protection.

Choosing the right tool depends on balancing safety, privacy, and trust. Let’s break down the details.

Standard Parental Controls: Features and Challenges

Key Features of Standard Parental Controls

Standard parental controls rely on manual settings and basic filters to help parents manage their children's online activities. These tools typically include a few essential features designed to keep kids safe in the digital space.

One of the main functions is content filtering, which blocks access to inappropriate websites, apps, or specific features. Parents can manually block certain sites or select from pre-configured categories like violence, adult content, or gambling. This forms the foundation of most traditional parental controls.

Usage controls are another common feature, allowing parents to set time limits for internet access or restrict communication with certain contacts. Many tools include scheduling options, so parents can, for example, block social media during homework time or shut down internet access at bedtime.
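The scheduling rules described above can be sketched as fixed time windows. The example below is a minimal illustration, assuming a hypothetical `BlockWindow` rule and made-up homework and bedtime hours; it is not how Screen Time, Family Link, or Family Safety implement schedules internally.

```python
from dataclasses import dataclass
from datetime import datetime, time


@dataclass(frozen=True)
class BlockWindow:
    """Hypothetical rule: block an app category between start and end each day."""
    category: str  # e.g. "social", or "all" to block everything
    start: time
    end: time

    def covers(self, t: time) -> bool:
        # A window such as 21:00-07:00 crosses midnight, so it wraps around.
        if self.start <= self.end:
            return self.start <= t < self.end
        return t >= self.start or t < self.end


# Example rules a parent might set by hand (assumed hours).
RULES = [
    BlockWindow("social", time(16, 0), time(18, 0)),  # homework time
    BlockWindow("all", time(21, 0), time(7, 0)),      # bedtime
]


def is_blocked(category: str, now: datetime, rules: list[BlockWindow] = RULES) -> bool:
    """Return True if any rule blocks this app category at the given moment."""
    t = now.time()
    return any(r.covers(t) and r.category in (category, "all") for r in rules)


print(is_blocked("social", datetime(2025, 5, 1, 17, 30)))     # True
print(is_blocked("messaging", datetime(2025, 5, 1, 17, 30)))  # False
print(is_blocked("messaging", datetime(2025, 5, 1, 22, 15)))  # True
```

Note that these rules fire purely on the clock; they know nothing about what the child is actually doing at that moment.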

Monitoring tools track activity, such as websites visited, apps used, and communication logs. This gives parents a way to review their child’s browsing history, app usage, and even interactions on social media platforms.

Major tech companies have integrated these features into their ecosystems. For instance, Apple's Screen Time, Google's Family Link, and Microsoft's Family Safety provide built-in parental control options.

In April 2025, Meta introduced Facebook and Messenger Teen Accounts with built-in restrictions for users aged 13 to 17. These accounts automatically limit posts and Stories to "friends only", restrict chatting to approved connections, filter out age-inappropriate content, and send reminders to log off Facebook after 60 minutes of use per day. While these integrated tools offer convenient oversight, they often fall short in addressing the ever-changing online landscape.

Despite these helpful features, standard parental controls come with notable challenges.

Limitations of Standard Tools

Traditional parental controls often lack the flexibility needed to address the dynamic nature of the internet. Their static design creates vulnerabilities that tech-savvy kids and online predators can exploit. For instance, children can find ways to bypass these controls, and the tools frequently fail to keep up with new apps and platforms. Online games, whether accessed via mobile devices or web browsers, are a prime example - they rarely include built-in parental controls or safety measures.

Another significant drawback is the lack of contextual understanding. These tools are not equipped to recognize nuanced online threats like cyberbullying, grooming, or misinformation shared among peers. For example, a predator using seemingly innocent language to gain a child’s trust can easily evade keyword-based filters. Similarly, subtle forms of cyberbullying, such as coded language or inside jokes, often go unnoticed.
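A toy example illustrates the gap. The sketch below assumes a small, hypothetical blocklist: an explicit message is caught, but a grooming-style message made of ordinary words, and an explicit message with one swapped character, both pass.

```python
import re

# A hypothetical, manually maintained blocklist.
BLOCKLIST = {"naked", "nude", "kill", "hate"}


def keyword_flag(message: str) -> bool:
    """Flag a message if any whole word appears on the blocklist."""
    words = re.findall(r"[a-z']+", message.lower())
    return any(word in BLOCKLIST for word in words)


samples = [
    "send me a naked pic",                                 # caught
    "you're so mature for your age. don't tell your mom",  # missed: no listed word
    "send me a n@ked pic",                                 # missed: one swapped character
]
for text in samples:
    print(keyword_flag(text), "|", text)
```

Each workaround (adding misspellings, adding phrases) is yet another manual blocklist update.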

Excessive monitoring can also damage trust between parents and children. Research shows that intrusive surveillance can make kids feel their independence is being stifled. Rachel Ruiz, an Adolescent Mental Health Expert with the AngelQ Advisory Board, explains:

"Most parents feel safer when they're constantly monitoring their child's device, but you risk your instructions becoming background noise by overdoing it."

Over-reliance on strict controls can hinder a child’s ability to learn how to navigate online spaces responsibly. Constant surveillance may even push children to become more secretive, making them less likely to share genuine concerns about online dangers with their parents.

Technical issues are another common problem. Some apps filter content by routing the device’s traffic through a VPN profile, but this filtering setup does not necessarily secure the child’s connection or hide the device’s IP address.

Finally, the manual nature of traditional controls demands significant effort from parents. New apps and platforms often require separate configurations, and overly restrictive settings can block legitimate educational resources or other useful content. This frustration may lead parents - or children - to disable the controls altogether.

The scale of the problem is daunting. With 96% of U.S. teens using the internet daily and 46% online almost constantly, managing online safety is no small task. Add to that the fact that 95% of teens have access to smartphones, 90% to computers, and 83% to gaming consoles, and it becomes clear that manual parental controls are increasingly difficult to maintain effectively.

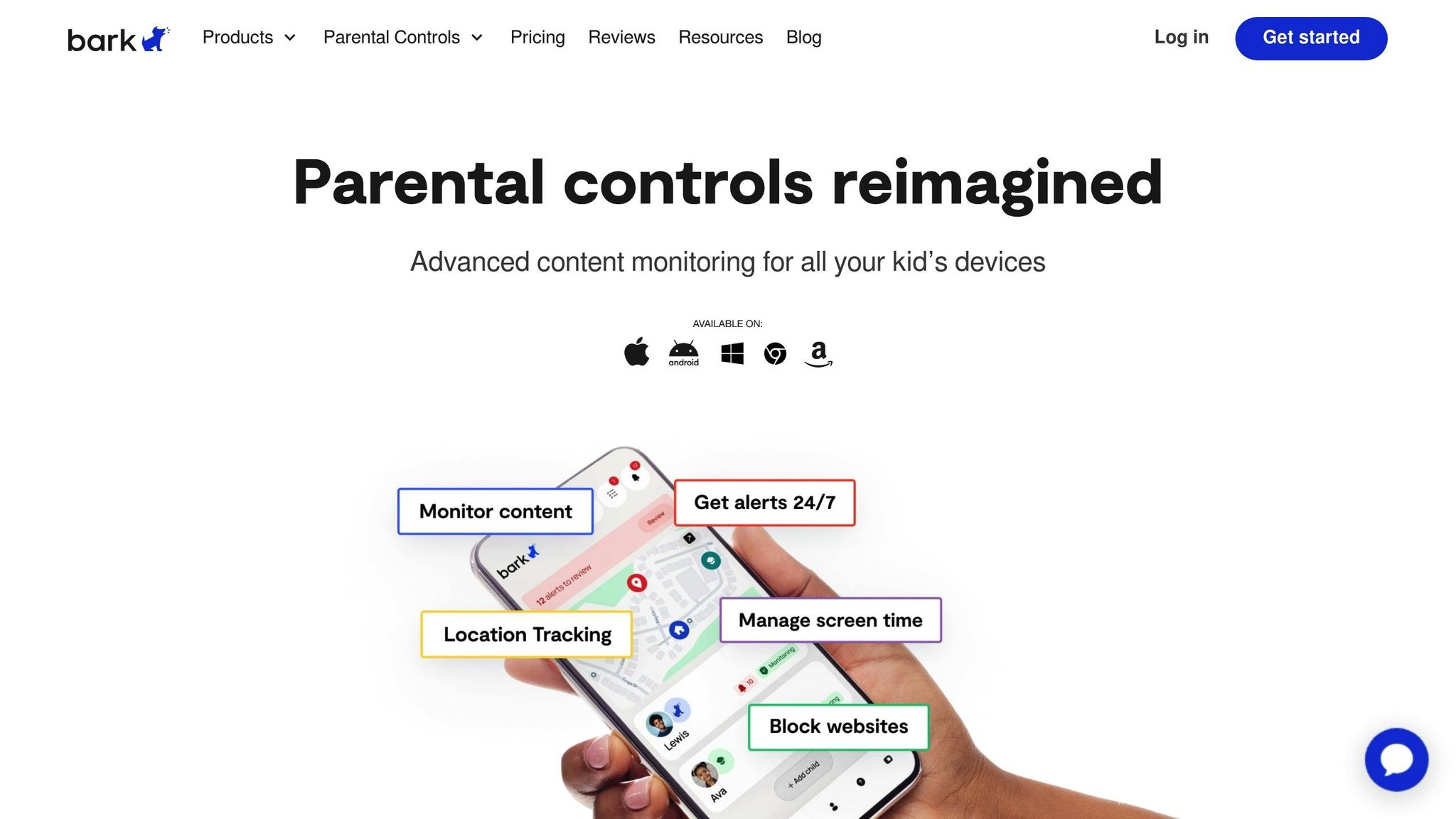

Bark Review: Is It The Best Parental Control For 2025?

AI-Driven Messaging Security: Advanced Capabilities

AI-driven messaging security takes protection to a new level by using machine learning to analyze conversations in context, identifying subtle threats that static filters often miss.

Real-Time Monitoring and Contextual Analysis

Unlike basic keyword detection, AI-powered messaging security systems analyze the tone and context of conversations to differentiate harmless chats from potential risks like grooming or abuse. These systems are trained on a wide range of data, enabling them to recognize concerning language patterns - even when users rely on abbreviations, misspellings, or coded phrases. This reduces false alarms while ensuring genuine threats are flagged. By understanding context, these systems strike a balance between safeguarding privacy and enabling proactive intervention.
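Real products use trained language models, which cannot be reproduced in a few lines. As a rough stand-in, the sketch below uses hand-written regular-expression signals and weights (all hypothetical) and scores a whole conversation rather than single messages, so several individually mild cues can add up. It only illustrates conversation-level context; it is not machine learning and not any vendor's method.

```python
import re
from dataclasses import dataclass

# Hypothetical signals with hand-picked weights. A real system would learn
# patterns like these from training data rather than hard-coding them.
SIGNALS = {
    "secrecy": (re.compile(r"\b(our (little )?secret|don'?t tell|delete (this|these))\b"), 2.0),
    "isolation": (re.compile(r"\b(only i understand you|your parents don'?t get you)\b"), 2.0),
    "move_private": (re.compile(r"\b(snapchat|telegram|dm me|text me on)\b"), 1.5),
    "personal_info": (re.compile(r"\b(how old are you|where do you live|what school)\b"), 1.0),
}
ALERT_THRESHOLD = 3.0  # assumed cut-off


@dataclass
class Assessment:
    score: float
    signals: list[str]

    @property
    def alert(self) -> bool:
        return self.score >= ALERT_THRESHOLD


def assess_conversation(messages: list[str]) -> Assessment:
    """Score the conversation as a whole; each signal type counts once."""
    text = " ".join(m.lower() for m in messages)
    hits = [name for name, (pattern, _) in SIGNALS.items() if pattern.search(text)]
    return Assessment(sum(SIGNALS[name][1] for name in hits), hits)


chat = [
    "how old are you btw?",
    "you're really mature. text me on telegram instead",
    "and don't tell anyone, it's our secret",
]
print([assess_conversation([m]).alert for m in chat])  # [False, False, False]
result = assess_conversation(chat)
print(result.signals, result.score, result.alert)  # ['secrecy', 'move_private', 'personal_info'] 4.5 True
```

No single message crosses the threshold on its own, but the conversation as a whole does, which is the kind of pattern a per-message keyword filter cannot see.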

Privacy, Trust, and Child Independence

One of the standout features of AI-driven solutions is their ability to protect children without invading their privacy. Instead of giving parents unrestricted access to all messages, these systems use intelligent filters to flag only content that requires attention. This approach ensures that normal conversations remain private while potential risks are addressed. Guardii exemplifies this balance, stating, "We believe effective protection doesn't mean invading privacy. Guardii is designed to balance security with respect for your child's development and your parent-child relationship."

These systems also adapt as children grow, gradually adjusting the level of monitoring to encourage responsible online behavior. This graduated approach fosters open communication about safety while respecting a child's need for privacy and independence.

Actionable Alerts and Evidence Preservation

AI-driven messaging security doesn't just flag issues; it gives parents actionable insights. Alerts are concise, offering context and guidance on next steps, while evidence is securely preserved for further action. For instance, Guardii’s platform not only notifies parents about potential risks but also retains critical evidence for intervention. This matters because much predation goes unseen: only 10–20% of online predation incidents are reported, and up to 8 out of 10 grooming cases start in private messaging channels. Online grooming cases have also surged by over 400% since 2020, with sextortion cases rising by more than 250% over the same period.
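One way such an alert could be structured is sketched below, assuming a hypothetical `build_alert` helper. It keeps only the flagged message plus a small window of surrounding messages, and stamps the excerpt with a UTC timestamp and a SHA-256 fingerprint that can later show whether the stored excerpt was changed (assuming the fingerprint itself is stored safely). This is an illustration, not Guardii's implementation, and it does not address chain-of-custody or legal requirements.

```python
import hashlib
import json
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class Alert:
    reason: str
    excerpt: list[str]  # flagged message plus limited context, not the full chat
    captured_at: str    # ISO 8601 timestamp in UTC
    sha256: str         # fingerprint of reason, excerpt, and timestamp


def _fingerprint(reason: str, excerpt: list[str], captured_at: str) -> str:
    payload = json.dumps(
        {"reason": reason, "excerpt": excerpt, "captured_at": captured_at},
        sort_keys=True,
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def build_alert(messages: list[str], flagged_index: int, reason: str, context: int = 1) -> Alert:
    """Keep only the flagged message and `context` messages on either side of it."""
    start = max(0, flagged_index - context)
    end = min(len(messages), flagged_index + context + 1)
    excerpt = list(messages[start:end])
    captured_at = datetime.now(timezone.utc).isoformat()
    return Alert(reason, excerpt, captured_at, _fingerprint(reason, excerpt, captured_at))


def verify(alert: Alert) -> bool:
    """Recompute the fingerprint; False means the stored evidence was changed."""
    return alert.sha256 == _fingerprint(alert.reason, alert.excerpt, alert.captured_at)


chat = ["hey", "how was school?", "don't tell your parents we talk", "ok", "bye"]
alert = build_alert(chat, flagged_index=2, reason="secrecy request")
print(alert.excerpt)  # ['how was school?', "don't tell your parents we talk", 'ok']
print(verify(alert))  # True
```

The parent sees three messages rather than the whole chat, which keeps the alert focused while still giving enough context to act on.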


AI vs. Standard Controls: A Direct Comparison

To strengthen child protection in digital spaces, it's essential to understand the differences between AI-driven security tools and traditional parental controls. When it comes to messaging safety, these two approaches diverge significantly in performance, particularly in areas that matter most to families.

Traditional parental controls are built on manual blocklists, which focus on filtering specific keywords. In contrast, AI-driven systems go beyond keywords, analyzing the context of conversations to detect subtle signs of cyberbullying or predatory behavior. This advanced capability allows AI to flag risks that might otherwise go unnoticed, offering a more comprehensive approach to online safety.

Another key distinction lies in how quickly these systems respond to threats. AI-powered tools operate in real time, analyzing messages as they arrive. This enables faster detection and response than traditional systems, which rely on human review and predefined rules. In fast-moving situations, the delay of waiting for a parent to spot a problem can make a significant difference.

AI systems also excel in adaptability. They continuously learn from new data, identifying patterns and anomalies without requiring manual updates. Traditional controls, on the other hand, struggle to keep up with emerging threats, often requiring constant human intervention to stay relevant.

Comparison Table: Key Metrics

| Criteria | AI-Driven Security | Traditional Parental Controls |
|---|---|---|
| Detection Accuracy | Contextual analysis catches subtle threats and coded language | Keyword filtering often misses sophisticated risks |
| Response Speed | Real-time threat detection | Delayed responses due to manual updates |
| Privacy Impact | Selective monitoring protects normal conversations | Full access to communications can breach privacy |
| Ease of Use | Automated insights and threat prioritization | Requires manual setup and frequent updates |
| Adaptability | Learns from new threats automatically | Needs manual updates for emerging risks |
| Child Independence | Adjusts monitoring based on age and maturity | Rigid controls that don’t evolve with the child |
| Alert Quality | Contextual, prioritized alerts | Generic notifications that may lead to alert fatigue |

A notable advantage of AI-driven systems is their ability to balance safety with privacy. By analyzing entire conversations for patterns rather than giving parents full access to every message, AI tools help maintain trust between parents and children. This trust is crucial for fostering a healthy parent-child relationship, whereas traditional controls often impose all-or-nothing monitoring that can strain this bond.

AI also brings a smarter approach to screen time management. Instead of enforcing rigid schedules, AI can adjust limits based on a child's behavior and usage patterns. Traditional controls, by contrast, rely on fixed time blocks, which fail to account for context or the nature of the child’s activities.

The gap is especially stark for cyberbullying, which falls heavily on younger users: in 2023, over 75% of cyberbullying complaints came from children under 16. Traditional parental controls often lack the detail and real-time intervention needed to tackle such challenges effectively, highlighting the growing importance of AI-driven solutions in safeguarding young users online.

Best Practices for Age-Appropriate Messaging Security

Finding the right balance between protection and trust is key when it comes to messaging security for kids. Adjusting security settings to fit their age and maturity helps safeguard them while encouraging independence.

Customizing Security Features by Age

Security needs shift as children grow, and the tools you use should reflect those changes. What works for a seven-year-old won’t necessarily suit a teenager.

For younger kids (ages 6–9), strict controls are essential. Content filters powered by AI can block inappropriate material and provide clear activity summaries. Creating designated "safe zones" for online interaction ensures close supervision during this early phase of digital exploration.

As pre-teens (ages 10–12) start to navigate the online world more independently, security settings should evolve. AI tools can adjust monitoring levels to match their maturity. At this stage, it’s helpful to set clear screen time limits and establish rules for safe browsing and respectful online behavior.

Teenagers (ages 13 and older) need a different approach. While they deserve more autonomy, safety measures should still be in place. AI systems can adapt to their behavior, offering selective monitoring that respects their growing independence. Tailored content filters and open discussions about safe online practices can help them make informed decisions.
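The age bands above could be expressed as a simple settings table. The sketch assumes hypothetical setting names and values chosen only to mirror the three stages described here; no product's defaults are implied.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class MonitoringProfile:
    content_filter: str                 # "strict", "moderate", or "light"
    contacts: str                       # who the child may message
    parent_view: str                    # what the parent sees
    daily_limit_minutes: Optional[int]  # None means no fixed daily cap


# (minimum age, profile), ordered from the oldest band down. All values are assumptions.
PROFILES = [
    (13, MonitoringProfile("light", "broader contacts, with risk alerts", "flagged conversations only", None)),
    (10, MonitoringProfile("moderate", "approved contacts", "activity summary plus alerts", 120)),
    (6, MonitoringProfile("strict", "approved contacts only", "detailed activity summary", 60)),
]


def profile_for(age: int) -> MonitoringProfile:
    """Return the profile for the highest age band the child has reached."""
    for min_age, profile in PROFILES:
        if age >= min_age:
            return profile
    raise ValueError("these example profiles start at age 6")


for age in (7, 11, 15):
    p = profile_for(age)
    print(age, p.content_filter, p.parent_view, p.daily_limit_minutes)
```

Keeping the bands in one table makes the graduated approach explicit: moving a child to the next stage is a single change rather than a set of scattered toggles.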

Encouraging Open Communication and Digital Literacy

Teaching kids about online safety isn’t just about setting rules; it’s about having ongoing conversations. Technology can support these efforts but shouldn’t replace the importance of open dialogue. Start by establishing clear guidelines for acceptable use and screen time, and make it easy for them to talk to you about any unsettling online experiences.

Help them recognize signs of suspicious behavior, like unsolicited messages, and encourage them to report anything that feels off. Regular family discussions about their digital experiences create opportunities to address concerns early and reinforce critical thinking skills. These conversations also teach them how to verify information they encounter online.

Balancing safety with independence means teaching strong cybersecurity habits - like using secure passwords - while encouraging a healthy mix of online and offline activities.

Using AI Tools Like Guardii

AI tools like Guardii can be a powerful addition to your digital parenting toolkit. They offer selective monitoring that flags real risks without invading everyday conversations. By focusing on patterns rather than reviewing each message, Guardii strikes a balance between safety and privacy.

This approach helps address concerns that too much monitoring might harm trust within families. Guardii’s user-friendly dashboard provides clear insights and real-time alerts, allowing parents to respond quickly to potential threats while respecting their child’s growing independence.

When using tools like Guardii, it’s crucial to prioritize transparency. Ensure the tool clearly explains how data is collected, stored, and used. Look for features that adapt as your child matures, focusing not just on blocking harmful content but also on educating and empowering them. Features like evidence preservation can help facilitate educational discussions, teaching kids how to navigate the digital world safely.

With real-time alerts and activity reports, tools like Guardii offer a way to stay informed without being overly intrusive. They complement broader AI-driven messaging security measures, supporting parents in creating a safe and trusting digital environment for their children.

Conclusion: Choosing the Right Approach for Your Family

When it comes to deciding between AI-driven messaging security tools and traditional parental controls, the choice often hinges on how well the solution can keep up with new and evolving threats. Traditional methods tend to rely on static rules, which can leave gaps in protection. On the other hand, AI-based tools like Guardii adjust in real time, offering a more dynamic and responsive safeguard for your family.

Concerns about online safety are widespread. A 2022 survey by the American Psychological Association found that 47% of parents worry about their child's online activities, while research from the Cyberbullying Research Center shows that about 15% of kids aged 12-17 experience cyberbullying. These statistics highlight the growing need for smarter, more adaptive solutions to protect children online.

Some experts see a distinct advantage in AI. Dr. Sarah Chen, a child safety specialist, explains:

"AI serves as a vigilant guardian by processing vast data in real time to detect subtle threats and identify adults who may be posing as children. AI's adaptive monitoring delivers tailored protection while supporting healthy digital development."

This level of protection is reflected in parental preferences. According to a study published in the International Journal of Information Management, 71% of parents favor AI-based parental control solutions, citing their ability to adapt and respond effectively to emerging challenges. Unlike traditional tools that simply block content, AI solutions like Guardii help children learn to make better choices online by tailoring guidance to their age, maturity, and behavior.

Guardii strikes a balance between vigilance and trust. It focuses on genuine threats while steering clear of unnecessarily invading everyday interactions. Parents receive actionable alerts and can preserve evidence when needed, all while maintaining transparency and fostering open communication within the family.

Ultimately, the best solution is one that meets your family’s unique needs. Look for tools with clear privacy policies, customizable settings for different age groups, and features that grow with your child. Technology should enhance - not replace - ongoing conversations about staying safe in the digital world.

AI-powered solutions like Guardii offer a forward-thinking approach to keeping kids safe online. By evolving alongside new threats and your child’s growing independence, these tools help create a safer and more supportive digital environment for everyone in the family.

FAQs

How do AI-powered parental controls protect children while respecting their privacy?

AI-powered parental controls use machine learning to keep an eye on messaging activity, spotting potential dangers like predatory behavior or harmful content. Rather than showing parents every message, these tools analyze patterns and context automatically and surface only conversations that look risky, which can make them more accurate and efficient than keyword filters.

By focusing only on suspicious activities and avoiding excessive data collection, these systems strike a balance between protection and privacy. This approach builds trust between parents and children, creating a safer online environment while respecting personal boundaries.

What challenges do parents face with traditional parental controls on messaging apps?

Traditional parental controls for messaging apps present several hurdles. They often fall short when it comes to tracking disappearing messages or encrypted chats, making it tough to keep kids safe online. On top of that, tech-savvy children can sometimes outsmart these controls, leaving parents with a false sense of security.

Another tricky aspect is finding the right balance between privacy and supervision. Many older tools rely on intrusive monitoring methods that can strain the trust between parents and their kids. And let’s face it - no system is perfect. Traditional controls might miss harmful content or predatory behavior, leaving critical gaps in protection. Modern AI-powered solutions aim to tackle these shortcomings, creating a safer online space for children.

How do AI tools like Guardii keep up with children’s changing online habits as they grow?

AI tools such as Guardii are built to keep up with the changing ways children interact online. By analyzing real-time activity and using predictive technology, these tools can fine-tune safety settings to match a child's age and stage of development, ensuring their online experience stays safe and appropriate.

Guardii learns from usage patterns and communication habits, offering personalized protection that grows with your child. It can block harmful content, identify potential risks, and create a safer digital space - all while maintaining respect for their privacy.