AI-Powered Dashboards: How They Keep Kids Safe

AI-powered dashboards are changing how parents protect their kids online. These tools use advanced systems to monitor digital activity, detect risks like cyberbullying or harmful content, and notify parents in real time. Unlike traditional parental controls, they analyze behavior patterns, provide context-aware alerts, and tailor safety measures to each child’s age.

Key highlights:

- Threat Detection: Scans for harmful content, cyberbullying, and predatory behavior.

- Real-Time Alerts: Notifies parents of risks with clear, actionable guidance.

- Privacy-Focused: Balances safety with respect for kids’ digital autonomy.

- Age-Based Protection: Adjusts controls based on developmental stages.

- Compliance with U.S. Laws: Meets privacy standards like COPPA and state regulations.

These dashboards help parents stay informed without constant supervision, offering a safer way to navigate the digital world.

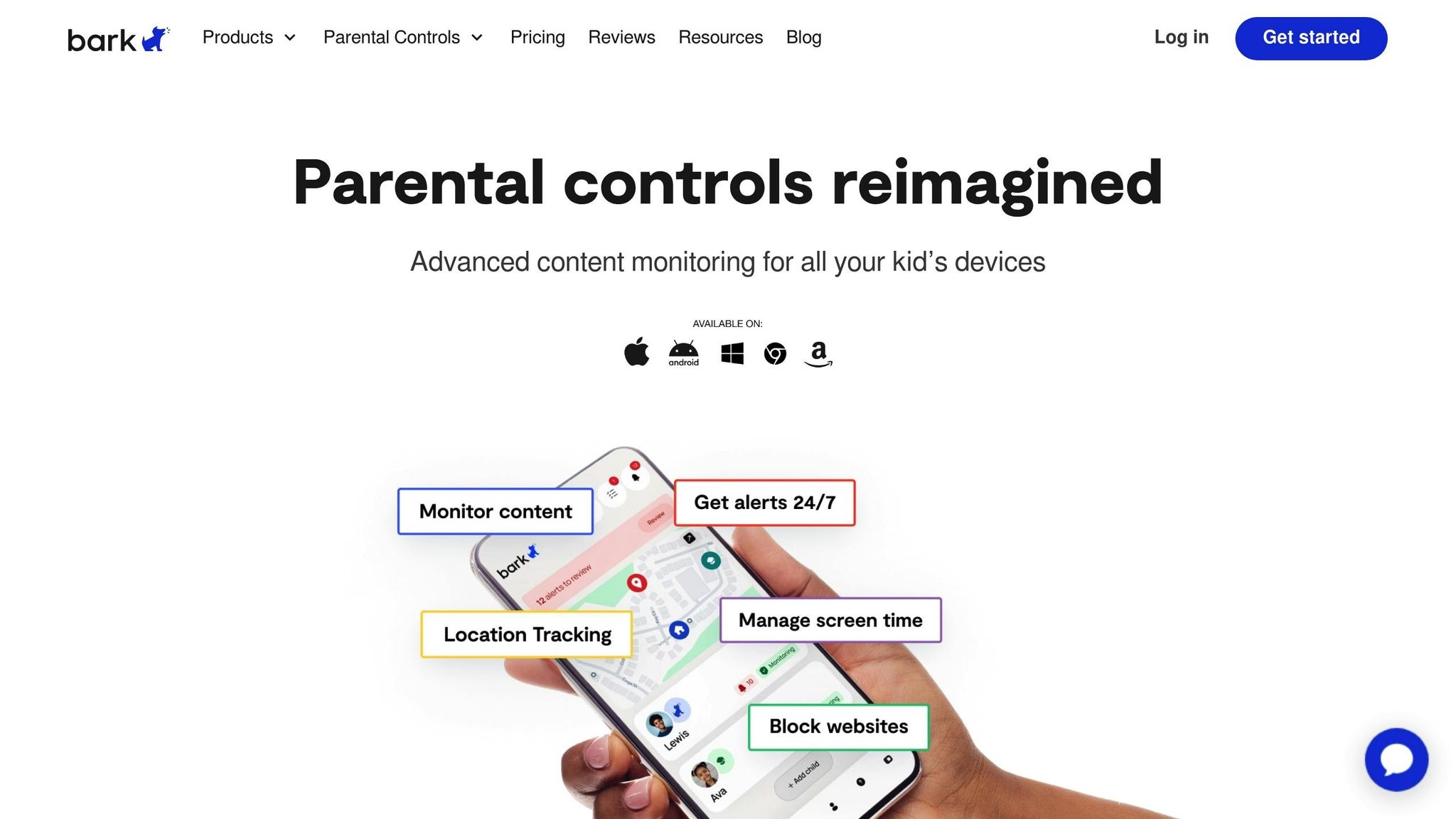


Key Features of AI-Powered Dashboards

AI-powered dashboards bring advanced tools to the table, stepping beyond basic parental controls to provide a robust layer of protection. Using cutting-edge algorithms, they strike a balance between safeguarding users and respecting privacy. Here's how these tools deliver focused, real-time protection.

Harmful Content Detection and Monitoring

AI algorithms work tirelessly to scan online content across various platforms - analyzing posts, images, videos, and direct messages to identify harmful material. This 24/7 monitoring is faster and more thorough than what human moderators can achieve.

These systems are designed to detect bullying behaviors in texts, emails, and social media by analyzing language patterns and sentiment. They can also identify explicit material and flag harmful language. Importantly, they play a vital role in identifying and removing child sexual abuse material (CSAM). When concerning communication patterns are spotted, the system sends alerts to parents, warning them of risks like cyberbullying or interactions with strangers.

Real-Time Alerts and Clear Guidance

One standout feature of AI-powered dashboards is their ability to deliver instant notifications when potential risks arise. These real-time alerts inform parents about activities such as accessing restricted content, spending excessive time on certain apps, or engaging with suspicious contacts. The system also provides data-driven recommendations to help parents manage screen time more effectively.

These alerts are prioritized based on urgency, ensuring that parents can focus on the most critical issues. For example, targeted notifications highlight harmful searches, inappropriate messages, or visits to risky websites. This feature is especially significant given the statistics: in 2023, over 75% of cyberbullying complaints came from children under 16, and a CDC survey revealed that 50.4% of teens aged 12–17 spend more than four hours daily on screens.
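One way to picture urgency-based prioritization is as a sort over pending alerts. The sketch below is a minimal illustration under stated assumptions, not any vendor's implementation: the `Alert` type, the three `Urgency` levels, and the example alert kinds are all hypothetical names chosen for this example.

```python
from dataclasses import dataclass
from enum import IntEnum


class Urgency(IntEnum):
    """Hypothetical urgency scale; real products may use different levels."""
    LOW = 1       # e.g. a screen-time limit was exceeded
    MEDIUM = 2    # e.g. a visit to a restricted website
    HIGH = 3      # e.g. a message from a suspicious contact


@dataclass(frozen=True)
class Alert:
    kind: str
    urgency: Urgency
    minutes_ago: int  # how long ago the event happened


def prioritize(alerts):
    """Return alerts with the most urgent first; ties go to the most recent."""
    return sorted(alerts, key=lambda a: (-a.urgency, a.minutes_ago))


inbox = [
    Alert("screen_time_exceeded", Urgency.LOW, minutes_ago=5),
    Alert("suspicious_contact", Urgency.HIGH, minutes_ago=40),
    Alert("restricted_site_visit", Urgency.MEDIUM, minutes_ago=10),
    Alert("inappropriate_message", Urgency.HIGH, minutes_ago=2),
]

for alert in prioritize(inbox):
    print(alert.urgency.name, alert.kind)
```

Sorting on urgency first and recency second keeps a recent low-priority event, such as a screen-time overrun, from pushing a serious alert down the list.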

Smart Filtering That Understands Context

Smart filtering takes content moderation to the next level by ensuring that only genuine threats are flagged. Unlike traditional filters, which often miss subtle threats or block harmless content, smart filtering uses machine learning and Natural Language Processing (NLP) to grasp the context and sentiment behind text-based content. This technology can identify toxic language while distinguishing between harmless discussions and actual threats.
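The difference between a keyword filter and a context-aware one can be shown with a deliberately small toy. Production systems rely on trained NLP models; the hand-written rule below (a risky word only counts when it appears near a second-person pronoun) is merely a stand-in for that contextual judgment, and the word lists and function names are assumptions made for this illustration.

```python
import re

# Hypothetical word lists, far smaller than anything a real system would use.
RISKY_TERMS = {"kill", "hate", "hurt"}
SECOND_PERSON = {"you", "u", "your", "ur"}


def tokenize(text):
    """Lowercase the text and split it into simple word tokens."""
    return re.findall(r"[a-z']+", text.lower())


def keyword_filter(text):
    """Traditional approach: flag any message containing a risky term."""
    return any(word in RISKY_TERMS for word in tokenize(text))


def context_filter(text, window=3):
    """Toy contextual approach: flag a risky term only when a second-person
    pronoun appears within `window` words of it, i.e. when the language
    looks directed at someone."""
    words = tokenize(text)
    for i, word in enumerate(words):
        if word in RISKY_TERMS:
            nearby = words[max(0, i - window): i + window + 1]
            if any(w in SECOND_PERSON for w in nearby):
                return True
    return False


for message in ["My legs hurt after practice", "I will hurt you after school"]:
    print(message, "|", keyword_filter(message), "|", context_filter(message))
```

The keyword filter flags both messages, while the contextual rule flags only the one aimed at another person. A learned model replaces the pronoun heuristic with a far richer notion of context, but the goal of reducing false alarms is the same.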

A 2023 study by researchers from Seoul National University and LG AI Research highlighted this advancement. Their system, called LATTE (LLMs As ToxiciTy Evaluator), demonstrated a remarkable ability to accurately identify both toxic and non-toxic content.

"First, provided that the definition of toxicity can vary dynamically depending on diverse contexts, it is essential to ensure that the metric is flexible enough to adapt to diverse contexts." - Researchers from Seoul National University and LG AI Research

Contextual understanding is essential in tackling online threats. A 2022 Pew Research Center study found that nearly half (46%) of U.S. teens ages 13 to 17 have experienced online bullying or harassment. Additionally, nearly three in ten children globally have experienced racially motivated cyberbullying, with the highest rates reported in India and the United States. By considering factors like demeaning content and ethical preferences, smart filtering minimizes false alarms while maintaining strong defenses against genuine threats.

These systems also help redirect AI away from areas rife with harmful content, such as hate speech and misinformation, guiding interactions toward safer online experiences. By combining automated detection with contextual insights, smart filtering provides accurate and dependable protection for children navigating the digital world.

Balancing Privacy and Oversight

Protecting kids online while respecting their privacy is a delicate balancing act. AI-powered dashboards tackle this challenge by offering tools that provide parents with crucial safety insights without stepping into every corner of their child’s digital world. Instead of constant monitoring, these platforms focus on strategic oversight, delivering alerts only when necessary to keep kids safe.

Privacy-First Alert System

Modern AI dashboards take a privacy-first approach by alerting parents only when real risks arise. This method allows children to maintain a sense of autonomy while staying protected. Instead of flagging every single online interaction, these systems use advanced algorithms to differentiate between typical behavior and potentially dangerous situations.

For instance, platforms like Guardii notify parents when they detect predatory behavior or harmful content in direct messages. These alerts are specific and actionable, helping parents intervene appropriately. This targeted system fosters trust between parents and kids, as children understand that the focus is on safety, not control.

"Parental controls are most effective when they're used to support and protect – not to control."

Even the way alerts are delivered reflects this philosophy. Parents receive detailed information about potential threats, including evidence that can be preserved for law enforcement if needed, while routine conversations remain private. This ensures transparency without unnecessary intrusion into a child’s everyday activities.

Age-Appropriate Protection Levels

AI dashboards go a step further by tailoring protection levels to a child’s age and maturity. These platforms often include family management features that allow parents to customize settings based on each child’s developmental stage. This means a 7-year-old and a 15-year-old receive protection suited to their vastly different levels of digital understanding and independence.

For younger children, the system might provide broader monitoring and detailed activity summaries. As kids grow older, the focus shifts to identifying more serious risks like cyberbullying, predatory behavior, or harmful content, while giving them more privacy for day-to-day interactions.

Parents can create individual profiles for each family member, setting age-appropriate content filters, managing device usage times, and designating "tech-free zones" in the home. These systems also adapt to each child’s digital habits, learning what is typical behavior and flagging anything unusual.
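Age-based tailoring of this kind can be thought of as a mapping from a child's age to a set of default settings that parents then adjust. The sketch below assumes three illustrative age bands; the cut-off ages, screen-time minutes, and field names are hypothetical and are not taken from any specific product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ProtectionProfile:
    content_filter: str        # "strict", "moderate", or "light"
    daily_screen_minutes: int  # illustrative default, adjustable by parents
    activity_summaries: bool   # detailed summaries for younger children
    alert_on: tuple            # risk categories that trigger a parent alert


def profile_for_age(age):
    """Return hypothetical default settings for a child of the given age."""
    if age < 0:
        raise ValueError("age must be non-negative")
    if age <= 9:
        # Younger children: broader monitoring and detailed summaries.
        return ProtectionProfile(
            "strict", 60, True,
            ("explicit_content", "unknown_contact",
             "cyberbullying", "predatory_behavior"),
        )
    if age <= 12:
        return ProtectionProfile(
            "moderate", 90, True,
            ("explicit_content", "cyberbullying", "predatory_behavior"),
        )
    # Teens: more day-to-day privacy, alerts reserved for serious risks.
    return ProtectionProfile(
        "light", 120, False,
        ("cyberbullying", "predatory_behavior", "harmful_content"),
    )


print(profile_for_age(7))
print(profile_for_age(15))
```

Keeping the defaults in one function makes it easy to revisit them as a child grows, which matches the advice to review controls regularly.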

Open communication is key. Parents should discuss the controls they’re implementing and invite questions from their kids. Revisiting these controls as children grow ensures that the systems evolve alongside their increasing independence. This approach aligns with stringent US privacy standards, creating a safer online environment for kids.

Meeting US Privacy Standards

AI dashboards must also comply with complex federal and state privacy regulations. The Children's Online Privacy Protection Act (COPPA) forms the backbone of these rules, requiring parental consent to collect personal data from children under 13. Violations can result in steep fines, with penalties reaching up to $43,792 per violation.
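The under-13 consent rule described above amounts to a gate in front of any data collection. The following sketch models only that one rule; the function name and parameters are hypothetical, and a real compliance check would involve many more conditions than this.

```python
COPPA_AGE_THRESHOLD = 13  # COPPA applies to children under this age


def may_collect_personal_data(age, verified_parental_consent):
    """Return True if collection passes the under-13 consent rule.

    Simplifying assumption: this models only the rule stated in the text.
    Other federal or state requirements may still apply at any age.
    """
    if age < COPPA_AGE_THRESHOLD:
        return bool(verified_parental_consent)
    return True


print(may_collect_personal_data(10, verified_parental_consent=False))
print(may_collect_personal_data(10, verified_parental_consent=True))
```

Placing the check in a single function means every collection path in a system can call the same gate rather than re-implementing the rule.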

US privacy laws are changing rapidly. As of January 2025, 19 states require age verification to access potentially harmful content. The FTC has also updated its COPPA Rule, introducing new definitions, stricter data retention rules, and expanded consent requirements.

"Technology companies are on notice that [the Texas Attorney General's] office is vigorously enforcing Texas's strong data privacy laws. These investigations are a critical step toward ensuring that social media and AI companies comply with our laws designed to protect children from exploitation and harm." - Ken Paxton, Texas Attorney General

To stay compliant, AI dashboards now include robust age verification systems that accurately confirm users’ ages and secure parental consent. Many platforms also offer tools for parents to manage their child’s online experience, aligning with the trend toward more advanced parental controls. Additionally, local data processing options are becoming more common, reducing reliance on cloud storage and addressing privacy concerns.

Parents have an important role in these protections. By reviewing privacy policies with their kids and discussing the value of data security in age-appropriate terms, families can establish clear rules about what information can be shared. Regularly updating privacy settings ensures that these safeguards remain effective while respecting everyone’s rights.


Early Threat Detection and Response

Expanding on earlier privacy-first measures, early threat detection takes protection a step further by identifying risks before they escalate. AI-powered dashboards operate around the clock, analyzing behavior patterns to catch unusual activity. These systems flag anomalies for quick action, helping shield children from online predators, cyberbullying, and harmful content. This forward-thinking approach paves the way for more precise behavioral monitoring.

Behavioral Monitoring for Warning Signs

AI is especially skilled at understanding a child’s usual online habits and spotting anything out of the ordinary. By monitoring device activity in real time, it examines usage patterns, content interactions, and communication behaviors. When something deviates from the norm - like a sudden interest in concerning material - the system immediately flags it for review.
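A common, simple way to flag a deviation from a personal baseline is a z-score: how many standard deviations today's value sits from the child's own recent average. The sketch below applies it to daily minutes spent in one app. It is an illustrative assumption about how such a check could work, not a description of any product's model, and the threshold of 3.0 is an arbitrary example value.

```python
from statistics import mean, stdev


def is_unusual(history, today, threshold=3.0):
    """Flag `today` if it lies more than `threshold` standard deviations
    from the mean of `history` (the child's own recent baseline)."""
    if len(history) < 2:
        return False  # not enough data to establish a baseline
    baseline = mean(history)
    spread = stdev(history)
    if spread == 0:
        return today != baseline  # any change from a perfectly flat baseline
    return abs(today - baseline) / spread > threshold


# Hypothetical minutes per day spent in a messaging app over the past week.
past_week = [30, 35, 28, 32, 31, 29, 33]

print(is_unusual(past_week, 34))   # within the normal range
print(is_unusual(past_week, 120))  # sharp jump worth a closer look
```

Because the baseline comes from each child's own history, the same 120 minutes could be routine for one child and a clear anomaly for another.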

Unlike basic keyword detection, modern AI digs deeper. It scans for specific terms tied to bullying, self-harm, or inappropriate content, while also considering the context and communication patterns. For instance, it can detect grooming behaviors, inappropriate language, or attempts to gather personal details. It also tracks aggressive language, repeated negative interactions, and other red flags that could indicate bullying.

"AI acts like a vigilant guardian, processing thousands of conversations in real-time to spot patterns that might escape human detection. It's particularly effective at identifying adults who may be posing as children." – Dr. Sarah Chen, child safety expert

Immediate Response to Detected Threats

When a threat is identified, the AI system doesn’t just observe - it acts. Alerts are sent immediately, offering detailed information about the risk so swift action can be taken.

"The technology acts like a vigilant digital guardian", explains Dr. Maria Chen, a cybersecurity expert specializing in child safety. "It can detect subtle signs of harassment that humans might miss, while respecting privacy boundaries."

These systems also block harmful content and suspicious contacts automatically, maintaining continuous protection. Some AI moderation tools have proven capable of removing 90–95% of harmful material before it reaches users. In one case, a middle school counselor used an AI-based tool to uncover and address cyberbullying incidents that might otherwise have gone unnoticed.

Personalized Recommendations for Parents

AI dashboards don’t stop at detection - they provide parents with tailored advice to address potential threats. When an issue arises, parents receive actionable tips, such as how to have open conversations about online safety, explain the misuse of AI in a way kids can understand, and teach them to verify the credibility of information they encounter.

"Ensure your child knows they can talk to you or another safe adult like a teacher if anything worries them online or offline." – Kate Edwards, Associate Head of Child Safety Online, NSPCC

These dashboards also guide parents on discussing online risks, setting screen time rules, and restricting access to unsafe websites or apps. By combining these tools with open communication, parents can create an environment where children feel comfortable sharing their online concerns instead of keeping them hidden. This collaborative approach helps kids navigate the digital world with confidence while staying safe.

User-Friendly Design and Clear Communication

Effective AI dashboards for child safety combine thoughtful design with straightforward communication, making it easier for families to protect their children online. These tools focus on simplicity and clarity, ensuring parents don’t need to be tech experts to keep their kids safe.

Simple Interface and Clear Alerts

Modern AI dashboards are built with user-friendly navigation that feels natural to parents accustomed to everyday apps. They feature clean layouts, easy-to-read fonts, and simple menus. Key actions are highlighted with prominent buttons, making it easy to access important information quickly. Many dashboards also use intuitive color coding - like green for safe activity and red for alerts - so parents can grasp the situation at a glance.

When an issue arises, the system sends clear, actionable notifications. For example, instead of bombarding parents with technical terms, a message might say: "Your child attempted to visit a blocked site at 4:15 PM. Would you like to review this activity?" This kind of plain language ensures parents understand what’s happening and can respond appropriately.
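Turning a raw event into a plain-language notification like the one quoted above is mostly a formatting task. This minimal sketch reproduces that example message and pairs it with the green/red color coding mentioned earlier; the function names and the `STATUS_COLORS` mapping are assumptions made for illustration.

```python
from datetime import time

# Color coding as described in the text: green for safe activity, red for alerts.
STATUS_COLORS = {"safe": "green", "alert": "red"}


def format_clock(t):
    """Format a time as a 12-hour clock string, e.g. 4:15 PM."""
    hour12 = t.hour % 12 or 12
    suffix = "AM" if t.hour < 12 else "PM"
    return f"{hour12}:{t.minute:02d} {suffix}"


def blocked_site_alert(t):
    """Build a plain-language message for a blocked-site event."""
    return (
        f"Your child attempted to visit a blocked site at {format_clock(t)}. "
        "Would you like to review this activity?"
    )


print(STATUS_COLORS["alert"], "|", blocked_site_alert(time(16, 15)))
```

Formatting the clock by hand, rather than relying on locale-dependent formatting codes, keeps the message identical regardless of the device's language settings.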

Dashboards typically display recent alerts, timelines of activity, and quick-access tools, using intuitive icons and color-coded visuals to simplify decision-making. To accommodate all families, many platforms offer multi-language options and accessibility features like screen reader support, adjustable text sizes, and high-contrast modes. This thoughtful design makes these tools accessible and effective for a wide range of users.

Building Trust Through Transparency

Trust is a cornerstone of effective AI dashboards. These tools provide detailed activity logs and clear explanations about what data is being monitored and why. Parents can customize settings to match their child’s needs, focusing on specific concerns like cyberbullying for a middle schooler or predatory behavior for a younger child. This flexibility ensures the system adapts to each family’s unique situation.

Transparency also extends to involving children in the process. Parents can use the dashboard to explain online safety rules and show how monitoring works. This approach helps kids understand that these tools are there to protect them, not to invade their privacy. It also encourages open conversations about responsible online behavior.

Platforms like Guardii take this a step further by focusing on detecting harmful content and predatory behavior in direct messages while respecting privacy. By providing clear alerts and customizable options, these tools empower parents to act confidently and responsibly.

The input of child safety experts, psychologists, and educators shapes these dashboards, ensuring they strike the right balance between safety, privacy, and usability. This expert guidance helps create tools that parents can trust and children can accept, fostering an environment where families can prioritize both safety and open communication in the digital world.

Conclusion: AI-Powered Dashboards as a Complete Safety Solution

AI-powered dashboards bring together advanced tools and privacy-focused features to offer a robust solution for child online safety. By blending early threat detection, real-time monitoring, and transparent practices, these systems create a safety net tailored to each child’s online habits.

With real-time monitoring, parents can act quickly when risks arise, and predictive analytics provide the opportunity to address potential threats before they escalate. This proactive approach aligns with the needs of modern families, offering peace of mind in an increasingly digital world.

What makes these dashboards stand out is their commitment to privacy. By balancing effective monitoring with respect for children’s privacy, these tools build trust within families. Their transparent design ensures children understand that monitoring is there to protect them, not invade their space.

For families in the United States, platforms like Guardii exemplify these qualities. Guardii provides a trusted system that monitors, detects, and blocks harmful content and predatory behavior on direct messaging platforms, giving parents the protection they need without compromising trust.

These data-driven tools remove much of the uncertainty from digital parenting. Parents can focus on guiding their children rather than constantly supervising them, fostering a balanced and secure environment. By bridging safety and trust, AI-powered dashboards help families encourage healthy online habits while ensuring safer digital experiences for children.

FAQs

How do AI-powered dashboards protect kids online while respecting their privacy?

AI-powered dashboards help keep children safe online by utilizing sophisticated tools to monitor and identify harmful content or predatory behavior. These systems work in real time, analyzing text, images, and online interactions to alert parents about potential risks - all while respecting privacy and avoiding unnecessary exposure of personal details.

By concentrating on critical safety threats and steering clear of intrusive tracking, these dashboards serve as digital guardians. They create a safer online space for kids while maintaining a sense of trust and independence between parents and children. This approach ensures kids are protected without feeling like they’re constantly being watched.

How do AI-powered dashboards ensure compliance with U.S. privacy laws like COPPA?

AI-powered dashboards align with COPPA requirements by incorporating strong parental consent measures. For example, they obtain verifiable parental consent before collecting personal data from children under 13. Additionally, they stick to strict data minimization principles, gathering only the information needed to deliver their services and storing it securely to block unauthorized access.

To keep parents in the loop, these dashboards offer clear, straightforward notices explaining what data is collected and how it will be used, all in line with the latest FTC guidelines. By focusing on privacy and safety, they not only safeguard children but also build trust between families and technology.

How can parents personalize AI-powered dashboards to support their child's online safety and growth?

Parents can tailor AI-powered dashboards to suit their child's specific needs by tweaking settings based on age, maturity, and online behavior. For example, they can set up custom content filters to block unsuitable material, impose screen time limits, and track app usage to ensure interactions remain safe and appropriate for their age.

These dashboards also offer insights into your child's online activities, allowing you to spot patterns and adjust settings as they develop. By consistently refining these tools, parents can create a safer online space while promoting balanced and healthy digital habits.