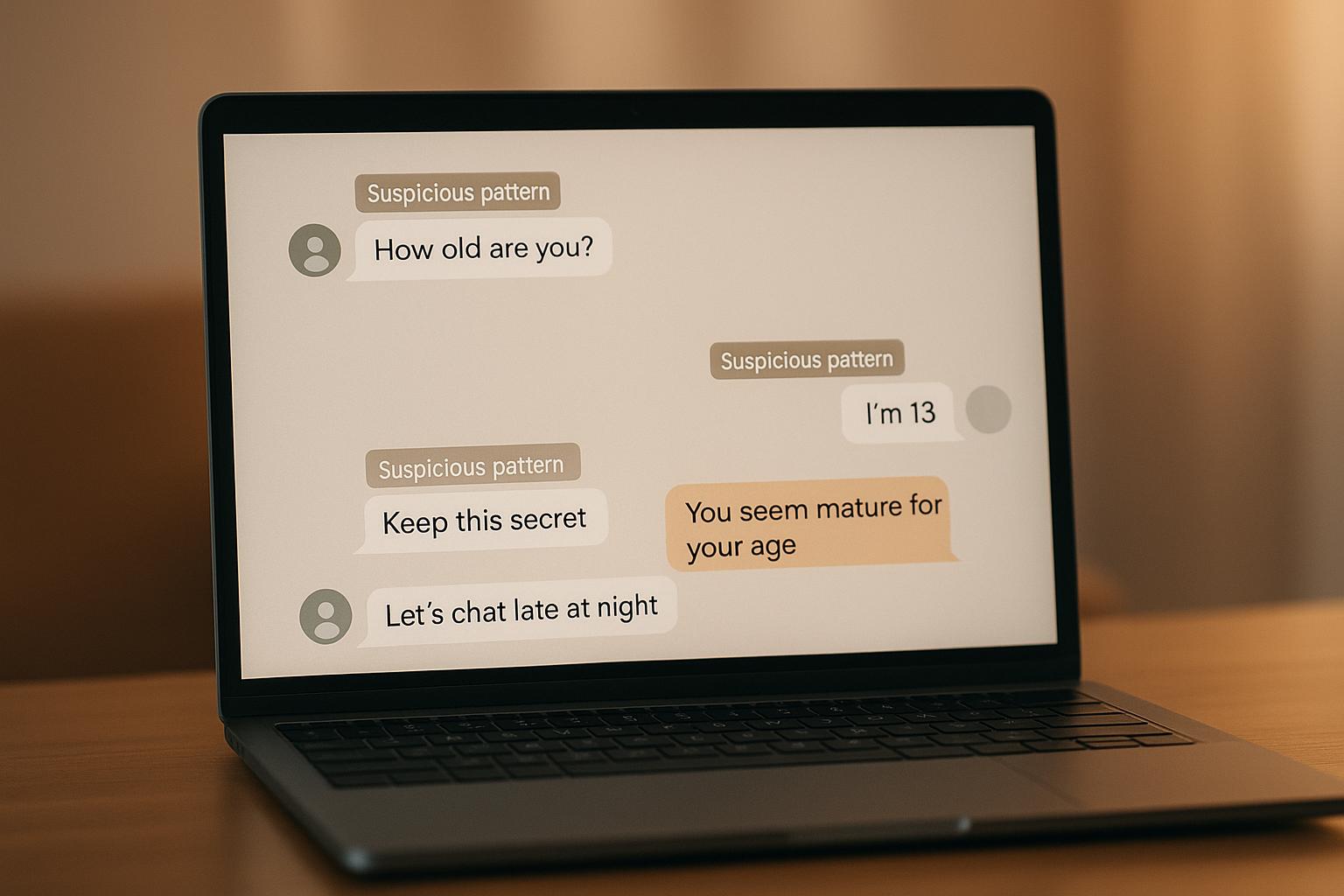

5 Key Predator Grooming Patterns AI Detects

Online predators use calculated steps to manipulate and exploit children online. With kids spending more time on messaging apps, social media, and gaming platforms, traditional safety measures like parental oversight are often insufficient. AI systems, like Guardii, are stepping in to identify grooming behaviors early, offering real-time monitoring and intervention. Here's a quick breakdown of the five grooming tactics AI is designed to detect:

- Flattery and Emotional Manipulation: Predators use excessive praise to build trust and emotional dependency.

- Isolation from Trusted Adults: They create secrecy, discouraging kids from sharing conversations with parents or friends.

- Gradual Introduction of Inappropriate Topics: Conversations shift slowly from harmless to harmful, normalizing inappropriate behavior.

- Testing Boundaries and Desensitization: Predators push limits incrementally, gauging resistance and exploiting vulnerabilities.

- Requesting Personal Information or Meetings: They escalate to seeking sensitive details or arranging in-person encounters.

AI tools analyze patterns, context, and emotional tone to detect these behaviors early, helping protect children before harm occurs. Guardii also ensures flagged interactions are documented for parental review and potential legal action.

How Predator Grooming Works in Digital Spaces

Online grooming is a calculated process where predators build trust with children to exploit them emotionally, psychologically, or sexually. This isn't a random act - it’s a deliberate, step-by-step approach that can stretch over weeks or even months, making it particularly hard to spot with casual observation.

The grooming process typically unfolds in four stages:

First, predators seek out vulnerable children. They look for signs like loneliness, family struggles, or low self-esteem - traits that make children more open to manipulation.

In the relationship-building phase, predators position themselves as trusted friends or mentors. They spend time learning about the child’s hobbies, challenges, and daily life. This phase can last weeks as they work to become the person the child feels understands them best, more than parents or peers do. AI tools can flag these drawn-out conversations, especially when they involve repeated discussions of personal routines.

The isolation and dependency stage is where predators shift gears. They create an "us versus them" narrative, presenting themselves as the child’s main source of emotional support while subtly eroding the child’s trust in family and friends. Secrecy becomes a key theme, with predators convincing children that keeping their conversations private strengthens their "special bond."

In the final exploitation phase, predators introduce inappropriate topics or requests, such as asking for personal details, photos, or even in-person meetings. By this point, the child often feels emotionally tied to the predator, making it harder for them to refuse requests they might otherwise reject. This gradual, multi-step approach in digital spaces makes detection especially challenging, requiring sophisticated AI tools.

Digital platforms add another layer of complexity. Predators often communicate across multiple apps, making it harder for parents to monitor. Features like disappearing messages, private gaming chats, and encrypted platforms allow predators to cover their tracks.

The timeline of grooming further complicates detection. Individual messages might seem innocent, but when viewed as part of a weeks-long pattern, the predatory intent becomes clear. Parents checking their child’s messages occasionally are unlikely to notice these subtle shifts.

AI systems help bridge this gap by analyzing conversation patterns and spotting red flags - like overly frequent compliments or encouragement of secrecy - that signal grooming behavior. These underlying patterns tend to persist even when predators change their wording or move to a different platform.

What sets AI apart is its ability to intervene in real time. Instead of identifying grooming only after harm has occurred, AI can flag concerning behavior early in the relationship-building stage. This early detection is critical, as it allows for intervention before the child becomes deeply emotionally entangled.

1. Flattery and Emotional Manipulation

Flattery often serves as the first step in grooming, offering a glimpse into how predators manipulate trust over time. This isn’t about simple friendliness - it's a calculated strategy designed to lower a child's natural defenses and create a sense of emotional dependency.

Recognizing Behavioral Patterns

AI systems are trained to spot grooming by analyzing specific conversational habits that distinguish manipulative flattery from genuine interactions. Predators tend to escalate emotional intensity quickly, moving from casual conversation to intense, personal compliments. Phrases like "You're so mature for your age" or "You're the only one who understands me" are red flags, as they appear far more often in predatory interactions than in normal conversations between peers.

Machine learning models, trained on vast datasets of chat logs, are particularly adept at identifying these patterns. They track repeated instances of praise and attempts to establish emotional intimacy at an unnatural pace.
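As a rough illustration of the idea above, the sketch below counts how many messages contain one of the flattery phrases quoted earlier. The phrase list and the function names are assumptions made for this example; a production system would learn such signals from labeled data rather than rely on a fixed list.

```python
import re

# Illustrative phrase list taken from the examples in the text above.
# A hard-coded list is an assumption for this sketch, not a real model.
FLATTERY_PATTERNS = [
    re.compile(r"\bso mature for your age\b", re.IGNORECASE),
    re.compile(r"\bonly one who understands me\b", re.IGNORECASE),
]


def flattery_hits(messages):
    """Return the number of messages that match at least one flattery pattern."""
    return sum(
        1 for text in messages
        if any(pattern.search(text) for pattern in FLATTERY_PATTERNS)
    )


def flattery_rate(messages):
    """Fraction of messages containing a flattery phrase (0.0 for an empty list)."""
    return flattery_hits(messages) / len(messages) if messages else 0.0
```

A rate on its own says little; it becomes useful when compared against what is typical for peer conversations or tracked across sessions.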

Context-Aware Analysis

Modern AI doesn’t just look for keywords - it examines the broader context of conversations. For example, it evaluates the age gap between participants, the timing and frequency of compliments, and whether flattery is paired with other suspicious behaviors. By analyzing how quickly emotional language intensifies or whether secrecy becomes a recurring theme, these systems can differentiate between innocent exchanges and manipulative intent. This contextual awareness allows for a more nuanced understanding of predatory behavior.
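One simple way to picture context-aware analysis is a weighted combination of several signals. The sketch below is only that: the field names, weights, and caps are hypothetical values chosen for illustration, and real systems would typically learn them from data.

```python
from dataclasses import dataclass


@dataclass
class ConversationContext:
    age_gap_years: int          # estimated age difference between participants
    compliments_per_day: float  # how often flattery appears
    secrecy_mentions: int       # messages urging the child to keep things private


def context_risk(ctx: ConversationContext) -> float:
    """Combine contextual signals into a score between 0.0 and 1.0.

    The weights (0.3, 0.3, 0.4) and caps are illustrative assumptions,
    not tuned values.
    """
    age_signal = min(ctx.age_gap_years / 10, 1.0)
    flattery_signal = min(ctx.compliments_per_day / 5, 1.0)
    secrecy_signal = min(ctx.secrecy_mentions / 3, 1.0)
    score = 0.3 * age_signal + 0.3 * flattery_signal + 0.4 * secrecy_signal
    return round(score, 3)
```

The point of the design is that no single signal decides the outcome: an occasional compliment between peers scores low, while flattery combined with a large age gap and repeated secrecy requests scores high.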

Tracking Patterns Over Time

AI tools also monitor how flattery evolves across multiple interactions. Predators often begin with mild compliments, gradually increasing their praise to build trust. Over time, this flattery may be followed by attempts to isolate the child or introduce inappropriate topics. By analyzing the timing and sequence of messages, AI can detect grooming efforts even when they unfold slowly over weeks or months.
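To make the idea of tracking change over time concrete, the following sketch fits a straight line to a per-session count (for example, compliments per session) and reports its slope. The function name and the choice of a least-squares slope are assumptions for illustration; other trend measures would work as well.

```python
def escalation_slope(counts_per_session):
    """Least-squares slope of a per-session count series.

    A positive slope means the signal is rising from session to session.
    Returns 0.0 when fewer than two sessions exist.
    """
    n = len(counts_per_session)
    if n < 2:
        return 0.0
    mean_x = (n - 1) / 2
    mean_y = sum(counts_per_session) / n
    numerator = sum(
        (x - mean_x) * (y - mean_y) for x, y in enumerate(counts_per_session)
    )
    denominator = sum((x - mean_x) ** 2 for x in range(n))
    return numerator / denominator
```

A flat series such as `[3, 3, 3]` yields a slope of zero, while a steadily growing series yields a positive slope even if each individual session looks unremarkable.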

Sentiment and Keyword Analysis

Transformer-based language models such as DistilBERT and LLaMA can be fine-tuned to analyze the emotional tone of conversations. They can distinguish between natural, positive exchanges and manipulative flattery by focusing on contextual cues, the intensity of compliments, and sudden emotional bonding. Because they read each message in the context of the surrounding conversation, these models can pick up subtle shifts in tone that keyword lists and simpler classifiers might miss, making them powerful tools in detecting harmful behavior.

Platforms like Guardii leverage these AI-driven methods to monitor direct messaging platforms. By analyzing language patterns, emotional tone, and conversational context, they aim to identify and block harmful interactions before manipulation can take root.

2. Isolation from Trusted Adults

Once predators gain a child’s trust through flattery, they often take the next step: isolating them from trusted adults. This tactic allows them to control conversations without interference from parents or guardians.

Recognizing Patterns of Isolation

AI systems are equipped to spot isolation tactics by analyzing secretive language. For instance, if a predator encourages a child to hide their online interactions from their parents or other trusted figures, the AI flags these messages by examining the context and specific language patterns.
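The sketch below shows one simplified way secretive language could be labeled. The cue names and regular expressions are hypothetical examples written for this article; real systems rely on trained models and context rather than a short pattern list.

```python
import re

# Hypothetical cue categories and patterns, for illustration only.
SECRECY_CUES = {
    "keep_secret": re.compile(
        r"\b(our (little )?secret|keep (this|it) (a secret|between us))\b",
        re.IGNORECASE,
    ),
    "hide_from_adults": re.compile(
        r"\bdon['’]?t tell (your )?(mom|dad|parents|teacher|anyone)\b",
        re.IGNORECASE,
    ),
    "delete_history": re.compile(
        r"\bdelete (this|these|the|our) (chat|messages?|conversation)\b",
        re.IGNORECASE,
    ),
}


def secrecy_cues(text):
    """Return the sorted names of the secrecy cues found in a message."""
    return sorted(
        name for name, pattern in SECRECY_CUES.items() if pattern.search(text)
    )
```

Returning cue names rather than a yes/no answer lets a later step weigh them against context, such as who is speaking and how often the cues recur.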

Tracking Shifts in Communication

Predators often escalate isolation efforts by moving conversations from public or monitored platforms to private channels. AI tools monitor this progression, identifying when discussions transition to direct messages, video calls, or other less-regulated apps.
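A minimal sketch of tracking that progression follows. It assumes each message is already tagged with a channel label; the labels and the set of "monitored" channels are hypothetical choices for this example.

```python
# Hypothetical channel labels; which channels count as monitored is an assumption.
MONITORED = {"public_chat", "group_chat"}


def private_shifts(channel_sequence):
    """List (index, from_channel, to_channel) for each move off a monitored channel."""
    shifts = []
    for i in range(1, len(channel_sequence)):
        previous, current = channel_sequence[i - 1], channel_sequence[i]
        if previous in MONITORED and current not in MONITORED:
            shifts.append((i, previous, current))
    return shifts
```

For a sequence that starts in `public_chat` and moves to `direct_message`, the function reports the position of the move, which can then be compared against other signals such as secrecy requests made shortly before it.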

Identifying Concealment Strategies

Advanced AI technology also scans for keywords linked to concealment. Predators might suggest using apps disguised as harmless tools to hide their communications. These subtle cues are critical in identifying potential risks.

Guardii’s AI-powered monitoring actively analyzes these behaviors across various messaging platforms. By detecting changes in conversation patterns and the use of secretive language, it plays a vital role in protecting children from being cut off from their trusted support systems.

3. Gradual Introduction of Inappropriate Topics

Once predators have gained trust and isolated their target, they often shift their tactics to more harmful behaviors. They begin introducing inappropriate content gradually, making the behavior seem normal over time. This step-by-step approach is designed to avoid alarming the child while furthering the predator’s agenda. These tactics build on the earlier phases of flattery and isolation.

Recognizing Behavioral Patterns

AI systems are particularly skilled at spotting the subtle ways predators escalate conversations. What might start as innocent chats about relationships, growing up, or physical changes can slowly evolve into more explicit discussions. Predators often start with casual mentions of adult topics, then move to direct questions and, eventually, requests for explicit images or discussions.

AI detection tools track these shifts by analyzing how conversations change over time, flagging when topics move from harmless to concerning.

For instance, AI can detect when an adult begins asking personal questions about a child’s body, their relationships with peers, or experiences with physical intimacy. While these questions might seem harmless on their own, when viewed as part of a larger pattern, they raise red flags.

Context Matters

One of the strengths of modern AI systems is their ability to evaluate context. A conversation about puberty between a parent and child is vastly different from a similar discussion initiated by a stranger online.

AI algorithms take into account factors like the relationship between the participants, the child’s age, the tone of the conversation, and the setting. This context-aware analysis helps differentiate between legitimate educational discussions and predatory grooming attempts.

Timing and frequency also play a role. Predators often space out inappropriate comments across multiple conversations to avoid suspicion. However, AI systems can track these interactions over time, identifying patterns that might otherwise go unnoticed.

Tracking Long-Term Patterns

Predators typically don’t jump straight into explicit content. Instead, they escalate their behavior gradually, testing boundaries along the way. Temporal analysis helps AI systems detect these shifts by examining how interactions evolve over weeks or months.

By creating a timeline of conversations, AI tools can identify concerning patterns that might not be obvious when looking at individual messages. For example, predators may introduce mildly inappropriate topics, observe the child’s reaction, and either retreat or push further based on the response. AI systems are designed to recognize these cycles of testing and escalation.
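The test-retreat-escalate cycle described above can be sketched as a pass over a timeline of severity levels. The sketch assumes an upstream classifier has already assigned each conversation an integer severity (0 for harmless, higher for more concerning); that scale and the function name are assumptions for illustration.

```python
def escalation_cycles(severity_by_conversation):
    """Count retreat-then-push-further cycles in a severity timeline.

    A cycle is counted when severity drops below the running peak and a
    later conversation then exceeds that peak.
    """
    cycles = 0
    peak = None
    retreated = False
    for level in severity_by_conversation:
        if peak is None:
            peak = level
        elif level < peak:
            retreated = True
        elif level > peak:
            if retreated:
                cycles += 1
            peak = level
            retreated = False
    return cycles
```

A steadily rising timeline contains no cycles by this definition, while a timeline such as `[0, 1, 0, 0, 2, 1, 3]` contains two: each new peak follows a step back.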

Combining Keywords and Sentiment Analysis

AI tools go beyond simple word detection by analyzing both the language and tone of messages. Predators often use subtle or misleading language to manipulate their targets, framing inappropriate questions or comments as “helpful” or “educational.”

Sentiment analysis can uncover manipulative undertones that might not be obvious at first glance. For instance, a predator may disguise harmful intentions by presenting them as guidance or support. AI can detect this emotional manipulation.

Keyword analysis has also advanced to recognize intent. Instead of just flagging specific words, AI systems analyze how common terms are used in unusual ways or when innocent words are combined in ways that suggest inappropriate motives.

Guardii’s AI system integrates these methods to strengthen its ability to protect children. By analyzing behavioral patterns, understanding context, tracking long-term interactions, and examining both keywords and sentiment, it identifies concerning conversations early - before they escalate into more serious harm.

4. Testing Boundaries and Desensitization

After laying the groundwork in earlier stages, predators move into a calculated phase where they push limits to normalize harmful behavior. This phase is all about testing boundaries and gradually desensitizing children to inappropriate requests. Predators use this time to measure resistance and pinpoint vulnerabilities they can exploit. It’s a deliberate strategy that sets the stage for even more manipulative tactics later on.

Recognizing Behavioral Patterns

AI systems are highly effective at spotting the subtle ways predators test boundaries. These individuals often escalate their behavior in small, deliberate steps, alternating between crossing lines and retreating when they sense resistance. This back-and-forth approach creates a cycle where children slowly become accustomed to inappropriate requests without fully realizing the manipulation.

Some common tactics include urging children to delete message histories, requesting increasingly personal photos, or introducing questionable games. AI tools monitor these escalating behaviors, flagging conversations where adults systematically push for more inappropriate interactions.

Context-Sensitive Detection

Boundary testing doesn’t look the same in every situation - it varies depending on the relationship dynamics. For example, a predator targeting a shy, compliant child may use gentler, more persistent tactics, while they might take a more direct approach with a confident, assertive child. AI systems are designed to analyze these nuances, identifying manipulation attempts across different personality types and situations.

Timing plays a critical role too. Predators often choose moments when children are alone, stressed, or seeking emotional support to test boundaries. AI tools can pick up on these patterns, flagging interactions that escalate during vulnerable times. By combining an understanding of context with timing, these systems can better detect a predator’s intent.

Tracking Temporal Patterns

Desensitization doesn’t happen overnight - it’s a slow process that unfolds over time. This makes tracking the sequence of events essential for detection. AI systems monitor how requests evolve, identifying when isolated incidents are actually part of a larger, calculated effort to break down boundaries.

Predators often space out their inappropriate requests to avoid raising immediate suspicion. For instance, they might make a questionable comment, then shift back to normal conversation for weeks before testing again. AI tools can identify when these boundary tests become more frequent or intense, signaling an escalating threat.
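One way to express "becoming more frequent" is to check whether the gaps between flagged events keep shrinking. The sketch below assumes the dates of flagged boundary tests are already known; the function names and the minimum of three events are illustrative choices.

```python
from datetime import date


def gaps_in_days(event_dates):
    """Days between consecutive flagged events, in chronological order."""
    ordered = sorted(event_dates)
    return [(later - earlier).days for earlier, later in zip(ordered, ordered[1:])]


def is_accelerating(event_dates, min_events=3):
    """True when every gap between flagged events is shorter than the one before."""
    gaps = gaps_in_days(event_dates)
    if len(event_dates) < min_events or len(gaps) < 2:
        return False
    return all(later < earlier for earlier, later in zip(gaps, gaps[1:]))
```

For events on January 1, January 22, February 1, and February 4, the gaps are 21, 10, and 3 days, so the sequence counts as accelerating. Evenly spaced events do not.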

Analyzing Language and Sentiment

Another critical layer of detection involves examining the language predators use and the emotional tone behind their messages. In many cases, predators disguise inappropriate requests as something harmless - calling explicit photos “art” or framing inappropriate conversations as “educational.”

AI systems analyze these manipulative language patterns, focusing on how predators combine inappropriate requests with tactics like excessive praise, emotional manipulation, or subtle threats. By tracking shifts in tone and sentiment during these phases, AI can detect manipulation even when predators avoid using blatantly problematic keywords.

Guardii’s AI system integrates all these methods - behavioral tracking, context analysis, temporal monitoring, and language sentiment analysis - to identify boundary testing and desensitization in real-time. By catching these patterns early, it can step in before predators succeed in breaking down a child’s natural defenses.

5. Requesting Personal Information or Meetings

As grooming progresses, predators often escalate their tactics, shifting toward requests for personal information and arranging face-to-face meetings. Once trust has been cultivated, they grow bolder in seeking sensitive details such as home addresses, school names, daily routines, and more. This stage is particularly dangerous as it bridges the gap between online manipulation and potential real-world harm.

Recognizing Behavioral Patterns

AI systems are particularly adept at detecting the behavioral changes that signal when predators enter a phase of information gathering. These individuals often become more persistent, employing tactics like time pressure to extract private details. Their approach usually follows a predictable pattern - starting with vague questions about general locations and gradually narrowing to specifics, such as exact addresses or times when children are likely to be alone.
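The narrowing pattern can be sketched by ranking the kinds of detail being requested. The categories and their ranks below are hypothetical, and the sketch assumes an upstream step has already labeled each request with a category.

```python
# Hypothetical specificity ranks: higher means more identifying.
SPECIFICITY = {
    "country": 1,
    "city": 2,
    "neighborhood": 3,
    "school": 4,
    "home_address": 5,
    "alone_times": 5,
}


def is_narrowing(request_types, low=2, high=4):
    """True if a general question (rank <= low) is later followed by a specific one (rank >= high)."""
    seen_general = False
    for request in request_types:
        rank = SPECIFICITY.get(request)
        if rank is None:
            continue  # ignore request types this sketch does not know about
        if rank <= low:
            seen_general = True
        elif rank >= high and seen_general:
            return True
    return False
```

This only captures the general-to-specific progression. A direct request for a home address with no lead-up would need to be caught by a separate rule.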

Predators often disguise their intentions by framing these requests as acts of kindness or exciting opportunities. For example, they may offer to send gifts, suggest meeting in public spaces like malls or theaters, or create elaborate stories to justify their demands. Additionally, they may increase their frequency of contact - moving from occasional messages to daily or even hourly communication - to foster a false sense of closeness and urgency, making it harder for children to resist.
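The increase in contact frequency mentioned above can be measured directly from message timestamps. In this sketch the threefold `factor` is an arbitrary illustrative threshold, and comparing the first and most recent days is a simplification.

```python
from collections import Counter
from datetime import datetime


def daily_counts(timestamps):
    """Map each calendar day to the number of messages sent that day."""
    return Counter(ts.date() for ts in timestamps)


def contact_surge(timestamps, factor=3):
    """True if the most recent day has at least `factor` times the first day's messages."""
    counts = daily_counts(timestamps)
    if len(counts) < 2:
        return False
    days = sorted(counts)
    return counts[days[-1]] >= factor * counts[days[0]]
```

A more careful version would compare rolling averages rather than two single days, since one busy afternoon between friends should not be enough to raise a flag.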

Context-Aware Detection

AI tools go beyond surface-level monitoring by analyzing the history of interactions, communication patterns, and signs of vulnerability in the child. This enables the system to flag conversations that veer into dangerous territory. For instance, predators often time their requests for personal details during emotionally charged moments, such as when a child is seeking comfort or has just received excessive praise or gifts.

Geographic and demographic factors also play a role in detection. By considering local norms - like common meeting spots, school schedules, and conversational patterns - AI can identify requests that deviate from what would typically be considered acceptable or safe.

Tracking the Timeline of Escalation

The shift from casual conversation to explicit information requests often follows a clear timeline, which AI systems are designed to monitor. Predators typically start with minor, seemingly harmless questions before escalating to more invasive demands. If a child hesitates or refuses, they may revert to earlier grooming strategies - using flattery, emotional manipulation, or subtle pressure to wear down resistance.

By tracking these incremental changes, AI tools can recognize when interactions cross from benign to predatory, allowing for timely intervention.

Keyword and Sentiment Analysis

AI-powered language analysis plays a critical role in identifying when meeting requests are cloaked in manipulation. These systems evaluate both the explicit wording and the emotional undertones of conversations. Common strategies include framing the interaction as private or exclusive, discouraging children from discussing it with parents, or offering enticing rewards in exchange for personal information.

Sentiment analysis helps uncover emotional manipulation tactics, such as excessive flattery, manufactured urgency, or veiled threats. These cues often accompany requests for sensitive details, signaling a broader strategy to normalize unsafe behavior.

Predators may also attempt to make meeting proposals seem harmless by suggesting public locations, encouraging the child to bring friends, or creating elaborate scenarios about special events. However, legitimate adult figures rarely push for personal information or arrange meetings through private messaging, making such behavior a red flag.

Guardii’s AI tools are designed to intercept these risky exchanges, stopping them before they escalate into potential real-world danger. By combining behavioral analysis, contextual understanding, and language evaluation, the system provides a robust defense against predatory tactics.

How Guardii Uses AI for Child Safety

Guardii takes the fight against online predators to the next level by combining its analysis of grooming patterns with cutting-edge AI technology. The platform monitors direct messages across popular social media platforms in real time, targeting the very spaces where predatory behavior often unfolds. This AI-driven system forms the backbone of Guardii's protective features.

Real-Time Threat Detection and Analysis

At the core of Guardii's defense is its AI Grooming Detection Engine, which uses natural language processing (NLP) to spot manipulative and harmful conversation patterns as they happen. By analyzing both context and subtle language cues, the system can identify grooming behaviors and respond immediately.

To ensure accuracy, Guardii employs Smart Filtering technology. This feature helps distinguish between normal, age-appropriate interactions and genuinely concerning content. When harmful messages are detected, they’re instantly removed from the child’s view and flagged for further review - without disrupting everyday communication.
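The filtering step described above can be pictured as a threshold on a risk score. Guardii's actual logic is not documented here, so the names, the 0.8 threshold, and the two-outcome design below are assumptions made purely for illustration.

```python
from enum import Enum


class Action(Enum):
    DELIVER = "deliver"
    HIDE_AND_FLAG = "hide_and_flag"


def choose_action(risk_score, block_threshold=0.8):
    """Pick an action for a message given a risk score in [0.0, 1.0].

    The threshold is a hypothetical value, not a documented setting.
    """
    if not 0.0 <= risk_score <= 1.0:
        raise ValueError("risk_score must be between 0.0 and 1.0")
    if risk_score >= block_threshold:
        return Action.HIDE_AND_FLAG
    return Action.DELIVER
```

Where the threshold sits is the main trade-off: set it too low and ordinary conversations are interrupted, set it too high and harmful messages get through.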

Behavioral Fingerprinting and Pattern Recognition

Guardii’s Predator Watchlist & Behavioral Fingerprinting technology creates anonymous profiles of suspicious users by comparing their interactions to known grooming markers. Using advanced machine learning, the system examines patterns in conversations, detecting threats with a high level of precision.
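As a loose illustration of the concept, the sketch below replaces a user identifier with a salted hash and measures how many known markers a user's behavior matches. The marker names, the function names, and the use of SHA-256 are assumptions for this example and do not describe how Guardii builds its profiles.

```python
import hashlib

# Hypothetical marker names for illustration.
KNOWN_MARKERS = frozenset({
    "excessive_flattery",
    "secrecy_request",
    "platform_shift",
    "personal_info_request",
})


def anonymous_id(user_id, salt):
    """Derive a stable pseudonymous identifier from a user ID and a secret salt."""
    digest = hashlib.sha256((salt + user_id).encode("utf-8")).hexdigest()
    return digest[:16]


def marker_overlap(observed_markers):
    """Fraction of the known markers that appear in the observed set."""
    return len(KNOWN_MARKERS & set(observed_markers)) / len(KNOWN_MARKERS)
```

Note that a salted hash is pseudonymous rather than truly anonymous: anyone holding the salt can recompute the identifier for a known user ID, so the salt needs to be protected.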

Comprehensive Content Protection

Guardii doesn’t stop at text. Its Image & Video Scan Engine is designed to identify harmful visual content, such as nudity or sexually explicit material. When flagged, these images or videos are immediately quarantined, and parents are notified to take appropriate action.

Additionally, the platform offers Comprehensive Activity Monitoring, which provides parents with detailed logs of messages, app activity, media shared, and device usage. These insights enhance the AI’s ability to differentiate between normal communication and activity that might signal a threat.

Parent Dashboard and Transparent Monitoring

The Parent Dashboard gives families a clear view of their child’s digital safety without overwhelming them with unnecessary details. Parents can track key metrics like "Threats Blocked", "Safety Score", and the platforms being monitored. This streamlined approach offers peace of mind without requiring parents to sift through every message.

When concerning activity is detected, the dashboard sends instant alerts with clear explanations and guidance on next steps. This minimizes false alarms while ensuring parents can act quickly when it matters most.

Evidence Preservation and Law Enforcement Integration

For serious threats, Guardii securely compiles evidence in a format that meets legal standards, making it easy to share with law enforcement. This feature ensures that predatory behavior is documented and ready for use in investigations.
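One common technique for making stored records tamper-evident is a hash chain, where each entry's hash covers the previous entry's hash. The sketch below shows that general technique only; it is an assumption that something similar would suit this use case, and it makes no claim about the format Guardii uses or about what any court requires.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def _entry_hash(prev_hash, record):
    """Hash the previous hash together with a canonical JSON form of the record."""
    payload = json.dumps(record, sort_keys=True)
    return hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()


def append_record(chain, record):
    """Append a record to the chain, linking it to the previous entry's hash."""
    prev_hash = chain[-1]["hash"] if chain else GENESIS_HASH
    chain.append({
        "record": record,
        "prev_hash": prev_hash,
        "hash": _entry_hash(prev_hash, record),
    })
    return chain


def verify_chain(chain):
    """Return True if no entry has been altered, removed, or reordered."""
    prev_hash = GENESIS_HASH
    for entry in chain:
        if entry["prev_hash"] != prev_hash:
            return False
        if entry["hash"] != _entry_hash(prev_hash, entry["record"]):
            return False
        prev_hash = entry["hash"]
    return True
```

If any stored message is edited after the fact, its hash no longer matches and verification fails from that entry onward.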

The platform also includes simple reporting tools, allowing parents to escalate threats to authorities with just a few clicks. This seamless process supports law enforcement efforts to hold online predators accountable.

Adaptive Protection Levels

Children’s online behaviors and risks evolve as they grow, and Guardii adapts to meet those changing needs. Its age-appropriate protection adjusts monitoring levels while maintaining constant, 24/7 AI-powered vigilance in the background.
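Age-based adjustment can be pictured as a lookup from age to a monitoring profile. The age bands, level names, and thresholds in this sketch are invented for illustration and are not Guardii's actual settings.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ProtectionLevel:
    name: str
    block_threshold: float  # lower values block more aggressively


def level_for_age(age):
    """Return a hypothetical protection level for a child's age in years."""
    if age < 0:
        raise ValueError("age must be non-negative")
    if age < 10:
        return ProtectionLevel("strict", 0.5)
    if age < 14:
        return ProtectionLevel("balanced", 0.65)
    return ProtectionLevel("light", 0.8)
```

In this sketch only the sensitivity changes with age; monitoring itself stays on at every level, matching the description above.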

With 80% of online grooming cases starting in private direct messages, Guardii focuses its efforts exactly where children are most at risk, providing targeted protection where it’s needed the most.

Conclusion

Online predators often rely on five core tactics - flattery, isolation, gradual escalation, boundary testing, and probing for personal details - to exploit children. Recognizing these patterns is vital, but what truly changes the game is AI's ability to identify these behaviors in real time and act swiftly to safeguard children.

Unlike older methods like keyword filtering or manual reviews, AI tools excel at detecting and preventing online grooming by understanding context and subtle manipulation tactics. These systems can spot troubling patterns early, allowing intervention before a situation escalates. Platforms such as Guardii showcase how this proactive approach can transform online safety.

Guardii's approach to protection combines real-time threat detection with behavioral analysis, systematically addressing each grooming tactic. When a predator attempts to manipulate a child, the AI detects the behavior and intervenes before harm occurs. Additionally, Guardii ensures evidence is preserved and works seamlessly with law enforcement, helping to document and report predatory actions. This process not only holds offenders accountable but also prevents them from targeting other children.

For parents concerned about digital safety, AI-powered tools like Guardii offer dependable protection. Since many grooming attempts happen through private messages, these tools focus on monitoring vulnerable spaces, providing children with the security they need online.

FAQs

How does AI detect and flag predatory grooming behaviors in online conversations?

AI works to spot predatory grooming behaviors by examining patterns in the language and actions within online chats. Through advanced machine learning, it picks up on warning signs like manipulative phrasing, coercive strategies, or an unusual interest in personal information. These tools are trained to identify distinct stages of grooming and can flag questionable interactions as they happen.

By analyzing tone, word choices, and communication styles, AI can distinguish between normal conversations and harmful ones, providing early warnings and helping to safeguard children from potential dangers.

What can parents do to support AI tools like Guardii in keeping their children safe from online predators?

Parents play a key role in keeping their kids safe online, even with AI tools like Guardii in the mix. It all starts with honest, ongoing conversations about online dangers, like predatory behavior, and teaching children how to spot and steer clear of suspicious interactions.

Establish clear rules for internet use. This might include setting limits on screen time, deciding which apps or platforms are okay to use, and using parental controls to keep an eye on their activity. Make sure to pay attention to messaging platforms to ensure they're being used responsibly. Above all, build trust with your kids by creating an environment where they feel safe talking about their online experiences without fear of being judged.

When parents combine these practical steps with the power of AI tools, they can create a solid plan to help protect their children in today’s digital landscape.

How does Guardii protect children's privacy while detecting predatory behaviors?

Guardii employs cutting-edge AI to keep harmful content at bay while respecting user privacy. It achieves this by monitoring and blocking threats without ever accessing or storing personal data. By adhering to rigorous data protection standards and maintaining clear, transparent privacy policies, Guardii strikes a balance between safety and privacy, building trust between parents and their children.